mirror of https://github.com/crewAIInc/crewAI.git (synced 2026-02-24 06:48:24 +00:00)

Compare commits: devin/1749 ... lg-update- (9 commits)

| SHA1 |
|---|
| ccd98cc511 |
| 5c51349a85 |
| 5b740467cb |
| e9d9dd2a79 |
| 3e74cb4832 |
| db3c8a49bd |
| 8a37b535ed |
| e6ac1311e7 |
| b0d89698fd |

@@ -1,184 +0,0 @@
# A2A Protocol Integration

CrewAI supports the A2A (Agent-to-Agent) protocol, which lets your crews take part in remote agent interoperability. A CrewAI crew can be exposed as a remotely accessible agent that communicates with other A2A-compatible systems.

## Overview

The A2A protocol is an agent interoperability standard introduced by Google that enables bidirectional communication between agents. CrewAI's A2A integration provides:

- **Remote Interoperability**: Expose crews as A2A-compatible agents
- **Bidirectional Communication**: Enable full-duplex agent interactions
- **Protocol Compliance**: Full support for A2A specifications
- **Transport Flexibility**: Support for multiple transport protocols

## Installation

A2A support is available as an optional dependency. Quote the requirement so that shells such as zsh do not treat the brackets as a glob pattern:

```bash
pip install "crewai[a2a]"
```
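
Because the dependency is optional, code that uses the A2A features may want to check whether it is installed first. The sketch below is a minimal illustration and rests on two assumptions. First, the extra installs the A2A SDK under the import name `a2a`, as the `from a2a.types import ...` line in this document suggests. Second, the helper name `a2a_available` is hypothetical and is not part of CrewAI.

```python
import importlib.util


def a2a_available(module_name: str = "a2a") -> bool:
    """Return True if the optional A2A SDK can be imported.

    Hypothetical helper: find_spec() only locates the top-level module
    without importing it, so the check has no side effects.
    """
    return importlib.util.find_spec(module_name) is not None


if a2a_available():
    print("A2A SDK found; crewai.a2a features can be imported")
else:
    print('A2A SDK missing; install it with: pip install "crewai[a2a]"')
```

The package's own `__init__.py` uses the same idea in a different form: it wraps the import in `try`/`except ImportError` so that `crewai` still imports cleanly when the extra is absent.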

## Basic Usage

### Creating an A2A Server

```python
from crewai import Agent, Crew, Task
from crewai.a2a import CrewAgentExecutor, start_a2a_server

# Create your crew
agent = Agent(
    role="Assistant",
    goal="Help users with their queries",
    backstory="A helpful AI assistant"
)

task = Task(
    description="Help with: {query}",
    expected_output="A helpful answer to the user's query",
    agent=agent
)

crew = Crew(agents=[agent], tasks=[task])

# Wrap the crew in an A2A executor
executor = CrewAgentExecutor(crew)

# Start the A2A server (0.0.0.0 listens on all network interfaces)
start_a2a_server(executor, host="0.0.0.0", port=10001)
```

### Custom Configuration

```python
import uvicorn
from crewai.a2a import CrewAgentExecutor, create_a2a_app

# Create an executor with custom content types
# (`crew` is the crew defined in the previous example)
executor = CrewAgentExecutor(
    crew=crew,
    supported_content_types=['text', 'application/json', 'image/png']
)

# Create a custom A2A app
app = create_a2a_app(
    executor,
    agent_name="My Research Crew",
    agent_description="A specialized research and analysis crew",
    transport="starlette"
)

# Run it with your own ASGI server
uvicorn.run(app, host="0.0.0.0", port=8080)
```

## Key Features

### CrewAgentExecutor

The `CrewAgentExecutor` class wraps a CrewAI crew so that it implements the A2A `AgentExecutor` interface:

- **Asynchronous Execution**: Crews run asynchronously within the A2A protocol
- **Task Management**: Automatic handling of task lifecycle and cancellation
- **Error Handling**: Robust error handling with A2A-compliant responses
- **Output Conversion**: Automatic conversion of crew outputs to A2A artifacts

### Server Utilities

Convenience functions for starting A2A servers:

- `start_a2a_server()`: Quick server startup with default configuration
- `create_a2a_app()`: Create custom A2A applications for advanced use cases

## Protocol Compliance

CrewAI's A2A integration provides full protocol compliance:

- **Agent Cards**: Automatic generation of agent capability descriptions
- **Task Execution**: Asynchronous task processing with event queues
- **Artifact Management**: Conversion of crew outputs to A2A artifacts
- **Error Handling**: A2A-compliant error responses and status codes

## Use Cases

### Remote Agent Networks

Expose CrewAI crews as part of larger agent networks:

```python
from multiprocessing import Process

from crewai.a2a import CrewAgentExecutor, start_a2a_server

# create_*_crew() are placeholders for your own crew factory functions
crew_factories = {10001: create_research_crew, 10002: create_analysis_crew, 10003: create_writing_crew}

def serve(create_crew, port):
    start_a2a_server(CrewAgentExecutor(create_crew()), port=port)

if __name__ == "__main__":
    # start_a2a_server() is expected to block while serving, so run each
    # crew's A2A server in its own process, on its own port
    for port, create_crew in crew_factories.items():
        Process(target=serve, args=(create_crew, port)).start()
```

### Cross-Platform Integration

Enable CrewAI crews to work with other agent frameworks:

```python
# CrewAI crew accessible to other A2A-compatible systems
executor = CrewAgentExecutor(crew)
start_a2a_server(executor, host="0.0.0.0", port=10001)

# Other systems can now invoke this crew remotely
```

## Advanced Configuration

### Custom Agent Cards

```python
from a2a.types import AgentCapabilities, AgentCard, AgentSkill

# Custom agent card for specialized capabilities
agent_card = AgentCard(
    name="Specialized Research Crew",
    description="Advanced research and analysis capabilities",
    url="http://localhost:10001/",  # where the A2A server is reachable
    version="2.0.0",
    defaultInputModes=["text"],
    defaultOutputModes=["text"],
    capabilities=AgentCapabilities(
        streaming=True,
        pushNotifications=False
    ),
    skills=[
        AgentSkill(
            id="research",
            name="Research Analysis",
            description="Comprehensive research and analysis",
            tags=["research", "analysis", "data"]
        )
    ]
)
```
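
On the wire, an agent card is a JSON document that other A2A clients fetch to discover what an agent can do. The stdlib-only sketch below builds an equivalent card as a plain dictionary and serializes it. It assumes the camelCase JSON field names used by the A2A specification (`defaultInputModes`, `pushNotifications`, and so on). The `build_agent_card` helper and the URL are illustrative placeholders and are not part of CrewAI or the A2A SDK.

```python
import json


def build_agent_card(name: str, description: str, url: str, version: str) -> dict:
    """Build a minimal A2A-style agent card as a plain dict (illustrative only)."""
    return {
        "name": name,
        "description": description,
        "url": url,  # base URL where the A2A server is reachable (placeholder)
        "version": version,
        "defaultInputModes": ["text"],
        "defaultOutputModes": ["text"],
        "capabilities": {"streaming": True, "pushNotifications": False},
        "skills": [
            {
                "id": "research",
                "name": "Research Analysis",
                "description": "Comprehensive research and analysis",
                "tags": ["research", "analysis", "data"],
            }
        ],
    }


card = build_agent_card(
    name="Specialized Research Crew",
    description="Advanced research and analysis capabilities",
    url="http://localhost:10001/",
    version="2.0.0",
)
# Serialize the card the way a server would return it to a discovering client
print(json.dumps(card, indent=2))
```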

### Error Handling

The A2A integration includes comprehensive error handling:

- **Validation Errors**: Input validation with clear error messages
- **Execution Errors**: Crew execution errors converted to A2A artifacts
- **Cancellation**: Proper task cancellation support
- **Timeouts**: Configurable timeout handling

## Best Practices

1. **Resource Management**: Monitor crew resource usage in server environments
2. **Error Handling**: Implement proper error handling in crew tasks
3. **Security**: Use appropriate authentication and authorization
4. **Monitoring**: Monitor A2A server performance and health
5. **Scaling**: Consider load balancing for high-traffic scenarios

## Limitations

- **Optional Dependency**: A2A support requires additional dependencies
- **Transport Support**: Currently supports Starlette transport only
- **Synchronous Crews**: Crews execute synchronously within the async A2A context
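
The last limitation is the familiar problem of calling blocking code from an event loop. One way to handle it, combined with a configurable timeout, is sketched below using only the standard library. This is not CrewAI's actual executor. A synchronous callable such as a crew's `kickoff` is offloaded to a worker thread with `asyncio.to_thread` and bounded with `asyncio.wait_for`. `run_blocking_with_timeout` and `slow_crew` are hypothetical names.

```python
import asyncio
import time
from typing import Any, Callable


async def run_blocking_with_timeout(func: Callable[..., Any], *args: Any, timeout: float) -> Any:
    """Run a blocking callable in a worker thread without stalling the event loop.

    Raises asyncio.TimeoutError if it takes longer than `timeout` seconds.
    The timeout only stops *waiting*: Python cannot kill the worker thread,
    so the blocking call keeps running in the background until it returns.
    """
    return await asyncio.wait_for(asyncio.to_thread(func, *args), timeout=timeout)


def slow_crew(query: str, delay: float) -> str:
    # Stand-in for a synchronous crew.kickoff(...) call
    time.sleep(delay)
    return f"report on {query}"


async def main() -> None:
    print(await run_blocking_with_timeout(slow_crew, "A2A", 0.01, timeout=1.0))
    try:
        await run_blocking_with_timeout(slow_crew, "A2A", 0.3, timeout=0.05)
    except asyncio.TimeoutError:
        print("crew run timed out")


asyncio.run(main())
```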

## Examples

See the `examples/a2a_integration_example.py` file for a complete working example of A2A integration with CrewAI.

@@ -200,6 +200,37 @@ Deploy the crew or flow to [CrewAI Enterprise](https://app.crewai.com).
```
- Reads your local project configuration.
- Prompts you to confirm the environment variables (like `OPENAI_API_KEY`, `SERPER_API_KEY`) found locally. These will be securely stored with the deployment on the Enterprise platform. Ensure your sensitive keys are correctly configured locally (e.g., in a `.env` file) before running this.

### 11. Organization Management

Manage your CrewAI Enterprise organizations.

```shell Terminal
crewai org [COMMAND] [OPTIONS]
```

#### Commands:

- `list`: List all organizations you belong to
```shell Terminal
crewai org list
```

- `current`: Display your currently active organization
```shell Terminal
crewai org current
```

- `switch`: Switch to a specific organization
```shell Terminal
crewai org switch <organization_id>
```

<Note>
You must be authenticated to CrewAI Enterprise to use these organization management commands.
</Note>

- **Create a deployment** (continued):
  - Links the deployment to the corresponding remote GitHub repository (it usually detects this automatically).

- **Deploy the Crew**: Once you are authenticated, you can deploy your crew or flow to CrewAI Enterprise.

@@ -29,6 +29,10 @@ my_crew = Crew(

From this point on, your crew will have planning enabled, and the tasks will be planned before each iteration.

<Warning>
When planning is enabled, crewAI will use `gpt-4o-mini` as the default LLM for planning, which requires a valid OpenAI API key. Since your agents might be using different LLMs, this could cause confusion if you don't have an OpenAI API key configured or if you're experiencing unexpected behavior related to LLM API calls.
</Warning>

#### Planning LLM

Now you can define the LLM that will be used to plan the tasks.

@@ -32,6 +32,7 @@ The Enterprise Tools Repository includes:
- **Customizability**: Provides the flexibility to develop custom tools or utilize existing ones, catering to the specific needs of agents.
- **Error Handling**: Incorporates robust error handling mechanisms to ensure smooth operation.
- **Caching Mechanism**: Features intelligent caching to optimize performance and reduce redundant operations.
- **Asynchronous Support**: Handles both synchronous and asynchronous tools, enabling non-blocking operations.

## Using CrewAI Tools

@@ -177,6 +178,62 @@ class MyCustomTool(BaseTool):
        return "Tool's result"
```

## Asynchronous Tool Support

CrewAI supports asynchronous tools. These let you implement non-blocking operations, such as network requests, file I/O, or other async work, without blocking the main execution thread.
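
The benefit shows up when several I/O-bound operations are in flight at once. The plain-`asyncio` sketch below involves no CrewAI code. `fetch_page` is a hypothetical stand-in for a network call. The sketch runs two simulated requests concurrently, so the total wall time is close to the longest single call rather than the sum of both.

```python
import asyncio
import time


async def fetch_page(name: str, delay: float) -> str:
    # Simulated network request; awaiting sleep() yields control to the event loop
    await asyncio.sleep(delay)
    return f"{name} done"


async def main() -> tuple[list[str], float]:
    start = time.perf_counter()
    # Both coroutines wait at the same time instead of one after the other
    results = await asyncio.gather(fetch_page("a", 0.2), fetch_page("b", 0.2))
    return results, time.perf_counter() - start


results, elapsed = asyncio.run(main())
print(results, f"{elapsed:.2f}s")  # elapsed is roughly 0.2s, not 0.4s
```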

### Creating Async Tools

You can create async tools in two ways:

#### 1. Using the `tool` Decorator with Async Functions

```python Code
import asyncio

from crewai.tools import tool

@tool("fetch_data_async")
async def fetch_data_async(query: str) -> str:
    """Asynchronously fetch data based on the query."""
    # Simulate async operation
    await asyncio.sleep(1)
    return f"Data retrieved for {query}"
```

#### 2. Implementing Async Methods in Custom Tool Classes

```python Code
import asyncio

from crewai.tools import BaseTool

class AsyncCustomTool(BaseTool):
    name: str = "async_custom_tool"
    description: str = "An asynchronous custom tool"

    async def _run(self, query: str = "") -> str:
        """Asynchronously run the tool"""
        # Your async implementation here
        await asyncio.sleep(1)
        return f"Processed {query} asynchronously"
```

### Using Async Tools

Async tools work in both standard Crew workflows and Flow-based workflows:

```python Code
from crewai import Agent, Crew, Task
from crewai.flow.flow import Flow, start

async_custom_tool = AsyncCustomTool()

# In standard Crew
agent = Agent(
    role="researcher",
    goal="Research topics with async tools",
    backstory="A researcher who relies on non-blocking tools",
    tools=[async_custom_tool],
)
task = Task(description="Research AI agents", expected_output="A short summary", agent=agent)

# In Flow
class MyFlow(Flow):
    @start()
    async def begin(self):
        crew = Crew(agents=[agent], tasks=[task])
        result = await crew.kickoff_async()
        return result
```

CrewAI handles the execution of both synchronous and asynchronous tools automatically, so you don't need to call them differently.
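
Conceptually, this means a framework has to detect whether a tool is a coroutine function. It then either calls the tool directly or drives it to completion on an event loop. The sketch below illustrates that idea with `inspect.iscoroutinefunction`. `invoke_tool` is a hypothetical helper, and this is not necessarily how CrewAI implements it internally.

```python
import asyncio
import inspect
from typing import Any, Callable


def invoke_tool(func: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
    """Call a sync or async tool function and return its plain result.

    Hypothetical dispatcher: async functions are run to completion with
    asyncio.run(), which must not be called from inside a running event loop.
    """
    if inspect.iscoroutinefunction(func):
        return asyncio.run(func(*args, **kwargs))
    return func(*args, **kwargs)


def sync_tool(query: str) -> str:
    return f"sync result for {query}"


async def async_tool(query: str) -> str:
    await asyncio.sleep(0.01)  # simulated non-blocking I/O
    return f"async result for {query}"


print(invoke_tool(sync_tool, "docs"))
print(invoke_tool(async_tool, "docs"))
```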

### Utilizing the `tool` Decorator

```python Code

@@ -9,7 +9,12 @@
},
"favicon": "images/favicon.svg",
"contextual": {
"options": ["copy", "view", "chatgpt", "claude"]
"options": [
"copy",
"view",
"chatgpt",
"claude"
]
},
"navigation": {
"tabs": [

@@ -201,6 +206,7 @@
"observability/arize-phoenix",
"observability/langfuse",
"observability/langtrace",
"observability/maxim",
"observability/mlflow",
"observability/openlit",
"observability/opik",

@@ -256,7 +262,8 @@
"enterprise/features/tool-repository",
"enterprise/features/webhook-streaming",
"enterprise/features/traces",
"enterprise/features/hallucination-guardrail"
"enterprise/features/hallucination-guardrail",
"enterprise/features/integrations"
]
},
{

185 docs/enterprise/features/integrations.mdx (Normal file)

@@ -0,0 +1,185 @@
---
title: Integrations
description: "Connected applications for your agents to take actions."
icon: "plug"
---

## Overview

Enable your agents to authenticate with any OAuth-enabled provider and take actions. From Salesforce and HubSpot to Google and GitHub, we've got you covered with 16+ integrated services.

<Frame>

</Frame>

## Supported Integrations

### **Communication & Collaboration**
- **Gmail** - Manage emails and drafts
- **Slack** - Workspace notifications and alerts
- **Microsoft** - Office 365 and Teams integration

### **Project Management**
- **Jira** - Issue tracking and project management
- **ClickUp** - Task and productivity management
- **Asana** - Team task and project coordination
- **Notion** - Page and database management
- **Linear** - Software project and bug tracking
- **GitHub** - Repository and issue management

### **Customer Relationship Management**
- **Salesforce** - CRM account and opportunity management
- **HubSpot** - Sales pipeline and contact management
- **Zendesk** - Customer support ticket management

### **Business & Finance**
- **Stripe** - Payment processing and customer management
- **Shopify** - E-commerce store and product management

### **Productivity & Storage**
- **Google Sheets** - Spreadsheet data synchronization
- **Google Calendar** - Event and schedule management
- **Box** - File storage and document management

And more to come!

## Prerequisites

Before using Authentication Integrations, ensure you have:

- A [CrewAI Enterprise](https://app.crewai.com) account. You can get started with a free trial.

## Setting Up Integrations

### 1. Connect Your Account

1. Navigate to [CrewAI Enterprise](https://app.crewai.com)
2. Go to the **Integrations** tab: https://app.crewai.com/crewai_plus/connectors
3. Click **Connect** on your desired service in the Authentication Integrations section
4. Complete the OAuth authentication flow
5. Grant the permissions your use case requires
6. Get your Enterprise Token from your [CrewAI Enterprise](https://app.crewai.com) account page: https://app.crewai.com/crewai_plus/settings/account

<Frame>

</Frame>

### 2. Install Integration Tools

All you need is the latest version of the `crewai-tools` package.

```bash
uv add crewai-tools
```

## Usage Examples

### Basic Usage
<Tip>
All the services you have authenticated with are available as tools, so all you need to do is add `CrewaiEnterpriseTools` to your agent.
</Tip>

```python
from crewai import Agent, Task, Crew
from crewai_tools import CrewaiEnterpriseTools

# Get enterprise tools (includes the Gmail tools if Gmail is connected)
enterprise_tools = CrewaiEnterpriseTools(
    enterprise_token="your_enterprise_token"
)
# print the tools
print(enterprise_tools)

# Create an agent with Gmail capabilities
email_agent = Agent(
    role="Email Manager",
    goal="Manage and organize email communications",
    backstory="An AI assistant specialized in email management and communication.",
    tools=enterprise_tools  # already a list of tools, so don't wrap it in [...]
)

# Task to send an email
email_task = Task(
    description="Draft and send a follow-up email to john@example.com about the project update",
    agent=email_agent,
    expected_output="Confirmation that email was sent successfully"
)

# Run the task
crew = Crew(
    agents=[email_agent],
    tasks=[email_task]
)

# Run the crew
crew.kickoff()
```

### Filtering Tools

```python
from crewai import Agent, Task, Crew
from crewai_tools import CrewaiEnterpriseTools

enterprise_tools = CrewaiEnterpriseTools(
    actions_list=["gmail_find_email"]  # only the gmail_find_email tool will be available
)
gmail_tool = enterprise_tools[0]

gmail_agent = Agent(
    role="Gmail Manager",
    goal="Manage gmail communications and notifications",
    backstory="An AI assistant that helps coordinate gmail communications.",
    tools=[gmail_tool]
)

notification_task = Task(
    description="Find the email from john@example.com",
    agent=gmail_agent,
    expected_output="Email found from john@example.com"
)

# Run the task
crew = Crew(
    agents=[gmail_agent],
    tasks=[notification_task]
)
crew.kickoff()
```
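
The `actions_list` argument behaves like an allowlist over action names. The stdlib-only sketch below illustrates that filtering idea. The `filter_actions` helper and the extra action names `gmail_send_email` and `slack_send_message` are hypothetical, and this is not the `crewai_tools` implementation.

```python
from typing import Iterable, List, Optional


def filter_actions(available: Iterable[str], allowlist: Optional[List[str]] = None) -> List[str]:
    """Keep only allowlisted action names, preserving their original order.

    With no allowlist, every available action is kept.
    """
    names = list(available)
    if allowlist is None:
        return names
    allowed = set(allowlist)
    return [name for name in names if name in allowed]


# Hypothetical action names exposed by connected integrations
available = ["gmail_send_email", "gmail_find_email", "slack_send_message"]
print(filter_actions(available, ["gmail_find_email"]))
```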

## Best Practices

### Security
- **Principle of Least Privilege**: Only grant the minimum permissions required for your agents' tasks
- **Regular Audits**: Periodically review connected integrations and their permissions
- **Secure Credentials**: Never hardcode credentials; use CrewAI's secure authentication flow

### Filtering Tools
On a deployed crew, you can specify which actions are available for each integration from the settings page of the service you connected to.

<Frame>

</Frame>

### Scoped Deployments for Multi-User Organizations
You can deploy your crew and scope each integration to a specific user. For example, a crew that connects to Google can use a specific user's Gmail account.

<Tip>
This is useful for multi-user organizations where you want to scope the integration to a specific user.
</Tip>

Use the `user_bearer_token` to scope an integration to a specific user: when the crew is kicked off, it authenticates with the integration using that user's bearer token. If the user is not logged in, the crew will not use any connected integrations. To authenticate with the integrations deployed with the crew instead, use the default bearer token.

<Frame>

</Frame>

### Getting Help

<Card title="Need Help?" icon="headset" href="mailto:support@crewai.com">
Contact our support team for assistance with integration setup or troubleshooting.
</Card>

@@ -277,22 +277,23 @@ This pattern allows you to combine direct data passing with state updates for ma

One of CrewAI's most powerful features is the ability to persist flow state across executions. This enables workflows that can be paused, resumed, and even recovered after failures.

### The @persist Decorator
### The @persist() Decorator

The `@persist` decorator automates state persistence, saving your flow's state at key points in execution.
The `@persist()` decorator automates state persistence, saving your flow's state at key points in execution.
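
To make the idea concrete, here is a deliberately simplified, stdlib-only sketch of a persist-after-method decorator that snapshots a dict state into SQLite. It is not CrewAI's implementation; that is what `@persist()` from `crewai.flow.persistence` provides. The names `persist_after`, `flow_states` and `CounterFlow` are hypothetical and were chosen for illustration.

```python
import functools
import json
import sqlite3

# In-memory database standing in for a real persistence backend
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE flow_states (flow_id TEXT, method TEXT, state TEXT)")


def persist_after(method):
    """Save a JSON snapshot of self.state after the wrapped method returns."""

    @functools.wraps(method)
    def wrapper(self, *args, **kwargs):
        result = method(self, *args, **kwargs)
        conn.execute(
            "INSERT INTO flow_states VALUES (?, ?, ?)",
            (self.flow_id, method.__name__, json.dumps(self.state)),
        )
        return result

    return wrapper


class CounterFlow:
    def __init__(self, flow_id: str):
        self.flow_id = flow_id
        self.state = {"count": 0}

    def first_step(self):  # not persisted
        self.state["count"] = 1

    @persist_after
    def important_step(self):  # state is saved after this method
        self.state["count"] += 1


flow = CounterFlow("demo")
flow.first_step()
flow.important_step()
rows = conn.execute("SELECT method, state FROM flow_states").fetchall()
print(rows)
```

Restoring a flow would then mean reading the latest row for a given `flow_id` back into `state` before the first method runs.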

#### Class-Level Persistence

When applied at the class level, `@persist` saves state after every method execution:
When applied at the class level, `@persist()` saves state after every method execution:

```python
from crewai.flow.flow import Flow, listen, persist, start
from crewai.flow.flow import Flow, listen, start
from crewai.flow.persistence import persist
from pydantic import BaseModel

class CounterState(BaseModel):
    value: int = 0

@persist  # Apply to the entire flow class
@persist()  # Apply to the entire flow class
class PersistentCounterFlow(Flow[CounterState]):
    @start()
    def increment(self):
@@ -319,10 +320,11 @@ print(f"Second run result: {result2}") # Will be higher due to persisted state

#### Method-Level Persistence

For more granular control, you can apply `@persist` to specific methods:
For more granular control, you can apply `@persist()` to specific methods:

```python
from crewai.flow.flow import Flow, listen, persist, start
from crewai.flow.flow import Flow, listen, start
from crewai.flow.persistence import persist

class SelectivePersistFlow(Flow):
    @start()
@@ -330,7 +332,7 @@ class SelectivePersistFlow(Flow):
        self.state["count"] = 1
        return "First step"

    @persist  # Only persist after this method
    @persist()  # Only persist after this method
    @listen(first_step)
    def important_step(self, prev_result):
        self.state["count"] += 1

BIN docs/images/enterprise/crew_connectors.png (Normal file)
Binary file not shown. After: Size: 1.2 MiB

BIN docs/images/enterprise/enterprise_action_auth_token.png (Normal file)
Binary file not shown. After: Size: 54 KiB

BIN docs/images/enterprise/filtering_enterprise_action_tools.png (Normal file)
Binary file not shown. After: Size: 362 KiB

BIN docs/images/enterprise/user_bearer_token.png (Normal file)
Binary file not shown. After: Size: 49 KiB

@@ -22,7 +22,7 @@ Watch this video tutorial for a step-by-step demonstration of the installation p
<Note>
**Python Version Requirements**

CrewAI requires `Python >=3.10 and <=3.13`. Here's how to check your version:
CrewAI requires `Python >=3.10 and <3.14`. Here's how to check your version:
```bash
python3 --version
```
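
The same check can also be done from inside Python, for example at the top of a setup script. The small sketch below uses only `sys.version_info`. The `is_supported_python` helper is hypothetical, and its bounds mirror the `>=3.10` and `<3.14` requirement stated above.

```python
import sys
from typing import Optional, Sequence


def is_supported_python(version: Optional[Sequence[int]] = None) -> bool:
    """Return True if (major, minor) falls within >=3.10 and <3.14."""
    major_minor = tuple((version or sys.version_info)[:2])
    return (3, 10) <= major_minor < (3, 14)


if not is_supported_python():
    print(f"Unsupported Python {sys.version.split()[0]}; CrewAI needs >=3.10,<3.14")
```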

|

||||

|

||||

152

docs/observability/maxim.mdx

Normal file

152

docs/observability/maxim.mdx

Normal file

@@ -0,0 +1,152 @@

|

||||

---

|

||||

title: Maxim Integration

|

||||

description: Start Agent monitoring, evaluation, and observability

|

||||

icon: bars-staggered

|

||||

---

|

||||

|

||||

# Maxim Integration

|

||||

|

||||

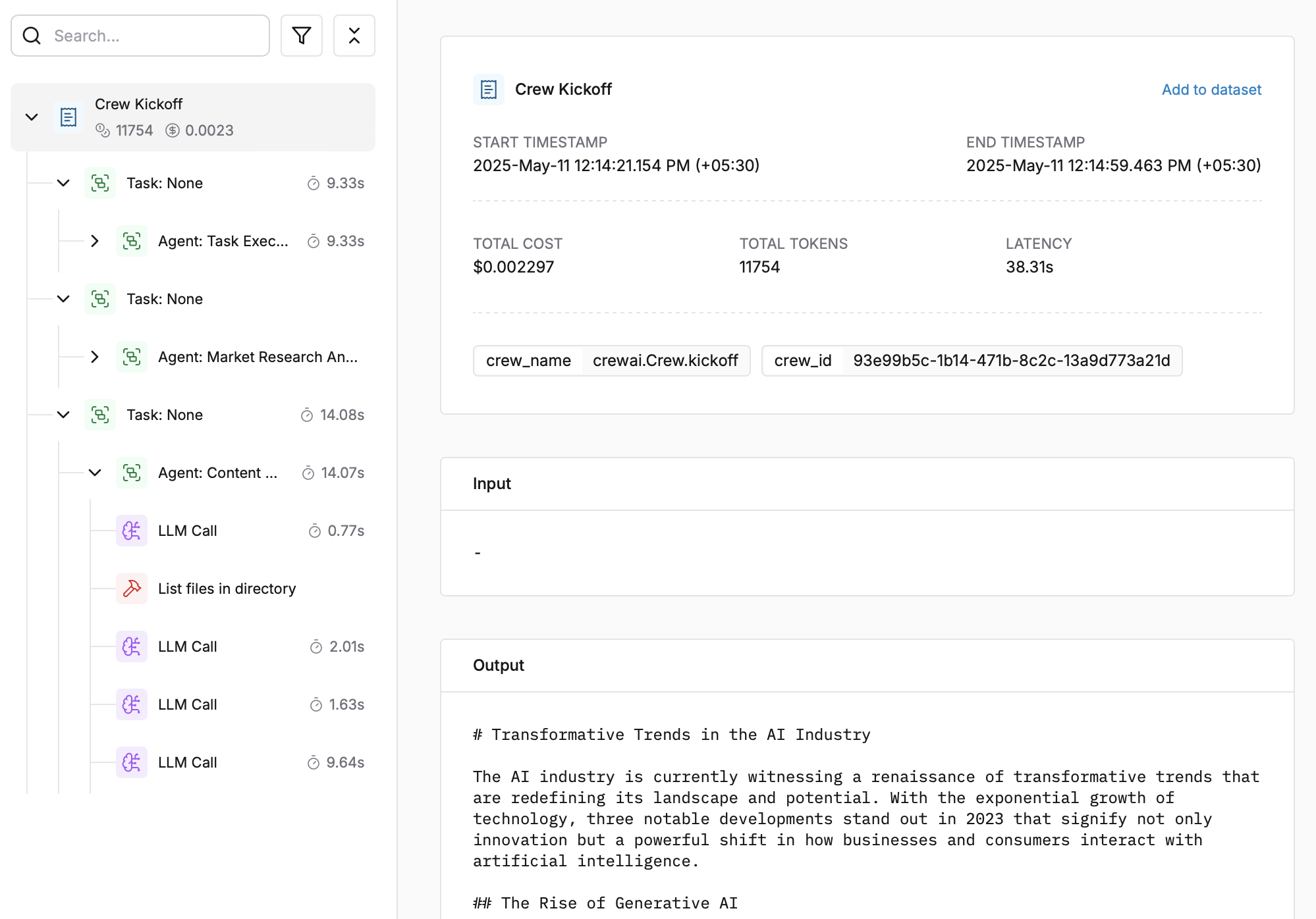

Maxim AI provides comprehensive agent monitoring, evaluation, and observability for your CrewAI applications. With Maxim's one-line integration, you can easily trace and analyse agent interactions, performance metrics, and more.

|

||||

|

||||

|

||||

## Features: One Line Integration

|

||||

|

||||

- **End-to-End Agent Tracing**: Monitor the complete lifecycle of your agents

|

||||

- **Performance Analytics**: Track latency, tokens consumed, and costs

|

||||

- **Hyperparameter Monitoring**: View the configuration details of your agent runs

|

||||

- **Tool Call Tracking**: Observe when and how agents use their tools

|

||||

- **Advanced Visualisation**: Understand agent trajectories through intuitive dashboards

|

||||

|

||||

## Getting Started

|

||||

|

||||

### Prerequisites

|

||||

|

||||

- Python version >=3.10

|

||||

- A Maxim account ([sign up here](https://getmaxim.ai/))

|

||||

- A CrewAI project

|

||||

|

||||

### Installation

|

||||

|

||||

Install the Maxim SDK via pip:

|

||||

|

||||

```python

|

||||

pip install maxim-py>=3.6.2

|

||||

```

|

||||

|

||||

Or add it to your `requirements.txt`:

|

||||

|

||||

```

|

||||

maxim-py>=3.6.2

|

||||

```

|

||||

|

||||

|

||||

### Basic Setup

|

||||

|

||||

### 1. Set up environment variables

|

||||

|

||||

```python

|

||||

### Environment Variables Setup

|

||||

|

||||

# Create a `.env` file in your project root:

|

||||

|

||||

# Maxim API Configuration

|

||||

MAXIM_API_KEY=your_api_key_here

|

||||

MAXIM_LOG_REPO_ID=your_repo_id_here

|

||||

```
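
The troubleshooting section below lists an incorrect API key or repository ID as a cause of missing traces. It can therefore help to fail fast before instrumenting. The minimal stdlib sketch below assumes the two variable names shown above. `require_env` is a hypothetical helper. A `.env` file is only visible to `os.environ` if something loads it first, for example the `python-dotenv` package.

```python
import os
from typing import Dict, Iterable


def require_env(names: Iterable[str]) -> Dict[str, str]:
    """Return the requested environment variables, raising if any are unset or empty."""
    values = {name: os.environ.get(name, "") for name in names}
    missing = [name for name, value in values.items() if not value]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return values


try:
    require_env(["MAXIM_API_KEY", "MAXIM_LOG_REPO_ID"])
except RuntimeError as exc:
    print(exc)
```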

#### 2. Import the required packages

```python
from crewai import Agent, Task, Crew, Process
from maxim import Maxim
from maxim.logger.crewai import instrument_crewai
```

#### 3. Initialise Maxim with your API key

```python
# Initialize Maxim and its logger; keep the Maxim instance so you can call cleanup() later
maxim = Maxim()
logger = maxim.logger()

# Instrument CrewAI with just one line
instrument_crewai(logger)
```

#### 4. Create and run your CrewAI application as usual

```python
# Create your agent
researcher = Agent(
    role='Senior Research Analyst',
    goal='Uncover cutting-edge developments in AI',
    backstory="You are an expert researcher at a tech think tank...",
    verbose=True,
    # llm=llm,  # optionally pass your own LLM instance
)

# Define the task
research_task = Task(
    description="Research the latest AI advancements...",
    expected_output="A concise summary of the latest AI advancements",
    agent=researcher
)

# Configure and run the crew
crew = Crew(
    agents=[researcher],
    tasks=[research_task],
    verbose=True
)

try:
    result = crew.kickoff()
finally:
    maxim.cleanup()  # Ensure cleanup happens even if errors occur
```

That's it! All your CrewAI agent interactions will now be logged and available in your Maxim dashboard.

See this Google Colab [Notebook](https://colab.research.google.com/drive/1ZKIZWsmgQQ46n8TH9zLsT1negKkJA6K8?usp=sharing) for a quick reference.

## Viewing Your Traces

After running your CrewAI application:

1. Log in to your [Maxim Dashboard](https://getmaxim.ai/dashboard)
2. Navigate to your repository
3. View detailed agent traces, including:
   - Agent conversations
   - Tool usage patterns
   - Performance metrics
   - Cost analytics

## Troubleshooting

### Common Issues

- **No traces appearing**: Ensure your API key and repository ID are correct
- Ensure you've **called `instrument_crewai()`** ***before*** running your crew. This initializes logging hooks correctly.
- Set `debug=True` in your `instrument_crewai()` call to surface any internal errors:

```python
instrument_crewai(logger, debug=True)
```

- Configure your agents with `verbose=True` to capture detailed logs:

```python
agent = Agent(..., verbose=True)
```

- Double-check that `instrument_crewai()` is called **before** creating or executing agents. This might be obvious, but it's a common oversight.

### Support

If you encounter any issues:

- Check the [Maxim Documentation](https://getmaxim.ai/docs)
- Visit Maxim on [GitHub](https://github.com/maximhq)

@@ -1,64 +0,0 @@
"""Example: CrewAI A2A Integration

This example demonstrates how to expose a CrewAI crew as an A2A (Agent-to-Agent)
protocol server for remote interoperability.

Requirements:
    pip install crewai[a2a]
"""

from crewai import Agent, Crew, Task
from crewai.a2a import CrewAgentExecutor, start_a2a_server


def main():
    """Create and start an A2A server with a CrewAI crew."""

    researcher = Agent(
        role="Research Analyst",
        goal="Provide comprehensive research and analysis on any topic",
        backstory=(
            "You are an experienced research analyst with expertise in "
            "gathering, analyzing, and synthesizing information from various sources."
        ),
        verbose=True
    )

    research_task = Task(
        description=(
            "Research and analyze the topic: {query}\n"
            "Provide a comprehensive overview including:\n"
            "- Key concepts and definitions\n"
            "- Current trends and developments\n"
            "- Important considerations\n"
            "- Relevant examples or case studies"
        ),
        agent=researcher,
        expected_output="A detailed research report with analysis and insights"
    )

    research_crew = Crew(
        agents=[researcher],
        tasks=[research_task],
        verbose=True
    )

    executor = CrewAgentExecutor(
        crew=research_crew,
        supported_content_types=['text', 'text/plain', 'application/json']
    )

    print("Starting A2A server with CrewAI research crew...")
    print("Server will be available at http://localhost:10001")
    print("Use the A2A CLI or SDK to interact with the crew remotely")

    start_a2a_server(
        executor,
        host="0.0.0.0",
        port=10001,
        transport="starlette"
    )


if __name__ == "__main__":
    main()

@@ -11,7 +11,7 @@ dependencies = [
    # Core Dependencies
    "pydantic>=2.4.2",
    "openai>=1.13.3",
    "litellm==1.68.0",
    "litellm==1.72.0",
    "instructor>=1.3.3",
    # Text Processing
    "pdfplumber>=0.11.4",
@@ -65,8 +65,8 @@ mem0 = ["mem0ai>=0.1.94"]
docling = [
    "docling>=2.12.0",
]
a2a = [
    "a2a-sdk>=0.0.1",
aisuite = [
    "aisuite>=0.1.10",
]

[tool.uv]

@@ -32,13 +32,3 @@ __all__ = [
    "TaskOutput",
    "LLMGuardrail",
]

try:
    from crewai.a2a import (  # noqa: F401
        CrewAgentExecutor,
        start_a2a_server,
        create_a2a_app
    )
    __all__.extend(["CrewAgentExecutor", "start_a2a_server", "create_a2a_app"])
except ImportError:
    pass

@@ -1,62 +0,0 @@
"""A2A (Agent-to-Agent) protocol integration for CrewAI.

This module provides integration with the A2A protocol to enable remote agent
interoperability. It allows CrewAI crews to be exposed as A2A-compatible agents
that can communicate with other agents following the A2A protocol standard.

The integration is optional and requires the 'a2a' extra dependency:
    pip install crewai[a2a]

Example:
    from crewai import Agent, Crew, Task
    from crewai.a2a import CrewAgentExecutor, start_a2a_server

    agent = Agent(role="Assistant", goal="Help users", backstory="Helpful AI")
    task = Task(description="Help with {query}", agent=agent)
    crew = Crew(agents=[agent], tasks=[task])

    executor = CrewAgentExecutor(crew)
    start_a2a_server(executor, host="localhost", port=8080)
"""

try:
    from .crew_agent_executor import CrewAgentExecutor
    from .server import start_a2a_server, create_a2a_app
    from .server_config import ServerConfig
    from .task_info import TaskInfo
    from .exceptions import A2AServerError, TransportError, ExecutionError

    __all__ = [
        "CrewAgentExecutor",
        "start_a2a_server",
        "create_a2a_app",
        "ServerConfig",
        "TaskInfo",
        "A2AServerError",
        "TransportError",
        "ExecutionError"
    ]
except ImportError:
    import warnings
    warnings.warn(
        "A2A integration requires the 'a2a' extra dependency. "
        "Install with: pip install crewai[a2a]",
        ImportWarning
    )

    def _missing_dependency(*args, **kwargs):
        raise ImportError(
            "A2A integration requires the 'a2a' extra dependency. "
            "Install with: pip install crewai[a2a]"
        )

    CrewAgentExecutor = _missing_dependency  # type: ignore
    start_a2a_server = _missing_dependency  # type: ignore
    create_a2a_app = _missing_dependency  # type: ignore
    ServerConfig = _missing_dependency  # type: ignore
    TaskInfo = _missing_dependency  # type: ignore
    A2AServerError = _missing_dependency  # type: ignore
    TransportError = _missing_dependency  # type: ignore
    ExecutionError = _missing_dependency  # type: ignore

    __all__ = []
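The module above keeps `import crewai.a2a` working when the optional SDK is absent: it emits a warning, then binds every public name to a stub that raises `ImportError` only when called. A minimal stdlib sketch of the same pattern, assuming a hypothetical `load_optional` helper that resolves names from a module given as a string:

```python
import importlib
import warnings
from typing import Any, Callable

_INSTALL_HINT = "Install with: pip install crewai[a2a]"


def _make_missing(name: str) -> Callable[..., Any]:
    """Return a stub that raises ImportError only when it is actually used."""
    def _missing(*args: Any, **kwargs: Any) -> Any:
        raise ImportError(f"{name} requires the 'a2a' extra dependency. {_INSTALL_HINT}")
    return _missing


def load_optional(module_name: str, names: list[str]) -> dict[str, Any]:
    """Hypothetical helper: import `names` from `module_name`, else return stubs."""
    try:
        module = importlib.import_module(module_name)
        return {n: getattr(module, n) for n in names}
    except ImportError:  # ModuleNotFoundError is a subclass
        warnings.warn(f"{module_name} is not installed. {_INSTALL_HINT}", ImportWarning)
        return {n: _make_missing(n) for n in names}
```

Judging from the code shown, two consequences follow. `ImportWarning` is ignored by Python's default warning filters, so most users will not see it unless warnings are enabled explicitly (for example `python -W default::ImportWarning`). And because the fallback swallows the import failure, the outer `try: from crewai.a2a import ... except ImportError` in the package-level `__all__` hunk never reaches its `except` branch; the stubs are what get exported.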

@@ -1,255 +0,0 @@
"""CrewAI Agent Executor for A2A Protocol Integration.

This module implements the A2A AgentExecutor interface to enable CrewAI crews
to participate in the Agent-to-Agent protocol for remote interoperability.
"""

import asyncio
import json
import logging
from typing import Any, Dict, Optional

from crewai import Crew
from crewai.crew import CrewOutput

from .task_info import TaskInfo

try:
    from a2a.server.agent_execution.agent_executor import AgentExecutor
    from a2a.server.agent_execution.context import RequestContext
    from a2a.server.events.event_queue import EventQueue
    from a2a.types import (
        InvalidParamsError,
        Part,
        Task,
        TextPart,
        UnsupportedOperationError,
    )
    from a2a.utils import completed_task, new_artifact
    from a2a.utils.errors import ServerError
except ImportError:
    raise ImportError(
        "A2A integration requires the 'a2a' extra dependency. "
        "Install with: pip install crewai[a2a]"
    )

logger = logging.getLogger(__name__)


class CrewAgentExecutor(AgentExecutor):
    """A2A Agent Executor that wraps CrewAI crews for remote interoperability.

    This class implements the A2A AgentExecutor interface to enable CrewAI crews
    to be exposed as remotely interoperable agents following the A2A protocol.

    Args:
        crew: The CrewAI crew to expose as an A2A agent
        supported_content_types: List of supported content types for input

    Example:
        from crewai import Agent, Crew, Task
        from crewai.a2a import CrewAgentExecutor

        agent = Agent(role="Assistant", goal="Help users", backstory="Helpful AI")
        task = Task(description="Help with {query}", agent=agent)
        crew = Crew(agents=[agent], tasks=[task])

        executor = CrewAgentExecutor(crew)
    """

    def __init__(
        self,
        crew: Crew,
        supported_content_types: Optional[list[str]] = None
    ):
        """Initialize the CrewAgentExecutor.

        Args:
            crew: The CrewAI crew to wrap
            supported_content_types: List of supported content types
        """
        self.crew = crew
        self.supported_content_types = supported_content_types or [
            'text', 'text/plain'
        ]
        self._running_tasks: Dict[str, TaskInfo] = {}

    async def execute(
        self,
        context: RequestContext,
        event_queue: EventQueue,
    ) -> None:
        """Execute the crew with the given context and publish results to event queue.

        This method extracts the user input from the request context, executes
        the CrewAI crew, and publishes the results as A2A artifacts.

        Args:
            context: The A2A request context containing task details
            event_queue: Queue for publishing execution events and results

        Raises:
            ServerError: If validation fails or execution encounters an error
        """
        error = self._validate_request(context)
        if error:
            logger.error(f"Request validation failed: {error}")
            raise ServerError(error=InvalidParamsError())

        query = context.get_user_input()
        task_id = context.task_id
        context_id = context.context_id

        if not task_id or not context_id:
            raise ServerError(error=InvalidParamsError())

        logger.info(f"Executing crew for task {task_id} with query: {query}")

        try:
            inputs = {"query": query}

            execution_task = asyncio.create_task(
                self._execute_crew_async(inputs)
            )
            from datetime import datetime
            self._running_tasks[task_id] = TaskInfo(
                task=execution_task,
                started_at=datetime.now(),
                status="running"
            )

            result = await execution_task

            self._running_tasks.pop(task_id, None)

            logger.info(f"Crew execution completed for task {task_id}")

            parts = self._convert_output_to_parts(result)

            messages = [context.message] if context.message else []
            event_queue.enqueue_event(
                completed_task(
                    task_id,
                    context_id,
                    [new_artifact(parts, f"crew_output_{task_id}")],
                    messages,
                )
            )

        except asyncio.CancelledError:
            logger.info(f"Task {task_id} was cancelled")
            self._running_tasks.pop(task_id, None)
            raise
        except Exception as e:
            logger.error(f"Error executing crew for task {task_id}: {e}")
            self._running_tasks.pop(task_id, None)

            error_parts = [
                Part(root=TextPart(text=f"Error executing crew: {str(e)}"))
            ]

            messages = [context.message] if context.message else []
            event_queue.enqueue_event(
                completed_task(
                    task_id,
                    context_id,
                    [new_artifact(error_parts, f"error_{task_id}")],
                    messages,
                )
            )

            raise ServerError(
                error=InvalidParamsError()
            ) from e

    async def cancel(
        self,
        request: RequestContext,
        event_queue: EventQueue
    ) -> Task | None:
        """Cancel a running crew execution.

        Args:
            request: The A2A request context for the task to cancel
            event_queue: Event queue for publishing cancellation events

        Returns:
            None (cancellation is handled internally)

        Raises:
            ServerError: If the task cannot be cancelled
        """
        task_id = request.task_id

        if task_id in self._running_tasks:
            task_info = self._running_tasks[task_id]
            task_info.task.cancel()
            task_info.update_status("cancelled")

            try:
                await task_info.task
            except asyncio.CancelledError:
                logger.info(f"Successfully cancelled task {task_id}")
                pass

            self._running_tasks.pop(task_id, None)
            return None
        else:
            logger.warning(f"Task {task_id} not found for cancellation")
            raise ServerError(error=UnsupportedOperationError())

    async def _execute_crew_async(self, inputs: Dict[str, Any]) -> CrewOutput:
        """Execute the crew asynchronously.

        Args:
            inputs: Input parameters for the crew

        Returns:
            The crew execution output
        """
        loop = asyncio.get_event_loop()
        return await loop.run_in_executor(None, self.crew.kickoff, inputs)

    def _convert_output_to_parts(self, result: CrewOutput) -> list[Part]:
        """Convert CrewAI output to A2A Parts.

        Args:
            result: The crew execution result

        Returns:
            List of A2A Parts representing the output
        """
        parts = []

        if hasattr(result, 'raw') and result.raw:
            parts.append(Part(root=TextPart(text=str(result.raw))))
        elif result:
            parts.append(Part(root=TextPart(text=str(result))))

        if hasattr(result, 'json_dict') and result.json_dict:
            json_output = json.dumps(result.json_dict, indent=2)
            parts.append(Part(root=TextPart(text=json_output)))

        if not parts:
            parts.append(Part(root=TextPart(text="Crew execution completed successfully")))

        return parts

    def _validate_request(self, context: RequestContext) -> Optional[str]:
        """Validate the incoming request context.

        Args:
            context: The A2A request context to validate

        Returns:
            Error message if validation fails, None if valid
        """
        try:
            user_input = context.get_user_input()
            if not user_input or not user_input.strip():
                return "Empty or missing user input"

            return None

        except Exception as e:
            return f"Failed to extract user input: {e}"
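`CrewAgentExecutor` keeps the event loop responsive by running the synchronous `crew.kickoff` in the default thread pool via `loop.run_in_executor`, tracks the wrapping `asyncio.Task` per A2A task id, and cancels that task on request. One caveat: cancelling the asyncio task only abandons the `await`; a thread that is already running cannot be interrupted, so the crew keeps working in the background. The sketch below reproduces the pattern with a hypothetical `blocking_kickoff` stand-in and adds a `threading.Event` as an assumed cooperative stop signal (nothing shown here suggests `Crew.kickoff` accepts one). It also uses `asyncio.get_running_loop()`, which the asyncio documentation prefers over `get_event_loop()` inside coroutines.

```python
import asyncio
import threading
import time


def blocking_kickoff(inputs: dict[str, str], stop: threading.Event) -> str:
    """Hypothetical stand-in for a synchronous, slow Crew.kickoff() call."""
    for _ in range(20):
        if stop.is_set():  # cooperative exit: Python threads cannot be killed
            return "stopped"
        time.sleep(0.01)
    return f"report on {inputs['query']}"


class MiniExecutor:
    """Tracks one asyncio task (plus its stop flag) per A2A task id."""

    def __init__(self) -> None:
        self._running: dict[str, tuple[asyncio.Task, threading.Event]] = {}

    async def _run(self, inputs: dict[str, str], stop: threading.Event) -> str:
        loop = asyncio.get_running_loop()
        # Offload the blocking call so the loop keeps serving other requests.
        return await loop.run_in_executor(None, blocking_kickoff, inputs, stop)

    async def execute(self, task_id: str, query: str) -> str:
        stop = threading.Event()
        task = asyncio.create_task(self._run({"query": query}, stop))
        self._running[task_id] = (task, stop)
        try:
            return await task
        finally:
            # Runs on success, failure, and cancellation alike.
            self._running.pop(task_id, None)

    async def cancel(self, task_id: str) -> bool:
        entry = self._running.get(task_id)
        if entry is None:
            return False
        task, stop = entry
        stop.set()     # ask the worker thread to wind down
        task.cancel()  # abandons the await; does not stop the thread by itself
        try:
            await task
        except asyncio.CancelledError:
            pass
        return True


async def demo() -> tuple[str, bool, int]:
    ex = MiniExecutor()
    finished = await ex.execute("t1", "vector databases")
    job = asyncio.create_task(ex.execute("t2", "slow topic"))
    await asyncio.sleep(0.05)  # let t2 start running in the thread pool
    cancelled = await ex.cancel("t2")
    try:
        await job
    except asyncio.CancelledError:
        pass
    return finished, cancelled, len(ex._running)


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Using `try/finally` for the bookkeeping removes the need to repeat `pop(task_id, None)` in every branch, as the original does in its success, cancellation, and error paths.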

@@ -1,16 +0,0 @@
"""Custom exceptions for A2A integration."""


class A2AServerError(Exception):
    """Base exception for A2A server errors."""
    pass


class TransportError(A2AServerError):
    """Error related to transport configuration."""
    pass


class ExecutionError(A2AServerError):
    """Error during crew execution."""
    pass
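The three exceptions form a small hierarchy, so callers can catch `A2AServerError` to handle any integration failure, or a subclass for a specific one. A short sketch of how `except` ordering interacts with that hierarchy; `describe` is a hypothetical function, and the classes are local copies (a docstring alone is a valid class body, so the `pass` statements above are optional):

```python
class A2AServerError(Exception):
    """Base exception for A2A server errors."""


class TransportError(A2AServerError):
    """Error related to transport configuration."""


class ExecutionError(A2AServerError):
    """Error during crew execution."""


def describe(exc: Exception) -> str:
    """Classify an exception; the most specific handler must come first."""
    try:
        raise exc
    except TransportError:
        return "transport"
    except A2AServerError:  # catches ExecutionError and any future subclass
        return "a2a"
    except Exception:
        return "other"
```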

@@ -1,151 +0,0 @@
"""A2A Server utilities for CrewAI integration.

This module provides convenience functions for starting A2A servers with CrewAI
crews, supporting multiple transport protocols and configurations.
"""

import logging
from typing import Optional

from .exceptions import TransportError
from .server_config import ServerConfig

try:
    from a2a.server.agent_execution.agent_executor import AgentExecutor
    from a2a.server.apps import A2AStarletteApplication
    from a2a.server.request_handlers.default_request_handler import DefaultRequestHandler
    from a2a.server.tasks import InMemoryTaskStore
    from a2a.types import AgentCard, AgentCapabilities, AgentSkill
except ImportError:
    raise ImportError(
        "A2A integration requires the 'a2a' extra dependency. "
        "Install with: pip install crewai[a2a]"
    )

logger = logging.getLogger(__name__)


def start_a2a_server(
    agent_executor: AgentExecutor,
    host: str = "localhost",
    port: int = 10001,
    transport: str = "starlette",
    config: Optional[ServerConfig] = None,
    **kwargs
) -> None:
    """Start an A2A server with the given agent executor.

    This is a convenience function that creates and starts an A2A server
    with the specified configuration.

    Args:
        agent_executor: The A2A agent executor to serve
        host: Host address to bind the server to
        port: Port number to bind the server to
        transport: Transport protocol to use ("starlette" or "fastapi")
        config: Optional ServerConfig object to override individual parameters
        **kwargs: Additional arguments passed to the server

    Example:
        from crewai import Agent, Crew, Task
        from crewai.a2a import CrewAgentExecutor, start_a2a_server

        agent = Agent(role="Assistant", goal="Help users", backstory="Helpful AI")
        task = Task(description="Help with {query}", agent=agent)
        crew = Crew(agents=[agent], tasks=[task])

        executor = CrewAgentExecutor(crew)
        start_a2a_server(executor, host="0.0.0.0", port=8080)
    """
    if config:
        host = config.host
        port = config.port
        transport = config.transport

    app = create_a2a_app(
        agent_executor,
        transport=transport,
        agent_name=config.agent_name if config else None,
        agent_description=config.agent_description if config else None,
        **kwargs
    )

    logger.info(f"Starting A2A server on {host}:{port} using {transport} transport")

    try:
        import uvicorn
        uvicorn.run(app, host=host, port=port)
    except ImportError:
        raise ImportError("uvicorn is required to run the A2A server. Install with: pip install uvicorn")


def create_a2a_app(
    agent_executor: AgentExecutor,
    transport: str = "starlette",
    agent_name: Optional[str] = None,
    agent_description: Optional[str] = None,
    **kwargs
):
    """Create an A2A application with the given agent executor.

    This function creates an A2A server application that can be run
    with any ASGI server.

    Args:
        agent_executor: The A2A agent executor to serve
        transport: Transport protocol to use ("starlette" or "fastapi")
        agent_name: Optional name for the agent
        agent_description: Optional description for the agent
        **kwargs: Additional arguments passed to the transport

    Returns:
        ASGI application ready to be served

    Example:
        from crewai.a2a import CrewAgentExecutor, create_a2a_app

        executor = CrewAgentExecutor(crew)
        app = create_a2a_app(
            executor,
            agent_name="My Crew Agent",
            agent_description="A helpful CrewAI agent"
        )

        import uvicorn
        uvicorn.run(app, host="0.0.0.0", port=8080)
    """
    agent_card = AgentCard(
        name=agent_name or "CrewAI Agent",
        description=agent_description or "A CrewAI agent exposed via A2A protocol",
        version="1.0.0",
        capabilities=AgentCapabilities(
            streaming=True,
            pushNotifications=False
        ),
        defaultInputModes=["text"],
        defaultOutputModes=["text"],
        skills=[
            AgentSkill(
                id="crew_execution",
                name="Crew Execution",
                description="Execute CrewAI crew tasks with multiple agents",
                examples=["Process user queries", "Coordinate multi-agent workflows"],
                tags=["crewai", "multi-agent", "workflow"]
            )
        ],
        url="https://github.com/crewAIInc/crewAI"
    )

    task_store = InMemoryTaskStore()
    request_handler = DefaultRequestHandler(agent_executor, task_store)

    if transport.lower() == "fastapi":
        raise TransportError("FastAPI transport is not available in the current A2A SDK version")
    else:
        app_instance = A2AStarletteApplication(
            agent_card=agent_card,
            http_handler=request_handler,
            **kwargs
        )

    return app_instance.build()
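In `create_a2a_app`, only the string `"fastapi"` (case-insensitive) is rejected; any other value, including a typo such as `"starlete"`, silently falls through to the Starlette application. A minimal sketch of stricter validation, assuming a hypothetical `Transport` enum and `resolve_transport` helper, with a local stand-in for `TransportError`:

```python
from enum import Enum


class TransportError(Exception):
    """Local stand-in for the TransportError defined in the exceptions module."""


class Transport(str, Enum):
    STARLETTE = "starlette"
    FASTAPI = "fastapi"


# Transports this sketch assumes are actually implemented.
SUPPORTED = {Transport.STARLETTE}


def resolve_transport(name: str) -> Transport:
    """Normalize a transport name and reject unknown or unsupported values."""
    try:
        transport = Transport(name.strip().lower())
    except ValueError:
        valid = ", ".join(t.value for t in Transport)
        raise TransportError(f"Unknown transport {name!r}; expected one of: {valid}") from None
    if transport not in SUPPORTED:
        raise TransportError(f"{transport.value} transport is not available")
    return transport
```

Distinguishing "unknown" from "known but unsupported" gives the caller a clearer error than a silent fallback.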

@@ -1,25 +0,0 @@
"""Server configuration for A2A integration."""

from dataclasses import dataclass
from typing import Optional


@dataclass
class ServerConfig:
    """Configuration for A2A server.

    This class encapsulates server settings to improve readability
    and flexibility for server setups.

    Attributes:
        host: Host address to bind the server to
        port: Port number to bind the server to
        transport: Transport protocol to use ("starlette" or "fastapi")
        agent_name: Optional name for the agent
        agent_description: Optional description for the agent
    """
    host: str = "localhost"
    port: int = 10001
    transport: str = "starlette"
    agent_name: Optional[str] = None
    agent_description: Optional[str] = None
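`start_a2a_server` applies a `ServerConfig` wholesale: when one is passed, its `host`, `port`, and `transport` replace the keyword arguments, so `start_a2a_server(executor, port=9000, config=ServerConfig())` would still bind to port 10001. The sketch below mirrors that precedence rule with a hypothetical `effective_settings` function (the dataclass is a local copy of the fields above) and shows `dataclasses.replace` as one way to derive a variant config instead of mixing arguments:

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass
class ServerConfig:
    """Local copy of the ServerConfig fields shown above."""
    host: str = "localhost"
    port: int = 10001
    transport: str = "starlette"
    agent_name: Optional[str] = None
    agent_description: Optional[str] = None


def effective_settings(
    host: str = "localhost",
    port: int = 10001,
    transport: str = "starlette",
    config: Optional[ServerConfig] = None,
) -> tuple[str, int, str]:
    """Mirror start_a2a_server(): a config object overrides keyword arguments."""
    if config:
        return config.host, config.port, config.transport
    return host, port, transport


base = ServerConfig(agent_name="Research Crew")
public = replace(base, host="0.0.0.0", port=9000)  # copy with two fields changed
```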

@@ -1,47 +0,0 @@
"""Task information tracking for A2A integration."""

from dataclasses import dataclass
from datetime import datetime
from typing import Optional
import asyncio


@dataclass
class TaskInfo:
    """Information about a running task in the A2A executor.

    This class tracks the lifecycle and status of tasks being executed
    by the CrewAgentExecutor, providing better task management capabilities.

    Attributes:
        task: The asyncio task being executed
        started_at: When the task was started
        status: Current status of the task ("running", "completed", "cancelled", "failed")
    """
    task: asyncio.Task
    started_at: datetime
    status: str = "running"

    def update_status(self, new_status: str) -> None:
        """Update the task status.

        Args:
            new_status: The new status to set
        """
        self.status = new_status

    @property
    def is_running(self) -> bool:
        """Check if the task is currently running."""
        return self.status == "running" and not self.task.done()

    @property
    def duration(self) -> Optional[float]:
        """Get the duration of the task in seconds.

        Returns:
            Duration in seconds if task is completed, None if still running
        """
        if self.task.done():
            return (datetime.now() - self.started_at).total_seconds()
        return None
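`TaskInfo.duration` computes `datetime.now() - started_at` whenever the task is done, so the reported value keeps growing after completion instead of freezing at the finish time. A minimal sketch of one alternative, assuming a hypothetical `TimedTaskInfo` class that records `finished_at` through `asyncio.Task.add_done_callback`:

```python
import asyncio
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional


@dataclass
class TimedTaskInfo:
    """Hypothetical TaskInfo variant whose duration freezes at completion."""
    task: asyncio.Task
    started_at: datetime = field(default_factory=datetime.now)
    finished_at: Optional[datetime] = None

    def __post_init__(self) -> None:
        # Fires once when the task succeeds, fails, or is cancelled.
        self.task.add_done_callback(self._mark_finished)

    def _mark_finished(self, _task: asyncio.Task) -> None:
        self.finished_at = datetime.now()

    @property
    def duration(self) -> Optional[float]:
        """Seconds from start to finish, or None while the task is running."""
        if self.finished_at is None:
            return None
        return (self.finished_at - self.started_at).total_seconds()


async def demo() -> tuple[Optional[float], Optional[float], Optional[float]]:
    info = TimedTaskInfo(asyncio.create_task(asyncio.sleep(0.05)))
    before = info.duration
    await info.task
    await asyncio.sleep(0)  # give the done callback a chance to run
    first = info.duration
    await asyncio.sleep(0.05)
    second = info.duration  # unchanged: finished_at is fixed
    return before, first, second


if __name__ == "__main__":
    print(asyncio.run(demo()))
```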

@@ -1,6 +1,7 @@
from rich.console import Console
from rich.table import Table

from requests import HTTPError
from crewai.cli.command import BaseCommand, PlusAPIMixin
from crewai.cli.config import Settings

@@ -16,7 +17,7 @@ class OrganizationCommand(BaseCommand, PlusAPIMixin):
            response = self.plus_api_client.get_organizations()
            response.raise_for_status()
            orgs = response.json()


            if not orgs:
                console.print("You don't belong to any organizations yet.", style="yellow")
                return
@@ -26,8 +27,14 @@ class OrganizationCommand(BaseCommand, PlusAPIMixin):
            table.add_column("ID", style="green")
            for org in orgs:
                table.add_row(org["name"], org["uuid"])


            console.print(table)
        except HTTPError as e:
            if e.response.status_code == 401:
                console.print("You are not logged in to any organization. Use 'crewai login' to login.", style="bold red")
                return
            console.print(f"Failed to retrieve organization list: {str(e)}", style="bold red")
            raise SystemExit(1)
        except Exception as e:
            console.print(f"Failed to retrieve organization list: {str(e)}", style="bold red")
            raise SystemExit(1)
@@ -37,18 +44,24 @@ class OrganizationCommand(BaseCommand, PlusAPIMixin):
            response = self.plus_api_client.get_organizations()
            response.raise_for_status()
            orgs = response.json()


            org = next((o for o in orgs if o["uuid"] == org_id), None)
            if not org:
                console.print(f"Organization with id '{org_id}' not found.", style="bold red")
                return


            settings = Settings()
            settings.org_name = org["name"]
            settings.org_uuid = org["uuid"]
            settings.dump()


            console.print(f"Successfully switched to {org['name']} ({org['uuid']})", style="bold green")
        except HTTPError as e:
            if e.response.status_code == 401:
                console.print("You are not logged in to any organization. Use 'crewai login' to login.", style="bold red")
                return
            console.print(f"Failed to retrieve organization list: {str(e)}", style="bold red")
            raise SystemExit(1)
        except Exception as e:
            console.print(f"Failed to switch organization: {str(e)}", style="bold red")
            raise SystemExit(1)

@@ -91,6 +91,7 @@ class ToolCommand(BaseCommand, PlusAPIMixin):
        console.print(
            f"[green]Found these tools to publish: {', '.join([e['name'] for e in available_exports])}[/green]"
        )
        self._print_current_organization()

        with tempfile.TemporaryDirectory() as temp_build_dir:
            subprocess.run(
@@ -136,6 +137,7 @@ class ToolCommand(BaseCommand, PlusAPIMixin):
        )