Mirror of https://github.com/crewAIInc/crewAI.git (synced 2025-12-24 00:08:29 +00:00)

Compare commits: brandon/fi ... devin/1739

24 Commits

| SHA1 |
|---|
| 60a2b9842d |
| b1860cbb12 |
| 1b488b6da7 |
| d3b398ed52 |
| d52fd09602 |
| d6800d8957 |
| 2fd7506ed9 |
| 161084aff2 |
| b145cb3247 |
| 1adbcf697d |
| e51355200a |
| 47818f4f41 |
| 9b10fd47b0 |
| c408368267 |
| 90b3145e92 |
| fbd0e015d5 |
| 17e25fb842 |
| d6d98ee969 |
| e0600e3bb9 |
| a79d77dfd7 |
| 56ec9bc224 |
| 8eef02739a |
| 6f4ad532e6 |
| 74a1de8550 |

16

README.md


````diff
@@ -1,10 +1,18 @@
 <div align="center">

 # **CrewAI**

-🤖 **CrewAI**: Production-grade framework for orchestrating sophisticated AI agent systems. From simple automations to complex real-world applications, CrewAI provides precise control and deep customization. By fostering collaborative intelligence through flexible, production-ready architecture, CrewAI empowers agents to work together seamlessly, tackling complex business challenges with predictable, consistent results.
+**CrewAI**: Production-grade framework for orchestrating sophisticated AI agent systems. From simple automations to complex real-world applications, CrewAI provides precise control and deep customization. By fostering collaborative intelligence through flexible, production-ready architecture, CrewAI empowers agents to work together seamlessly, tackling complex business challenges with predictable, consistent results.

+**CrewAI Enterprise**
+Want to plan, build (+ no code), deploy, monitor and interare your agents: [CrewAI Enterprise](https://www.crewai.com/enterprise). Designed for complex, real-world applications, our enterprise solution offers:
+
+- **Seamless Integrations**
+- **Scalable & Secure Deployment**
+- **Actionable Insights**
+- **24/7 Support**
+
 <h3>
````

````diff
@@ -392,7 +400,7 @@ class AdvancedAnalysisFlow(Flow[MarketState]):
             goal="Gather and validate supporting market data",
             backstory="You excel at finding and correlating multiple data sources"
         )
-        
+
         analysis_task = Task(
             description="Analyze {sector} sector data for the past {timeframe}",
             expected_output="Detailed market analysis with confidence score",
````

````diff
@@ -403,7 +411,7 @@ class AdvancedAnalysisFlow(Flow[MarketState]):
             expected_output="Corroborating evidence and potential contradictions",
             agent=researcher
         )
-        
+
         # Demonstrate crew autonomy
         analysis_crew = Crew(
             agents=[analyst, researcher],
````

````diff
@@ -91,7 +91,7 @@ result = crew.kickoff(inputs={"question": "What city does John live in and how o
 ```

-Here's another example with the `CrewDoclingSource`. The CrewDoclingSource is actually quite versatile and can handle multiple file formats including TXT, PDF, DOCX, HTML, and more.
+Here's another example with the `CrewDoclingSource`. The CrewDoclingSource is actually quite versatile and can handle multiple file formats including MD, PDF, DOCX, HTML, and more.

 <Note>
 You need to install `docling` for the following example to work: `uv add docling`
````

````diff
@@ -152,10 +152,10 @@ Here are examples of how to use different types of knowledge sources:

 ### Text File Knowledge Source
 ```python
-from crewai.knowledge.source.crew_docling_source import CrewDoclingSource
+from crewai.knowledge.source.text_file_knowledge_source import TextFileKnowledgeSource

 # Create a text file knowledge source
-text_source = CrewDoclingSource(
+text_source = TextFileKnowledgeSource(
     file_paths=["document.txt", "another.txt"]
 )

````
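Taken together, the two knowledge-source hunks route plain `.txt` files to `TextFileKnowledgeSource` and the richer formats the docs name (MD, PDF, DOCX, HTML) to `CrewDoclingSource`. The helper below is hypothetical and only sketches that grouping. It returns class names as strings so it runs without CrewAI installed, and its extension sets are limited to the formats mentioned in the hunks.

```python
from collections import defaultdict
from pathlib import Path
from typing import Dict, Iterable, List

# Formats named in the docs hunks; CrewDoclingSource may accept more (assumption)
DOCLING_SUFFIXES = {".md", ".pdf", ".docx", ".html"}
TEXT_SUFFIXES = {".txt"}


def group_by_source(paths: Iterable[str]) -> Dict[str, List[str]]:
    """Group file paths by the knowledge-source class the docs suggest for them."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for path in paths:
        suffix = Path(path).suffix.lower()  # case-insensitive: ".PDF" -> ".pdf"
        if suffix in TEXT_SUFFIXES:
            groups["TextFileKnowledgeSource"].append(path)
        elif suffix in DOCLING_SUFFIXES:
            groups["CrewDoclingSource"].append(path)
        else:
            groups["unsupported"].append(path)
    return dict(groups)
```

Each list can then be passed as `file_paths=` to the matching class, as in the examples above.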

````diff
@@ -463,26 +463,32 @@ Learn how to get the most out of your LLM configuration:

 <Accordion title="Google">
 ```python Code
-# Option 1. Gemini accessed with an API key.
+# Option 1: Gemini accessed with an API key.
 # https://ai.google.dev/gemini-api/docs/api-key
 GEMINI_API_KEY=<your-api-key>

-# Option 2. Vertex AI IAM credentials for Gemini, Anthropic, and anything in the Model Garden.
+# Option 2: Vertex AI IAM credentials for Gemini, Anthropic, and Model Garden.
 # https://cloud.google.com/vertex-ai/generative-ai/docs/overview
 ```

-## GET CREDENTIALS
+Get credentials:
+```python Code
+import json
+
 file_path = 'path/to/vertex_ai_service_account.json'

 # Load the JSON file
 with open(file_path, 'r') as file:
     vertex_credentials = json.load(file)

-# Convert to JSON string
+# Convert the credentials to a JSON string
 vertex_credentials_json = json.dumps(vertex_credentials)
+```
+
+Example usage:
 ```python Code
 from crewai import LLM

 llm = LLM(
     model="gemini/gemini-1.5-pro-latest",
     temperature=0.7,
````
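The credentials step above reads a service-account file and serializes it with `json.dumps`. The sketch below does the same but first checks for a few fields that Google Cloud service-account key files normally contain. The helper name `load_vertex_credentials_json` and the `REQUIRED_FIELDS` list are assumptions, not part of CrewAI or any Google SDK.

```python
import json
from pathlib import Path

# Fields normally present in a Google Cloud service-account key file
# (assumption: adjust to the key file you actually use)
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def load_vertex_credentials_json(file_path: str) -> str:
    """Load a service-account JSON file and return it as a JSON string.

    Raises ValueError if any of REQUIRED_FIELDS is missing.
    """
    # Explicit UTF-8 so the result does not depend on the platform default encoding
    with Path(file_path).open("r", encoding="utf-8") as fh:
        credentials = json.load(fh)

    missing = [field for field in REQUIRED_FIELDS if field not in credentials]
    if missing:
        raise ValueError(f"service-account file is missing fields: {missing}")

    # Same single-string form the docs produce with json.dumps
    return json.dumps(credentials)
```

Usage follows the docs: `vertex_credentials_json = load_vertex_credentials_json("path/to/vertex_ai_service_account.json")`. A malformed key file then fails early, before it reaches the LLM configuration.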

````diff
@@ -58,41 +58,107 @@ my_crew = Crew(
 ### Example: Use Custom Memory Instances e.g FAISS as the VectorDB

 ```python Code
-from crewai import Crew, Agent, Task, Process
+from crewai import Crew, Process
+from crewai.memory import LongTermMemory, ShortTermMemory, EntityMemory
+from crewai.memory.storage import LTMSQLiteStorage, RAGStorage
+from typing import List, Optional

 # Assemble your crew with memory capabilities
-my_crew = Crew(
-    agents=[...],
-    tasks=[...],
-    process="Process.sequential",
-    memory=True,
-    long_term_memory=EnhanceLongTermMemory(
+my_crew: Crew = Crew(
+    agents = [...],
+    tasks = [...],
+    process = Process.sequential,
+    memory = True,
+    # Long-term memory for persistent storage across sessions
+    long_term_memory = LongTermMemory(
         storage=LTMSQLiteStorage(
-            db_path="/my_data_dir/my_crew1/long_term_memory_storage.db"
+            db_path="/my_crew1/long_term_memory_storage.db"
         )
     ),
-    short_term_memory=EnhanceShortTermMemory(
-        storage=CustomRAGStorage(
-            crew_name="my_crew",
-            storage_type="short_term",
-            data_dir="//my_data_dir",
-            model=embedder["model"],
-            dimension=embedder["dimension"],
+    # Short-term memory for current context using RAG
+    short_term_memory = ShortTermMemory(
+        storage = RAGStorage(
+            embedder_config={
+                "provider": "openai",
+                "config": {
+                    "model": 'text-embedding-3-small'
+                }
+            },
+            type="short_term",
+            path="/my_crew1/"
+        )
         ),
     ),
-    entity_memory=EnhanceEntityMemory(
-        storage=CustomRAGStorage(
-            crew_name="my_crew",
-            storage_type="entities",
-            data_dir="//my_data_dir",
-            model=embedder["model"],
-            dimension=embedder["dimension"],
-        ),
+    # Entity memory for tracking key information about entities
+    entity_memory = EntityMemory(
+        storage=RAGStorage(
+            embedder_config={
+                "provider": "openai",
+                "config": {
+                    "model": 'text-embedding-3-small'
+                }
+            },
+            type="short_term",
+            path="/my_crew1/"
+        )
     ),
     verbose=True,
 )
 ```

+## Security Considerations
+
+When configuring memory storage:
+- Use environment variables for storage paths (e.g., `CREWAI_STORAGE_DIR`)
+- Never hardcode sensitive information like database credentials
+- Consider access permissions for storage directories
+- Use relative paths when possible to maintain portability
+
+Example using environment variables:
+```python
+import os
+from crewai import Crew
+from crewai.memory import LongTermMemory
+from crewai.memory.storage import LTMSQLiteStorage
+
+# Configure storage path using environment variable
+storage_path = os.getenv("CREWAI_STORAGE_DIR", "./storage")
+crew = Crew(
+    memory=True,
+    long_term_memory=LongTermMemory(
+        storage=LTMSQLiteStorage(
+            db_path="{storage_path}/memory.db".format(storage_path=storage_path)
+        )
+    )
+)
+```
+
+## Configuration Examples
+
+### Basic Memory Configuration
+```python
+from crewai import Crew
+from crewai.memory import LongTermMemory
+
+# Simple memory configuration
+crew = Crew(memory=True) # Uses default storage locations
+```
+
+### Custom Storage Configuration
+```python
+from crewai import Crew
+from crewai.memory import LongTermMemory
+from crewai.memory.storage import LTMSQLiteStorage
+
+# Configure custom storage paths
+crew = Crew(
+    memory=True,
+    long_term_memory=LongTermMemory(
+        storage=LTMSQLiteStorage(db_path="./memory.db")
+    )
+)
+```
+
 ## Integrating Mem0 for Enhanced User Memory

 [Mem0](https://mem0.ai/) is a self-improving memory layer for LLM applications, enabling personalized AI experiences.
````
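The Security Considerations section added here recommends taking storage paths from `CREWAI_STORAGE_DIR`, and its example builds the database path with `str.format`. The sketch below does the same with `pathlib` and also creates the directory. The helper `resolve_memory_db_path` is hypothetical; its result would be passed as `db_path` to `LTMSQLiteStorage`, as in the hunk.

```python
import os
from pathlib import Path


def resolve_memory_db_path(filename: str = "memory.db",
                           env_var: str = "CREWAI_STORAGE_DIR",
                           default_dir: str = "./storage") -> str:
    """Return an absolute path for a memory database file.

    The directory comes from `env_var` if it is set, otherwise `default_dir`.
    """
    storage_dir = Path(os.getenv(env_var, default_dir)).expanduser().resolve()
    # On POSIX the final directory is created with mode 0o700 (further masked by
    # the umask); missing parents get default permissions, and an existing
    # directory is left unchanged.
    storage_dir.mkdir(mode=0o700, parents=True, exist_ok=True)
    # pathlib joins with the correct separator on every platform
    return str(storage_dir / filename)
```

Resolving the path once at startup keeps the crew definition free of string formatting, and `expanduser()` lets the variable hold values like `~/crew_data`.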

````diff
@@ -216,6 +282,19 @@ my_crew = Crew(

 ### Using Google AI embeddings

+#### Prerequisites
+Before using Google AI embeddings, ensure you have:
+- Access to the Gemini API
+- The necessary API keys and permissions
+
+You will need to update your *pyproject.toml* dependencies:
+```YAML
+dependencies = [
+    "google-generativeai>=0.8.4", #main version in January/2025 - crewai v.0.100.0 and crewai-tools 0.33.0
+    "crewai[tools]>=0.100.0,<1.0.0"
+]
+```
+
 ```python Code
 from crewai import Crew, Agent, Task, Process

````

````diff
@@ -368,6 +447,38 @@ my_crew = Crew(
 )
 ```

+### Using Amazon Bedrock embeddings
+
+```python Code
+# Note: Ensure you have installed `boto3` for Bedrock embeddings to work.
+
+import os
+import boto3
+from crewai import Crew, Agent, Task, Process
+
+boto3_session = boto3.Session(
+    region_name=os.environ.get("AWS_REGION_NAME"),
+    aws_access_key_id=os.environ.get("AWS_ACCESS_KEY_ID"),
+    aws_secret_access_key=os.environ.get("AWS_SECRET_ACCESS_KEY")
+)
+
+my_crew = Crew(
+    agents=[...],
+    tasks=[...],
+    process=Process.sequential,
+    memory=True,
+    embedder={
+        "provider": "bedrock",
+        "config":{
+            "session": boto3_session,
+            "model": "amazon.titan-embed-text-v2:0",
+            "vector_dimension": 1024
+        }
+    }
+    verbose=True
+)
+```
+
 ### Adding Custom Embedding Function

 ```python Code
````
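As committed, the Bedrock example has no comma after the closing `}` of `embedder`, so Python rejects it before `verbose=True`. One way to keep the `Crew(...)` call short is to build the `embedder` mapping separately. In the sketch below, the helper name is hypothetical and the keys are copied from the example. The dimension check assumes Titan Text Embeddings V2 supports 256, 512, and 1024 output sizes, so confirm that against current AWS documentation. `session` is optional only so the sketch runs without AWS credentials.

```python
from typing import Any, Dict, Optional

# Output sizes assumed for Titan Text Embeddings V2 (verify against AWS docs)
TITAN_V2_DIMENSIONS = {256, 512, 1024}


def bedrock_embedder(session: Optional[Any] = None,
                     model: str = "amazon.titan-embed-text-v2:0",
                     vector_dimension: int = 1024) -> Dict[str, Any]:
    """Build the `embedder` mapping used in the Bedrock example.

    `session` would normally be a boto3.Session.
    """
    if (model.startswith("amazon.titan-embed-text-v2")
            and vector_dimension not in TITAN_V2_DIMENSIONS):
        raise ValueError(f"unsupported vector_dimension {vector_dimension} for {model}")
    return {
        "provider": "bedrock",
        "config": {
            "session": session,
            "model": model,
            "vector_dimension": vector_dimension,
        },
    }
```

With it, the crew call becomes `Crew(agents=[...], tasks=[...], process=Process.sequential, memory=True, embedder=bedrock_embedder(boto3_session), verbose=True)`.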

````diff
@@ -268,7 +268,7 @@ analysis_task = Task(

 Task guardrails provide a way to validate and transform task outputs before they
 are passed to the next task. This feature helps ensure data quality and provides
-efeedback to agents when their output doesn't meet specific criteria.
+feedback to agents when their output doesn't meet specific criteria.

 ### Using Task Guardrails

````
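Task guardrails, as described in this hunk, validate or transform an output before the next task sees it and send feedback when it fails. The sketch below is a validation function written against the contract assumed from CrewAI's guardrail docs of this period: a callable that returns `(True, value)` on success or `(False, feedback)` on failure. To keep it self-contained it takes the raw output string rather than a `TaskOutput` object. The word limit and function name are illustrative.

```python
from typing import Any, Tuple

MAX_WORDS = 200  # illustrative limit, not a CrewAI default


def word_limit_guardrail(raw_output: str) -> Tuple[bool, Any]:
    """Return (True, cleaned_output) if valid, else (False, feedback_message)."""
    cleaned = raw_output.strip()
    if not cleaned:
        return False, "Output is empty; please provide content."
    word_count = len(cleaned.split())
    if word_count > MAX_WORDS:
        # The feedback string is what the agent would receive when asked to retry
        return False, f"Output has {word_count} words; limit is {MAX_WORDS}."
    # Transform step: pass the whitespace-trimmed text to the next task
    return True, cleaned
```

Returning the cleaned text instead of the input shows the "transform" half of the feature. A guardrail that only validates would return the original value unchanged.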

98

docs/how-to/langfuse-observability.mdx

Normal file


@@ -0,0 +1,98 @@

|

||||

---

|

||||

title: Agent Monitoring with Langfuse

|

||||

description: Learn how to integrate Langfuse with CrewAI via OpenTelemetry using OpenLit

|

||||

icon: magnifying-glass-chart

|

||||

---

|

||||

|

||||

# Integrate Langfuse with CrewAI

|

||||

|

||||

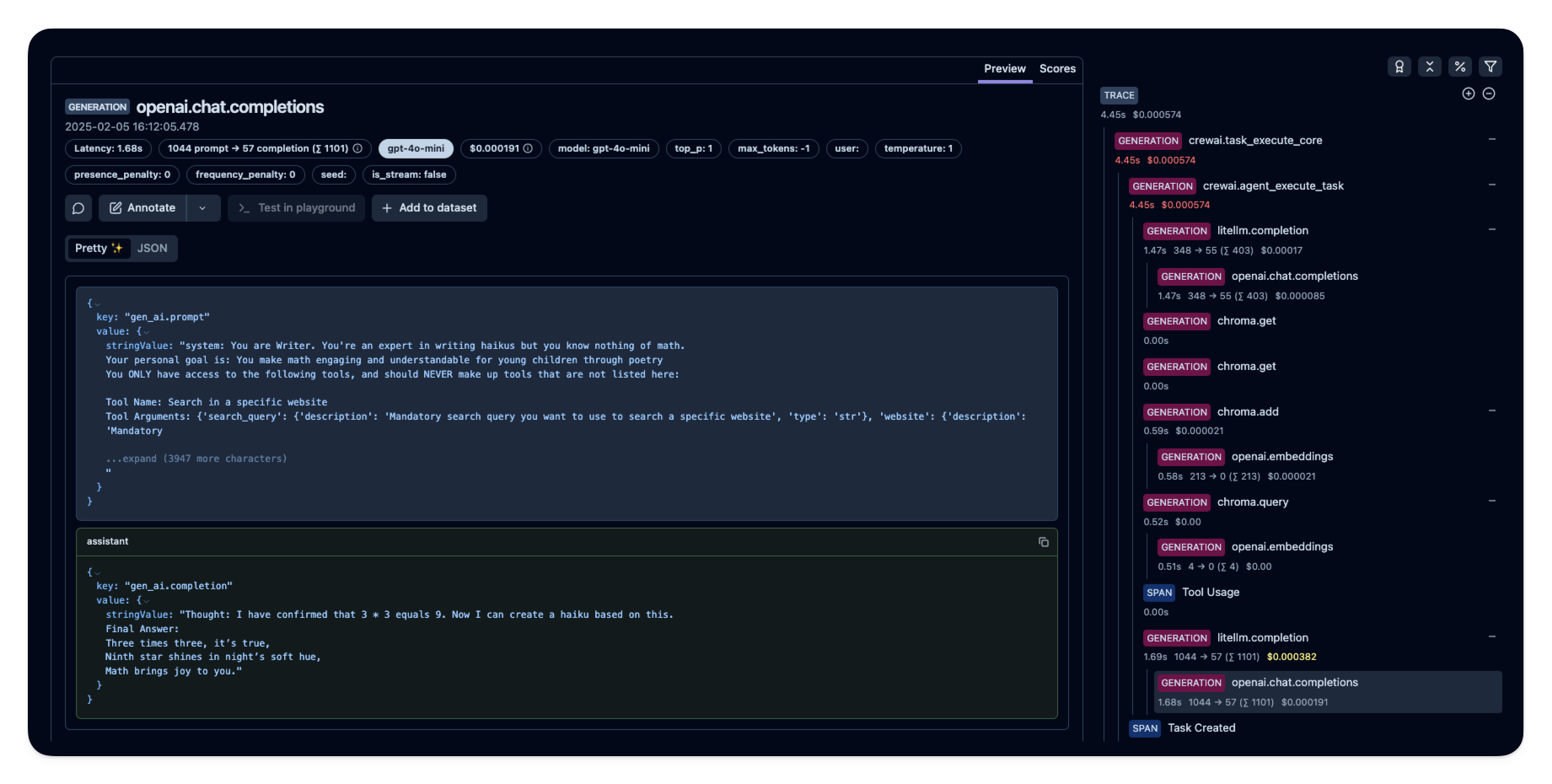

This notebook demonstrates how to integrate **Langfuse** with **CrewAI** using OpenTelemetry via the **OpenLit** SDK. By the end of this notebook, you will be able to trace your CrewAI applications with Langfuse for improved observability and debugging.

|

||||

|

||||

> **What is Langfuse?** [Langfuse](https://langfuse.com) is an open-source LLM engineering platform. It provides tracing and monitoring capabilities for LLM applications, helping developers debug, analyze, and optimize their AI systems. Langfuse integrates with various tools and frameworks via native integrations, OpenTelemetry, and APIs/SDKs.

|

||||

|

||||

## Get Started

|

||||

|

||||

We'll walk through a simple example of using CrewAI and integrating it with Langfuse via OpenTelemetry using OpenLit.

|

||||

|

||||

### Step 1: Install Dependencies

|

||||

|

||||

|

||||

```python

|

||||

%pip install langfuse openlit crewai crewai_tools

|

||||

```

|

||||

|

||||

### Step 2: Set Up Environment Variables

|

||||

|

||||

Set your Langfuse API keys and configure OpenTelemetry export settings to send traces to Langfuse. Please refer to the [Langfuse OpenTelemetry Docs](https://langfuse.com/docs/opentelemetry/get-started) for more information on the Langfuse OpenTelemetry endpoint `/api/public/otel` and authentication.

|

||||

|

||||

|

||||

```python

|

||||

import os

|

||||

import base64

|

||||

|

||||

LANGFUSE_PUBLIC_KEY="pk-lf-..."

|

||||

LANGFUSE_SECRET_KEY="sk-lf-..."

|

||||

LANGFUSE_AUTH=base64.b64encode(f"{LANGFUSE_PUBLIC_KEY}:{LANGFUSE_SECRET_KEY}".encode()).decode()

|

||||

|

||||

os.environ["OTEL_EXPORTER_OTLP_ENDPOINT"] = "https://cloud.langfuse.com/api/public/otel" # EU data region

|

||||

# os.environ["OTEL_EXPORTER_OTLP_ENDPOINT"] = "https://us.cloud.langfuse.com/api/public/otel" # US data region

|

||||

os.environ["OTEL_EXPORTER_OTLP_HEADERS"] = f"Authorization=Basic {LANGFUSE_AUTH}"

|

||||

|

||||

# your openai key

|

||||

os.environ["OPENAI_API_KEY"] = "sk-..."

|

||||

```

|

||||

|

||||

### Step 3: Initialize OpenLit

|

||||

|

||||

Initialize the OpenLit OpenTelemetry instrumentation SDK to start capturing OpenTelemetry traces.

|

||||

|

||||

|

||||

```python

|

||||

import openlit

|

||||

|

||||

openlit.init()

|

||||

```

|

||||

|

||||

### Step 4: Create a Simple CrewAI Application

|

||||

|

||||

We'll create a simple CrewAI application where multiple agents collaborate to answer a user's question.

|

||||

|

||||

|

||||

```python

|

||||

from crewai import Agent, Task, Crew

|

||||

|

||||

from crewai_tools import (

|

||||

WebsiteSearchTool

|

||||

)

|

||||

|

||||

web_rag_tool = WebsiteSearchTool()

|

||||

|

||||

writer = Agent(

|

||||

role="Writer",

|

||||

goal="You make math engaging and understandable for young children through poetry",

|

||||

backstory="You're an expert in writing haikus but you know nothing of math.",

|

||||

tools=[web_rag_tool],

|

||||

)

|

||||

|

||||

task = Task(description=("What is {multiplication}?"),

|

||||

expected_output=("Compose a haiku that includes the answer."),

|

||||

agent=writer)

|

||||

|

||||

crew = Crew(

|

||||

agents=[writer],

|

||||

tasks=[task],

|

||||

share_crew=False

|

||||

)

|

||||

```

|

||||

|

||||

### Step 5: See Traces in Langfuse

|

||||

|

||||

After running the agent, you can view the traces generated by your CrewAI application in [Langfuse](https://cloud.langfuse.com). You should see detailed steps of the LLM interactions, which can help you debug and optimize your AI agent.

|

||||

|

||||

|

||||

|

||||

_[Public example trace in Langfuse](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/e2cf380ffc8d47d28da98f136140642b?timestamp=2025-02-05T15%3A12%3A02.717Z&observation=3b32338ee6a5d9af)_

|

||||

|

||||

## References

|

||||

|

||||

- [Langfuse OpenTelemetry Docs](https://langfuse.com/docs/opentelemetry/get-started)

|

||||
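Step 2 of the new Langfuse guide builds the `Authorization=Basic ...` header inline and sets the environment directly. The sketch below packages the same steps into a function that returns the variables instead of setting them. This makes the EU/US choice explicit and lets you check the encoding. `langfuse_otel_env` is a hypothetical helper; the two endpoint URLs are the ones listed in Step 2.

```python
import base64
from typing import Dict

# Endpoints as listed in Step 2 of the guide
LANGFUSE_OTEL_ENDPOINTS = {
    "eu": "https://cloud.langfuse.com/api/public/otel",
    "us": "https://us.cloud.langfuse.com/api/public/otel",
}


def langfuse_otel_env(public_key: str, secret_key: str, region: str = "eu") -> Dict[str, str]:
    """Return the OTLP exporter variables for Langfuse (Basic auth over public:secret)."""
    auth = base64.b64encode(f"{public_key}:{secret_key}".encode()).decode()
    return {
        "OTEL_EXPORTER_OTLP_ENDPOINT": LANGFUSE_OTEL_ENDPOINTS[region],
        "OTEL_EXPORTER_OTLP_HEADERS": f"Authorization=Basic {auth}",
    }
```

Apply the result with `os.environ.update(langfuse_otel_env(LANGFUSE_PUBLIC_KEY, LANGFUSE_SECRET_KEY))` before calling `openlit.init()`. The exporter configuration needs to be in place when instrumentation starts.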

````diff
@@ -1,211 +0,0 @@
-# Portkey Integration with CrewAI
-<img src="https://raw.githubusercontent.com/siddharthsambharia-portkey/Portkey-Product-Images/main/Portkey-CrewAI.png" alt="Portkey CrewAI Header Image" width="70%" />
-
-[Portkey](https://portkey.ai/?utm_source=crewai&utm_medium=crewai&utm_campaign=crewai) is a 2-line upgrade to make your CrewAI agents reliable, cost-efficient, and fast.
-
-Portkey adds 4 core production capabilities to any CrewAI agent:
-1. Routing to **200+ LLMs**
-2. Making each LLM call more robust
-3. Full-stack tracing & cost, performance analytics
-4. Real-time guardrails to enforce behavior
-
-## Getting Started
-
-1. **Install Required Packages:**
-
-```bash
-pip install -qU crewai portkey-ai
-```
-
-2. **Configure the LLM Client:**
-
-To build CrewAI Agents with Portkey, you'll need two keys:
-- **Portkey API Key**: Sign up on the [Portkey app](https://app.portkey.ai/?utm_source=crewai&utm_medium=crewai&utm_campaign=crewai) and copy your API key
-- **Virtual Key**: Virtual Keys securely manage your LLM API keys in one place. Store your LLM provider API keys securely in Portkey's vault
-
-```python
-from crewai import LLM
-from portkey_ai import createHeaders, PORTKEY_GATEWAY_URL
-
-gpt_llm = LLM(
-    model="gpt-4",
-    base_url=PORTKEY_GATEWAY_URL,
-    api_key="dummy", # We are using Virtual key
-    extra_headers=createHeaders(
-        api_key="YOUR_PORTKEY_API_KEY",
-        virtual_key="YOUR_VIRTUAL_KEY", # Enter your Virtual key from Portkey
-    )
-)
-```
-
-3. **Create and Run Your First Agent:**
-
-```python
-from crewai import Agent, Task, Crew
-
-# Define your agents with roles and goals
-coder = Agent(
-    role='Software developer',
-    goal='Write clear, concise code on demand',
-    backstory='An expert coder with a keen eye for software trends.',
-    llm=gpt_llm
-)
-
-# Create tasks for your agents
-task1 = Task(
-    description="Define the HTML for making a simple website with heading- Hello World! Portkey is working!",
-    expected_output="A clear and concise HTML code",
-    agent=coder
-)
-
-# Instantiate your crew
-crew = Crew(
-    agents=[coder],
-    tasks=[task1],
-)
-
-result = crew.kickoff()
-print(result)
-```
-
-## Key Features
-
-| Feature | Description |
-|---------|-------------|
-| 🌐 Multi-LLM Support | Access OpenAI, Anthropic, Gemini, Azure, and 250+ providers through a unified interface |
-| 🛡️ Production Reliability | Implement retries, timeouts, load balancing, and fallbacks |
-| 📊 Advanced Observability | Track 40+ metrics including costs, tokens, latency, and custom metadata |
-| 🔍 Comprehensive Logging | Debug with detailed execution traces and function call logs |
-| 🚧 Security Controls | Set budget limits and implement role-based access control |
-| 🔄 Performance Analytics | Capture and analyze feedback for continuous improvement |
-| 💾 Intelligent Caching | Reduce costs and latency with semantic or simple caching |
-
-## Production Features with Portkey Configs
-
-All features mentioned below are through Portkey's Config system. Portkey's Config system allows you to define routing strategies using simple JSON objects in your LLM API calls. You can create and manage Configs directly in your code or through the Portkey Dashboard. Each Config has a unique ID for easy reference.
-
-<Frame>
-<img src="https://raw.githubusercontent.com/Portkey-AI/docs-core/refs/heads/main/images/libraries/libraries-3.avif"/>
-</Frame>
-
-### 1. Use 250+ LLMs
-Access various LLMs like Anthropic, Gemini, Mistral, Azure OpenAI, and more with minimal code changes. Switch between providers or use them together seamlessly. [Learn more about Universal API](https://portkey.ai/docs/product/ai-gateway/universal-api)
-
-Easily switch between different LLM providers:
-
-```python
-# Anthropic Configuration
-anthropic_llm = LLM(
-    model="claude-3-5-sonnet-latest",
-    base_url=PORTKEY_GATEWAY_URL,
-    api_key="dummy",
-    extra_headers=createHeaders(
-        api_key="YOUR_PORTKEY_API_KEY",
-        virtual_key="YOUR_ANTHROPIC_VIRTUAL_KEY", #You don't need provider when using Virtual keys
-        trace_id="anthropic_agent"
-    )
-)
-
-# Azure OpenAI Configuration
-azure_llm = LLM(
-    model="gpt-4",
-    base_url=PORTKEY_GATEWAY_URL,
-    api_key="dummy",
-    extra_headers=createHeaders(
-        api_key="YOUR_PORTKEY_API_KEY",
-        virtual_key="YOUR_AZURE_VIRTUAL_KEY", #You don't need provider when using Virtual keys
-        trace_id="azure_agent"
-    )
-)
-```
-
-### 2. Caching
-Improve response times and reduce costs with two powerful caching modes:
-- **Simple Cache**: Perfect for exact matches
-- **Semantic Cache**: Matches responses for requests that are semantically similar
-[Learn more about Caching](https://portkey.ai/docs/product/ai-gateway/cache-simple-and-semantic)
-
-```py
-config = {
-    "cache": {
-        "mode": "semantic", # or "simple" for exact matching
-    }
-}
-```
-
-### 3. Production Reliability
-Portkey provides comprehensive reliability features:
-- **Automatic Retries**: Handle temporary failures gracefully
-- **Request Timeouts**: Prevent hanging operations
-- **Conditional Routing**: Route requests based on specific conditions
-- **Fallbacks**: Set up automatic provider failovers
-- **Load Balancing**: Distribute requests efficiently
-
-[Learn more about Reliability Features](https://portkey.ai/docs/product/ai-gateway/)
-
-### 4. Metrics
-
-Agent runs are complex. Portkey automatically logs **40+ comprehensive metrics** for your AI agents, including cost, tokens used, latency, etc. Whether you need a broad overview or granular insights into your agent runs, Portkey's customizable filters provide the metrics you need.
-
-- Cost per agent interaction
-- Response times and latency
-- Token usage and efficiency
-- Success/failure rates
-- Cache hit rates
-
-<img src="https://github.com/siddharthsambharia-portkey/Portkey-Product-Images/blob/main/Portkey-Dashboard.png?raw=true" width="70%" alt="Portkey Dashboard" />
-
-### 5. Detailed Logging
-Logs are essential for understanding agent behavior, diagnosing issues, and improving performance. They provide a detailed record of agent activities and tool use, which is crucial for debugging and optimizing processes.
-
-Access a dedicated section to view records of agent executions, including parameters, outcomes, function calls, and errors. Filter logs based on multiple parameters such as trace ID, model, tokens used, and metadata.
-
-<details>
-<summary><b>Traces</b></summary>
-<img src="https://raw.githubusercontent.com/siddharthsambharia-portkey/Portkey-Product-Images/main/Portkey-Traces.png" alt="Portkey Traces" width="70%" />
-</details>
-
-<details>
-<summary><b>Logs</b></summary>
-<img src="https://raw.githubusercontent.com/siddharthsambharia-portkey/Portkey-Product-Images/main/Portkey-Logs.png" alt="Portkey Logs" width="70%" />
-</details>
-
-### 6. Enterprise Security Features
-- Set budget limit and rate limts per Virtual Key (disposable API keys)
-- Implement role-based access control
-- Track system changes with audit logs
-- Configure data retention policies
-
-For detailed information on creating and managing Configs, visit the [Portkey documentation](https://docs.portkey.ai/product/ai-gateway/configs).
-
-## Resources
-
-- [📘 Portkey Documentation](https://docs.portkey.ai)
-- [📊 Portkey Dashboard](https://app.portkey.ai/?utm_source=crewai&utm_medium=crewai&utm_campaign=crewai)
-- [🐦 Twitter](https://twitter.com/portkeyai)
-- [💬 Discord Community](https://discord.gg/DD7vgKK299)
````

````diff
@@ -1,5 +1,5 @@
 ---
-title: Portkey Observability and Guardrails
+title: Agent Monitoring with Portkey
 description: How to use Portkey with CrewAI
 icon: key
 ---
````

````diff
@@ -103,7 +103,8 @@
         "how-to/langtrace-observability",
         "how-to/mlflow-observability",
         "how-to/openlit-observability",
-        "how-to/portkey-observability"
+        "how-to/portkey-observability",
+        "how-to/langfuse-observability"
       ]
     },
     {
````

````diff
@@ -8,9 +8,9 @@ icon: file-pen

 ## Description

-The `FileWriterTool` is a component of the crewai_tools package, designed to simplify the process of writing content to files.
+The `FileWriterTool` is a component of the crewai_tools package, designed to simplify the process of writing content to files with cross-platform compatibility (Windows, Linux, macOS).
 It is particularly useful in scenarios such as generating reports, saving logs, creating configuration files, and more.
-This tool supports creating new directories if they don't exist, making it easier to organize your output.
+This tool handles path differences across operating systems, supports UTF-8 encoding, and automatically creates directories if they don't exist, making it easier to organize your output reliably across different platforms.

 ## Installation

````

````diff
@@ -43,6 +43,8 @@ print(result)

 ## Conclusion

-By integrating the `FileWriterTool` into your crews, the agents can execute the process of writing content to files and creating directories.
-This tool is essential for tasks that require saving output data, creating structured file systems, and more. By adhering to the setup and usage guidelines provided,
-incorporating this tool into projects is straightforward and efficient.
+By integrating the `FileWriterTool` into your crews, the agents can reliably write content to files across different operating systems.
+This tool is essential for tasks that require saving output data, creating structured file systems, and handling cross-platform file operations.
+It's particularly recommended for Windows users who may encounter file writing issues with standard Python file operations.
+
+By adhering to the setup and usage guidelines provided, incorporating this tool into projects is straightforward and ensures consistent file writing behavior across all platforms.
````
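The revised `FileWriterTool` description stresses three behaviors: OS-independent paths, UTF-8 encoding, and creating missing directories. The standard-library sketch below shows those three behaviors. It is not `FileWriterTool`'s implementation, and `write_text_file` with its `overwrite` flag is hypothetical.

```python
from pathlib import Path


def write_text_file(directory: str, filename: str, content: str,
                    overwrite: bool = True) -> Path:
    """Write `content` to directory/filename as UTF-8, creating directories as needed."""
    # pathlib uses the right separator on Windows, Linux and macOS
    target = Path(directory) / filename
    target.parent.mkdir(parents=True, exist_ok=True)
    if target.exists() and not overwrite:
        raise FileExistsError(f"{target} already exists")
    # Explicit UTF-8 avoids platform-dependent defaults (e.g. cp1252 on Windows);
    # newline="" writes '\n' as-is instead of translating it to os.linesep
    with target.open("w", encoding="utf-8", newline="") as fh:
        fh.write(content)
    return target
```

The explicit `encoding` argument does most of the cross-platform work here. A bare `open(path, "w")` uses the locale's encoding, which is what typically breaks non-ASCII output on Windows.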

````diff
@@ -17,12 +17,51 @@ The SeleniumScrapingTool is crafted for high-efficiency web scraping tasks.
 It allows for precise extraction of content from web pages by using CSS selectors to target specific elements.
 Its design caters to a wide range of scraping needs, offering flexibility to work with any provided website URL.

+## Prerequisites
+
+- Python 3.7 or higher
+- Chrome browser installed (for ChromeDriver)
+
 ## Installation

 To get started with the SeleniumScrapingTool, install the crewai_tools package using pip:
+### Option 1: All-in-one installation
+```shell
+pip install 'crewai[tools]' selenium>=4.0.0 webdriver-manager>=3.8.0
+```
+
+### Option 2: Step-by-step installation
 ```shell
 pip install 'crewai[tools]'
+pip install selenium>=4.0.0
+pip install webdriver-manager>=3.8.0
 ```

+### Common Installation Issues
+
+1. If you encounter WebDriver issues, ensure your Chrome browser is up-to-date
+2. For Linux users, you might need to install additional system packages:
+```shell
+sudo apt-get install chromium-chromedriver
+```
+
+## Basic Usage
+
+Here's a simple example to get you started with error handling:
+
+```python
+from crewai_tools import SeleniumScrapingTool
+
+try:
+    # Initialize the tool with a specific website
+    tool = SeleniumScrapingTool(website_url='https://example.com')
+
+    # Extract content
+    content = tool.run()
+    print(content)
+except Exception as e:
+    print(f"Error during scraping: {str(e)}")
+    # Ensure proper cleanup in case of errors
+    tool.cleanup()
+```
+
 ## Usage Examples
````
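The Basic Usage example added in this hunk has two problems. `tool.cleanup()` runs inside the `except` block, so if `SeleniumScrapingTool(...)` itself raises, `tool` is unbound and the handler fails with `NameError`. Cleanup is also skipped when scraping succeeds. The sketch below shows a `try`/`finally` pattern that avoids both problems. It assumes only that the tool exposes `run()` and `cleanup()`, as the example does. `FakeScraper` is a hypothetical stand-in so the sketch runs without Selenium.

```python
from typing import Callable, Optional, Protocol


class Scraper(Protocol):
    """Minimal interface assumed from the example: run() and cleanup()."""
    def run(self) -> str: ...
    def cleanup(self) -> None: ...


def scrape_with_cleanup(factory: Callable[[], Scraper]) -> Optional[str]:
    """Create a scraper, run it, and always release it if it was created."""
    tool: Optional[Scraper] = None
    try:
        tool = factory()  # may raise, e.g. when ChromeDriver is missing
        return tool.run()
    except Exception as exc:
        print(f"Error during scraping: {exc}")
        return None
    finally:
        # Runs on success and on failure; skipped only if construction failed
        if tool is not None:
            tool.cleanup()


class FakeScraper:
    """Hypothetical stand-in used to exercise the pattern without a browser."""
    def __init__(self, fail_on_run: bool = False) -> None:
        self.fail_on_run = fail_on_run
        self.cleaned = False

    def run(self) -> str:
        if self.fail_on_run:
            raise RuntimeError("page load failed")
        return "<html>ok</html>"

    def cleanup(self) -> None:
        self.cleaned = True
```

With the real tool this would be called as `scrape_with_cleanup(lambda: SeleniumScrapingTool(website_url="https://example.com"))`.

The install commands in the same hunk have a separate problem. POSIX shells parse unquoted arguments such as `selenium>=4.0.0` as an output redirection to a file named `=4.0.0`, so the version constraint never reaches pip. Quote the requirement, for example `pip install 'selenium>=4.0.0'`.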

````diff
@@ -1,6 +1,6 @@
 [project]
 name = "crewai"
-version = "0.100.1"
+version = "0.102.0"
 description = "Cutting-edge framework for orchestrating role-playing, autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks."
 readme = "README.md"
 requires-python = ">=3.10,<3.13"
````

````diff
@@ -45,7 +45,7 @@ Documentation = "https://docs.crewai.com"
 Repository = "https://github.com/crewAIInc/crewAI"

 [project.optional-dependencies]
-tools = ["crewai-tools>=0.32.1"]
+tools = ["crewai-tools>=0.36.0"]
 embeddings = [
     "tiktoken~=0.7.0"
 ]
````

````diff
@@ -14,7 +14,7 @@ warnings.filterwarnings(
     category=UserWarning,
     module="pydantic.main",
 )
-__version__ = "0.100.1"
+__version__ = "0.102.0"
 __all__ = [
     "Agent",
     "Crew",
````

````diff
@@ -16,7 +16,6 @@ from crewai.memory.contextual.contextual_memory import ContextualMemory
 from crewai.task import Task
 from crewai.tools import BaseTool
 from crewai.tools.agent_tools.agent_tools import AgentTools
-from crewai.tools.base_tool import Tool
 from crewai.utilities import Converter, Prompts
 from crewai.utilities.constants import TRAINED_AGENTS_DATA_FILE, TRAINING_DATA_FILE
 from crewai.utilities.converter import generate_model_description
````

@@ -146,7 +145,7 @@ class Agent(BaseAgent):

|

||||

def _set_knowledge(self):

|

||||

try:

|

||||

if self.knowledge_sources:

|

||||

full_pattern = re.compile(r'[^a-zA-Z0-9\-_\r\n]|(\.\.)')

|

||||

full_pattern = re.compile(r"[^a-zA-Z0-9\-_\r\n]|(\.\.)")

|

||||

knowledge_agent_name = f"{re.sub(full_pattern, '_', self.role)}"

|

||||

if isinstance(self.knowledge_sources, list) and all(

|

||||

isinstance(k, BaseKnowledgeSource) for k in self.knowledge_sources
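
The `_set_knowledge` hunk only changes the quote style; both raw-string patterns are identical. The pattern turns an agent's `role` into a storage-safe name by replacing every character outside `[a-zA-Z0-9-_\r\n]` with `_`. Here is a standalone sketch, using `sanitize_role` as a hypothetical helper name:

```python
import re

# Same pattern as the hunk: any character outside [a-zA-Z0-9-_\r\n], or a literal ".."
FULL_PATTERN = re.compile(r"[^a-zA-Z0-9\-_\r\n]|(\.\.)")


def sanitize_role(role: str) -> str:
    """Hypothetical helper mirroring knowledge_agent_name: replace disallowed chars with '_'."""
    return re.sub(FULL_PATTERN, "_", role)


print(sanitize_role("Senior Data Researcher"))  # Senior_Data_Researcher
print(sanitize_role("../etc/passwd"))  # ___etc_passwd
```

The character `.` is already outside the allowed class, so the first alternative matches each dot on its own. As a result, the `(\.\.)` branch never changes the output: `..` becomes `__` either way.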

@@ -3,11 +3,6 @@ import subprocess
import click

from crewai.cli.utils import get_crew
from crewai.knowledge.storage.knowledge_storage import KnowledgeStorage
from crewai.memory.entity.entity_memory import EntityMemory
from crewai.memory.long_term.long_term_memory import LongTermMemory
from crewai.memory.short_term.short_term_memory import ShortTermMemory
from crewai.utilities.task_output_storage_handler import TaskOutputStorageHandler


def reset_memories_command(

@@ -56,7 +56,8 @@ def test():
Test the crew execution and returns the results.
"""
inputs = {
"topic": "AI LLMs"
"topic": "AI LLMs",
"current_year": str(datetime.now().year)
}
try:
{{crew_name}}().crew().test(n_iterations=int(sys.argv[1]), openai_model_name=sys.argv[2], inputs=inputs)
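
The `test()` template now passes `current_year` alongside `topic`. That is why `"topic": "AI LLMs"` gains a trailing comma. The template still reads the iteration count and model name positionally from `sys.argv[1]` and `sys.argv[2]`. Below is a sketch of that argument handling without crewAI. `build_test_args` is a hypothetical helper, and its usage message is an assumption.

```python
from datetime import datetime
from typing import Any, Dict, List, Tuple


def build_test_args(argv: List[str]) -> Tuple[int, str, Dict[str, Any]]:
    """Hypothetical helper: argv[1] = n_iterations, argv[2] = openai_model_name."""
    if len(argv) < 3:
        # Assumed usage message; the template itself indexes sys.argv directly
        raise SystemExit("usage: test <n_iterations> <openai_model_name>")
    inputs = {
        "topic": "AI LLMs",
        "current_year": str(datetime.now().year),  # a string, as in the template
    }
    return int(argv[1]), argv[2], inputs


n_iterations, model_name, inputs = build_test_args(["main.py", "3", "gpt-4o-mini"])
print(n_iterations, model_name, inputs["topic"])  # 3 gpt-4o-mini AI LLMs
```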

@@ -5,7 +5,7 @@ description = "{{name}} using crewAI"
authors = [{ name = "Your Name", email = "you@example.com" }]
requires-python = ">=3.10,<3.13"
dependencies = [
"crewai[tools]>=0.100.1,<1.0.0"
"crewai[tools]>=0.102.0,<1.0.0"
]

[project.scripts]

@@ -5,7 +5,7 @@ description = "{{name}} using crewAI"
authors = [{ name = "Your Name", email = "you@example.com" }]
requires-python = ">=3.10,<3.13"
dependencies = [
"crewai[tools]>=0.100.1,<1.0.0",
"crewai[tools]>=0.102.0,<1.0.0",
]

[project.scripts]

@@ -5,7 +5,7 @@ description = "Power up your crews with {{folder_name}}"
readme = "README.md"
requires-python = ">=3.10,<3.13"
dependencies = [
"crewai[tools]>=0.100.1"
"crewai[tools]>=0.102.0"
]

[tool.crewai]

@@ -1,7 +1,6 @@
import asyncio
import json
import re
import sys
import uuid
import warnings
from concurrent.futures import Future

@@ -381,6 +380,22 @@ class Crew(BaseModel):

return self

@model_validator(mode="after")
def validate_must_have_non_conditional_task(self) -> "Crew":
"""Ensure that a crew has at least one non-conditional task."""
if not self.tasks:
return self
non_conditional_count = sum(
1 for task in self.tasks if not isinstance(task, ConditionalTask)
)
if non_conditional_count == 0:
raise PydanticCustomError(
"only_conditional_tasks",
"Crew must include at least one non-conditional task",
{},
)
return self

@model_validator(mode="after")
def validate_first_task(self) -> "Crew":
"""Ensure the first task is not a ConditionalTask."""

@@ -441,6 +456,7 @@ class Crew(BaseModel):
return self



@property
def key(self) -> str:
source = [agent.key for agent in self.agents] + [

@@ -743,6 +759,7 @@ class Crew(BaseModel):
task, task_outputs, futures, task_index, was_replayed
)
if skipped_task_output:
task_outputs.append(skipped_task_output)
continue

if task.async_execution:

@@ -766,7 +783,7 @@ class Crew(BaseModel):
context=context,
tools=tools_for_task,
)
task_outputs = [task_output]
task_outputs.append(task_output)
self._process_task_result(task, task_output)
self._store_execution_log(task, task_output, task_index, was_replayed)

@@ -787,7 +804,7 @@ class Crew(BaseModel):
task_outputs = self._process_async_tasks(futures, was_replayed)
futures.clear()

previous_output = task_outputs[task_index - 1] if task_outputs else None
previous_output = task_outputs[-1] if task_outputs else None
if previous_output is not None and not task.should_execute(previous_output):
self._logger.log(
"debug",

@@ -909,11 +926,15 @@ class Crew(BaseModel):
)

def _create_crew_output(self, task_outputs: List[TaskOutput]) -> CrewOutput:
if len(task_outputs) != 1:
raise ValueError(
"Something went wrong. Kickoff should return only one task output."
)
final_task_output = task_outputs[0]
if not task_outputs:
raise ValueError("No task outputs available to create crew output.")

# Filter out empty outputs and get the last valid one as the main output
valid_outputs = [t for t in task_outputs if t.raw]
if not valid_outputs:
raise ValueError("No valid task outputs available to create crew output.")
final_task_output = valid_outputs[-1]

final_string_output = final_task_output.raw
self._finish_execution(final_string_output)
token_usage = self.calculate_usage_metrics()

@@ -922,7 +943,7 @@ class Crew(BaseModel):
raw=final_task_output.raw,
pydantic=final_task_output.pydantic,
json_dict=final_task_output.json_dict,
tasks_output=[task.output for task in self.tasks if task.output],
tasks_output=task_outputs,
token_usage=token_usage,
)
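
`_create_crew_output` no longer requires exactly one output. It drops outputs whose `raw` is empty, uses the last remaining one as the crew's final output, and reports the full `task_outputs` list as `tasks_output`. Below is a sketch of the selection step. It assumes a hypothetical `TaskOutput` dataclass that carries only `raw`; the real class has more fields.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TaskOutput:
    """Hypothetical stand-in: only the `raw` field the hunk filters on."""
    raw: str


def select_final_output(task_outputs: List[TaskOutput]) -> TaskOutput:
    if not task_outputs:
        raise ValueError("No task outputs available to create crew output.")
    # Drop outputs with empty `raw`, then take the last remaining one
    valid_outputs = [t for t in task_outputs if t.raw]
    if not valid_outputs:
        raise ValueError("No valid task outputs available to create crew output.")
    return valid_outputs[-1]


outputs = [TaskOutput("research notes"), TaskOutput("final report"), TaskOutput("")]
print(select_final_output(outputs).raw)  # final report
```

The empty `raw` values presumably come from skipped conditional tasks, which the `-743` hunk appends to `task_outputs`.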

@@ -1,4 +1,5 @@
import asyncio
import copy
import inspect
import logging
from typing import (

@@ -394,7 +395,6 @@ class FlowMeta(type):
or hasattr(attr_value, "__trigger_methods__")
or hasattr(attr_value, "__is_router__")
):

# Register start methods
if hasattr(attr_value, "__is_start_method__"):
start_methods.append(attr_name)

@@ -569,6 +569,9 @@ class Flow(Generic[T], metaclass=FlowMeta):
f"Initial state must be dict or BaseModel, got {type(self.initial_state)}"
)

def _copy_state(self) -> T:
return copy.deepcopy(self._state)

@property
def state(self) -> T:
return self._state
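
The new `_copy_state` helper returns `copy.deepcopy(self._state)`, and the method-execution events carry `state=self._copy_state()`. Deep-copying freezes the state as it was when each event was emitted, so later mutations by the flow do not rewrite the event history. Below is a sketch using hypothetical `MiniFlow` and `StateEvent` classes and a dict state.

```python
import copy
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass
class StateEvent:  # hypothetical stand-in for MethodExecution*Event
    method_name: str
    state: Dict[str, Any]


class MiniFlow:
    """Hypothetical flow that snapshots its state into an event before each step."""

    def __init__(self) -> None:
        self._state: Dict[str, Any] = {"counter": 0, "items": []}
        self.events: List[StateEvent] = []

    def _copy_state(self) -> Dict[str, Any]:
        return copy.deepcopy(self._state)

    def step(self, name: str) -> None:
        self.events.append(StateEvent(name, self._copy_state()))  # snapshot first
        self._state["counter"] += 1
        self._state["items"].append(name)  # mutates the nested list in place


flow = MiniFlow()
flow.step("a")
flow.step("b")
print(flow.events[0].state)  # {'counter': 0, 'items': []}
```

A shallow copy (`dict(self._state)`) would not be enough here. The nested `items` list would still be shared, and the first event's snapshot would end up showing `['a', 'b']`.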

@@ -740,6 +743,7 @@ class Flow(Generic[T], metaclass=FlowMeta):
event=FlowStartedEvent(
type="flow_started",
flow_name=self.__class__.__name__,
inputs=inputs,
),
)
self._log_flow_event(

@@ -803,6 +807,18 @@ class Flow(Generic[T], metaclass=FlowMeta):
async def _execute_method(
self, method_name: str, method: Callable, *args: Any, **kwargs: Any
) -> Any:
dumped_params = {f"_{i}": arg for i, arg in enumerate(args)} | (kwargs or {})
self.event_emitter.send(
self,
event=MethodExecutionStartedEvent(
type="method_execution_started",
method_name=method_name,
flow_name=self.__class__.__name__,
params=dumped_params,
state=self._copy_state(),
),
)

result = (
await method(*args, **kwargs)
if asyncio.iscoroutinefunction(method)

@@ -812,6 +828,18 @@ class Flow(Generic[T], metaclass=FlowMeta):
self._method_execution_counts[method_name] = (
self._method_execution_counts.get(method_name, 0) + 1
)

self.event_emitter.send(
self,
event=MethodExecutionFinishedEvent(
type="method_execution_finished",
method_name=method_name,
flow_name=self.__class__.__name__,
state=self._copy_state(),
result=result,
),
)

return result

async def _execute_listeners(self, trigger_method: str, result: Any) -> None:
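
`_execute_method` records each call's arguments as `params`. Positional arguments are keyed `_0`, `_1`, … by position. Keyword arguments are merged in with the dict union operator `|`, which needs Python 3.9+ and is therefore covered by `requires-python = ">=3.10,<3.13"`. Below is a standalone sketch with a hypothetical `dump_params` helper.

```python
from typing import Any, Dict


def dump_params(*args: Any, **kwargs: Any) -> Dict[str, Any]:
    # Positional args become "_0", "_1", ...; keyword args are merged in afterwards
    return {f"_{i}": arg for i, arg in enumerate(args)} | (kwargs or {})


print(dump_params("hello", 42, flag=True))  # {'_0': 'hello', '_1': 42, 'flag': True}
```

`kwargs` is always a dict, so `(kwargs or {})` behaves the same as plain `kwargs`. On a key collision the right-hand operand wins, so a keyword literally named `_0` would override the first positional entry.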

@@ -950,16 +978,6 @@ class Flow(Generic[T], metaclass=FlowMeta):
"""
try:
method = self._methods[listener_name]

self.event_emitter.send(
self,
event=MethodExecutionStartedEvent(
type="method_execution_started",
method_name=listener_name,
flow_name=self.__class__.__name__,
),
)

sig = inspect.signature(method)
params = list(sig.parameters.values())
method_params = [p for p in params if p.name != "self"]

@@ -971,15 +989,6 @@ class Flow(Generic[T], metaclass=FlowMeta):
else:
listener_result = await self._execute_method(listener_name, method)

self.event_emitter.send(
self,
event=MethodExecutionFinishedEvent(
type="method_execution_finished",
method_name=listener_name,
flow_name=self.__class__.__name__,
),
)

# Execute listeners (and possibly routers) of this listener
await self._execute_listeners(listener_name, listener_result)

@@ -1,6 +1,8 @@
from dataclasses import dataclass, field
from datetime import datetime
from typing import Any, Optional
from typing import Any, Dict, Optional, Union

from pydantic import BaseModel


@dataclass

@@ -15,17 +17,21 @@ class Event:

@dataclass
class FlowStartedEvent(Event):
pass
inputs: Optional[Dict[str, Any]] = None


@dataclass
class MethodExecutionStartedEvent(Event):
method_name: str
state: Union[Dict[str, Any], BaseModel]
params: Optional[Dict[str, Any]] = None


@dataclass
class MethodExecutionFinishedEvent(Event):
method_name: str
state: Union[Dict[str, Any], BaseModel]
result: Any = None


@dataclass

@@ -1,28 +1,138 @@
from pathlib import Path
from typing import Dict, List
from typing import Dict, Iterator, List, Optional, Union
from urllib.parse import urlparse

from crewai.knowledge.source.base_file_knowledge_source import BaseFileKnowledgeSource
from pydantic import Field, field_validator

from crewai.knowledge.source.base_knowledge_source import BaseKnowledgeSource
from crewai.utilities.constants import KNOWLEDGE_DIRECTORY
from crewai.utilities.logger import Logger


class ExcelKnowledgeSource(BaseFileKnowledgeSource):
class ExcelKnowledgeSource(BaseKnowledgeSource):
"""A knowledge source that stores and queries Excel file content using embeddings."""

def load_content(self) -> Dict[Path, str]:
"""Load and preprocess Excel file content."""
pd = self._import_dependencies()
# override content to be a dict of file paths to sheet names to csv content

_logger: Logger = Logger(verbose=True)

file_path: Optional[Union[Path, List[Path], str, List[str]]] = Field(
default=None,
description="[Deprecated] The path to the file. Use file_paths instead.",
)
file_paths: Optional[Union[Path, List[Path], str, List[str]]] = Field(
default_factory=list, description="The path to the file"
)
chunks: List[str] = Field(default_factory=list)
content: Dict[Path, Dict[str, str]] = Field(default_factory=dict)
safe_file_paths: List[Path] = Field(default_factory=list)

@field_validator("file_path", "file_paths", mode="before")
def validate_file_path(cls, v, info):
"""Validate that at least one of file_path or file_paths is provided."""
# Single check if both are None, O(1) instead of nested conditions
if (
v is None
and info.data.get(
"file_path" if info.field_name == "file_paths" else "file_paths"
)
is None
):
raise ValueError("Either file_path or file_paths must be provided")
return v

def _process_file_paths(self) -> List[Path]:
"""Convert file_path to a list of Path objects."""

if hasattr(self, "file_path") and self.file_path is not None:
self._logger.log(
"warning",
"The 'file_path' attribute is deprecated and will be removed in a future version. Please use 'file_paths' instead.",
color="yellow",
)
self.file_paths = self.file_path

if self.file_paths is None:
raise ValueError("Your source must be provided with a file_paths: []")

# Convert single path to list
path_list: List[Union[Path, str]] = (
[self.file_paths]
if isinstance(self.file_paths, (str, Path))
else list(self.file_paths)
if isinstance(self.file_paths, list)
else []
)

if not path_list:
raise ValueError(
"file_path/file_paths must be a Path, str, or a list of these types"
)

return [self.convert_to_path(path) for path in path_list]

def validate_content(self):
"""Validate the paths."""
for path in self.safe_file_paths:
if not path.exists():
self._logger.log(
"error",
f"File not found: {path}. Try adding sources to the knowledge directory. If it's inside the knowledge directory, use the relative path.",
color="red",
)
raise FileNotFoundError(f"File not found: {path}")
if not path.is_file():
self._logger.log(
"error",
f"Path is not a file: {path}",
color="red",
)

def model_post_init(self, _) -> None:
if self.file_path:
self._logger.log(
"warning",
"The 'file_path' attribute is deprecated and will be removed in a future version. Please use 'file_paths' instead.",
color="yellow",
)
self.file_paths = self.file_path
self.safe_file_paths = self._process_file_paths()
self.validate_content()
self.content = self._load_content()

def _load_content(self) -> Dict[Path, Dict[str, str]]:
"""Load and preprocess Excel file content from multiple sheets.

Each sheet's content is converted to CSV format and stored.

Returns:
Dict[Path, Dict[str, str]]: A mapping of file paths to their respective sheet contents.

Raises:
ImportError: If required dependencies are missing.
FileNotFoundError: If the specified Excel file cannot be opened.
"""
pd = self._import_dependencies()
content_dict = {}
for file_path in self.safe_file_paths:
file_path = self.convert_to_path(file_path)
df = pd.read_excel(file_path)
content = df.to_csv(index=False)
content_dict[file_path] = content
with pd.ExcelFile(file_path) as xl:
sheet_dict = {
str(sheet_name): str(
pd.read_excel(xl, sheet_name).to_csv(index=False)
)
for sheet_name in xl.sheet_names
}
content_dict[file_path] = sheet_dict
return content_dict

def convert_to_path(self, path: Union[Path, str]) -> Path:
"""Convert a path to a Path object."""
return Path(KNOWLEDGE_DIRECTORY + "/" + path) if isinstance(path, str) else path

def _import_dependencies(self):
"""Dynamically import dependencies."""
try:
import openpyxl # noqa
import pandas as pd

return pd
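
`_process_file_paths` accepts either a single `str`/`Path` or a list of them and wraps a single value in a list. It then hands each entry to `convert_to_path`, which resolves strings against `KNOWLEDGE_DIRECTORY` and leaves `Path` objects untouched. Below is a standalone sketch. The value `"knowledge"` for `KNOWLEDGE_DIRECTORY` and the module-level function names are assumptions for illustration.

```python
from pathlib import Path
from typing import List, Optional, Union

KNOWLEDGE_DIRECTORY = "knowledge"  # assumed value, for illustration only

PathLike = Union[Path, str]


def convert_to_path(path: PathLike) -> Path:
    # As in the hunk: strings are joined onto KNOWLEDGE_DIRECTORY; Path objects pass through
    return Path(KNOWLEDGE_DIRECTORY + "/" + path) if isinstance(path, str) else path


def process_file_paths(file_paths: Optional[Union[PathLike, List[PathLike]]]) -> List[Path]:
    if file_paths is None:
        raise ValueError("Your source must be provided with a file_paths: []")
    # Wrap a single path in a list; anything that is neither a path nor a list yields []
    path_list: List[PathLike] = (
        [file_paths]
        if isinstance(file_paths, (str, Path))
        else list(file_paths)
        if isinstance(file_paths, list)
        else []
    )
    if not path_list:
        raise ValueError("file_path/file_paths must be a Path, str, or a list of these types")
    return [convert_to_path(p) for p in path_list]
```

The string concatenation is kept exactly as in the hunk. Unlike `Path(KNOWLEDGE_DIRECTORY) / path`, it also prefixes absolute string paths such as `"/tmp/a.xlsx"` with the knowledge directory. To refer to a file elsewhere, pass a `Path` instead of a string.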

@@ -38,10 +148,14 @@ class ExcelKnowledgeSource(BaseFileKnowledgeSource):
and save the embeddings.
"""
# Convert dictionary values to a single string if content is a dictionary
if isinstance(self.content, dict):
content_str = "\n".join(str(value) for value in self.content.values())
else:
content_str = str(self.content)
# Updated to account for .xlsx workbooks with multiple tabs/sheets
content_str = ""
for value in self.content.values():
if isinstance(value, dict):
for sheet_value in value.values():
content_str += str(sheet_value) + "\n"
else:
content_str += str(value) + "\n"

new_chunks = self._chunk_text(content_str)
self.chunks.extend(new_chunks)
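
`content` now maps each file path to a `{sheet_name: csv_text}` dict. The embedding step therefore concatenates every sheet's CSV text, adding one newline after each, and still accepts plain string values. Below is a pandas-free sketch of that flattening. `flatten_content` is a hypothetical name, and the sample data is made up.

```python
from pathlib import Path
from typing import Dict, Union


def flatten_content(content: Dict[Path, Union[str, Dict[str, str]]]) -> str:
    content_str = ""
    for value in content.values():
        if isinstance(value, dict):
            # Multi-sheet workbook: one CSV string per sheet, in insertion order
            for sheet_value in value.values():
                content_str += str(sheet_value) + "\n"
        else:
            # Single-string content, kept for backward compatibility
            content_str += str(value) + "\n"
    return content_str


sample = {
    Path("a.xlsx"): {"Sheet1": "x,y\n1,2\n", "Sheet2": "z\n3\n"},
    Path("b.xlsx"): "legacy text",
}
print(repr(flatten_content(sample)))  # 'x,y\n1,2\n\nz\n3\n\nlegacy text\n'
```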

@@ -164,6 +164,7 @@ class LLM:
self.context_window_size = 0
self.reasoning_effort = reasoning_effort
self.additional_params = kwargs
self.is_anthropic = self._is_anthropic_model(model)

litellm.drop_params = True

@@ -178,42 +179,62 @@ class LLM:
self.set_callbacks(callbacks)
self.set_env_callbacks()

def _is_anthropic_model(self, model: str) -> bool:
"""Determine if the model is from Anthropic provider.

Args:
model: The model identifier string.

Returns:
bool: True if the model is from Anthropic, False otherwise.
"""
ANTHROPIC_PREFIXES = ('anthropic/', 'claude-', 'claude/')
return any(prefix in model.lower() for prefix in ANTHROPIC_PREFIXES)

def call(
self,
messages: Union[str, List[Dict[str, str]]],
tools: Optional[List[dict]] = None,
callbacks: Optional[List[Any]] = None,
available_functions: Optional[Dict[str, Any]] = None,
) -> str:
"""
High-level llm call method that:
1) Accepts either a string or a list of messages
2) Converts string input to the required message format
3) Calls litellm.completion
4) Handles function/tool calls if any
5) Returns the final text response or tool result

Parameters:
- messages (Union[str, List[Dict[str, str]]]): The input messages for the LLM.
- If a string is provided, it will be converted into a message list with a single entry.
- If a list of dictionaries is provided, each dictionary should have 'role' and 'content' keys.
- tools (Optional[List[dict]]): A list of tool schemas for function calling.
- callbacks (Optional[List[Any]]): A list of callback functions to be executed.
- available_functions (Optional[Dict[str, Any]]): A dictionary mapping function names to actual Python functions.

) -> Union[str, Any]:
"""High-level LLM call method.

Args:
messages: Input messages for the LLM.
Can be a string or list of message dictionaries.
If string, it will be converted to a single user message.
If list, each dict must have 'role' and 'content' keys.
tools: Optional list of tool schemas for function calling.
Each tool should define its name, description, and parameters.
callbacks: Optional list of callback functions to be executed
during and after the LLM call.
available_functions: Optional dict mapping function names to callables
that can be invoked by the LLM.

Returns:
- str: The final text response from the LLM or the result of a tool function call.

Union[str, Any]: Either a text response from the LLM (str) or
the result of a tool function call (Any).

Raises:
TypeError: If messages format is invalid
ValueError: If response format is not supported
LLMContextLengthExceededException: If input exceeds model's context limit

Examples:
---------
# Example 1: Using a string input
response = llm.call("Return the name of a random city in the world.")
print(response)

# Example 2: Using a list of messages
messages = [{"role": "user", "content": "What is the capital of France?"}]
response = llm.call(messages)
print(response)
# Example 1: Simple string input
>>> response = llm.call("Return the name of a random city.")
>>> print(response)
"Paris"

# Example 2: Message list with system and user messages
>>> messages = [
... {"role": "system", "content": "You are a geography expert"},
... {"role": "user", "content": "What is France's capital?"}
... ]
>>> response = llm.call(messages)
>>> print(response)
"The capital of France is Paris."
"""
# Validate parameters before proceeding with the call.
self._validate_call_params()
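
`_is_anthropic_model` lowercases the model id and checks whether any entry in `ANTHROPIC_PREFIXES` occurs anywhere in it. Despite the constant's name, this is a substring test (`in`), not a prefix test. The sketch below contrasts it with a hypothetical prefix-only variant. The model ids are illustrative.

```python
ANTHROPIC_PREFIXES = ("anthropic/", "claude-", "claude/")


def is_anthropic_model(model: str) -> bool:
    # As in the hunk: substring match anywhere in the lowercased model id
    return any(prefix in model.lower() for prefix in ANTHROPIC_PREFIXES)


def is_anthropic_model_prefix_only(model: str) -> bool:
    # Hypothetical stricter variant: str.startswith accepts a tuple of prefixes
    return model.lower().startswith(ANTHROPIC_PREFIXES)


for model in ("anthropic/claude-3-opus", "Claude-3-haiku", "gpt-4o", "bedrock/anthropic.claude-3-haiku"):
    print(model, is_anthropic_model(model), is_anthropic_model_prefix_only(model))
```

The substring form also flags ids that reach a Claude model through another provider prefix, such as the illustrative `bedrock/anthropic.claude-3-haiku`. The prefix-only variant does not flag them.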

@@ -233,10 +254,13 @@ class LLM:
self.set_callbacks(callbacks)

try:
# --- 1) Prepare the parameters for the completion call
# --- 1) Format messages according to provider requirements
formatted_messages = self._format_messages_for_provider(messages)

# --- 2) Prepare the parameters for the completion call
params = {
"model": self.model,
"messages": messages,
"messages": formatted_messages,
"timeout": self.timeout,
"temperature": self.temperature,
"top_p": self.top_p,

@@ -324,6 +348,38 @@ class LLM:
logging.error(f"LiteLLM call failed: {str(e)}")
raise

def _format_messages_for_provider(self, messages: List[Dict[str, str]]) -> List[Dict[str, str]]:
"""Format messages according to provider requirements.

Args:
messages: List of message dictionaries with 'role' and 'content' keys.
Can be empty or None.

Returns:
List of formatted messages according to provider requirements.
For Anthropic models, ensures first message has 'user' role.

Raises:
TypeError: If messages is None or contains invalid message format.
"""
if messages is None:
raise TypeError("Messages cannot be None")

# Validate message format first
for msg in messages:
if not isinstance(msg, dict) or "role" not in msg or "content" not in msg:
raise TypeError("Invalid message format. Each message must be a dict with 'role' and 'content' keys")

if not self.is_anthropic:
return messages

# Anthropic requires messages to start with 'user' role
if not messages or messages[0]["role"] == "system":
# If first message is system or empty, add a placeholder user message
return [{"role": "user", "content": "."}, *messages]

return messages

def _get_custom_llm_provider(self) -> str:
"""
Derives the custom_llm_provider from the model string.
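
`_format_messages_for_provider` rejects `None` and malformed messages, and passes messages through unchanged for non-Anthropic models. For Anthropic models, it prepends a placeholder `{"role": "user", "content": "."}` when the list is empty or starts with a `system` message. Below is a standalone sketch that takes `is_anthropic` as a parameter instead of reading `self.is_anthropic`.

```python
from typing import Dict, List, Optional


def format_messages_for_provider(
    messages: Optional[List[Dict[str, str]]], is_anthropic: bool
) -> List[Dict[str, str]]:
    if messages is None:
        raise TypeError("Messages cannot be None")
    # Every message must be a dict carrying both 'role' and 'content'
    for msg in messages:
        if not isinstance(msg, dict) or "role" not in msg or "content" not in msg:
            raise TypeError(
                "Invalid message format. Each message must be a dict with 'role' and 'content' keys"
            )
    if not is_anthropic:
        return messages
    if not messages or messages[0]["role"] == "system":
        # Placeholder user turn, as in the hunk
        return [{"role": "user", "content": "."}, *messages]
    return messages


sys_first = [{"role": "system", "content": "Be brief"}, {"role": "user", "content": "Hi"}]
print(format_messages_for_provider(sys_first, is_anthropic=True)[0])  # {'role': 'user', 'content': '.'}
```

As in the hunk, only an empty list or a leading `system` message triggers the placeholder. A list that starts with an `assistant` message is returned unchanged.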

@@ -1,4 +1,4 @@
from typing import Any, Optional
from typing import Optional

from pydantic import PrivateAttr

@@ -1,9 +1,7 @@
from typing import Any, Dict, List, Optional, Union
from typing import Any, Dict, List, Optional

from pydantic import BaseModel

from crewai.memory.storage.rag_storage import RAGStorage


class Memory(BaseModel):
"""

@@ -674,19 +674,32 @@ class Task(BaseModel):
return OutputFormat.PYDANTIC
return OutputFormat.RAW

def _save_file(self, result: Any) -> None: