Mirror of https://github.com/crewAIInc/crewAI.git, synced 2026-02-21 13:28:14 +00:00.

Compare commits: v0.51.0...feat/cli-i (18 commits)

| SHA1 |
|---|
| 1cd1729a87 |
| 3e9cffe740 |
| ddda8f6bda |
| bf7372fefa |
| 3451b6fc7a |
| dbf2570353 |
| d0707fac91 |
| 35ebdd6022 |
| 92a77e5cac |
| a2922c9ad5 |
| 9f9b52dd26 |
| 2482c7ab68 |
| 7fdabda97e |
| 7306414de7 |
| 97d7bfb52a |
| 9f85a2a011 |
| ab47d276db |
| 44e38b1d5e |

**`.github/ISSUE_TEMPLATE/bug_report.md`** (vendored, 35 lines changed)

`@@ -1,35 +0,0 @@`

```markdown
---
name: Bug report
about: Create a report to help us improve CrewAI
title: "[BUG]"
labels: bug
assignees: ''

---

**Description**
Provide a clear and concise description of what the bug is.

**Steps to Reproduce**
Provide a step-by-step process to reproduce the behavior:

**Expected behavior**
A clear and concise description of what you expected to happen.

**Screenshots/Code snippets**
If applicable, add screenshots or code snippets to help explain your problem.

**Environment Details:**
- **Operating System**: [e.g., Ubuntu 20.04, macOS Catalina, Windows 10]
- **Python Version**: [e.g., 3.8, 3.9, 3.10]
- **crewAI Version**: [e.g., 0.30.11]
- **crewAI Tools Version**: [e.g., 0.2.6]

**Logs**
Include relevant logs or error messages if applicable.

**Possible Solution**
Have a solution in mind? Please suggest it here, or write "None".

**Additional context**
Add any other context about the problem here.
```

**`.github/ISSUE_TEMPLATE/bug_report.yml`** (vendored, normal file, 116 lines changed)

`@@ -0,0 +1,116 @@`

```yaml
name: Bug report
description: Create a report to help us improve CrewAI
title: "[BUG]"
labels: ["bug"]
assignees: []
body:
  - type: textarea
    id: description
    attributes:
      label: Description
      description: Provide a clear and concise description of what the bug is.
    validations:
      required: true
  - type: textarea
    id: steps-to-reproduce
    attributes:
      label: Steps to Reproduce
      description: Provide a step-by-step process to reproduce the behavior.
      placeholder: |
        1. Go to '...'
        2. Click on '....'
        3. Scroll down to '....'
        4. See error
    validations:
      required: true
  - type: textarea
    id: expected-behavior
    attributes:
      label: Expected behavior
      description: A clear and concise description of what you expected to happen.
    validations:
      required: true
  - type: textarea
    id: screenshots-code
    attributes:
      label: Screenshots/Code snippets
      description: If applicable, add screenshots or code snippets to help explain your problem.
    validations:
      required: true
  - type: dropdown
    id: os
    attributes:
      label: Operating System
      description: Select the operating system you're using
      options:
        - Ubuntu 20.04
        - Ubuntu 22.04
        - Ubuntu 24.04
        - macOS Catalina
        - macOS Big Sur
        - macOS Monterey
        - macOS Ventura
        - macOS Sonoma
        - Windows 10
        - Windows 11
        - Other (specify in additional context)
    validations:
      required: true
  - type: dropdown
    id: python-version
    attributes:
      label: Python Version
      description: Version of Python your Crew is running on
      options:
        - '3.10'
        - '3.11'
        - '3.12'
        - '3.13'
    validations:
      required: true
  - type: input
    id: crewai-version
    attributes:
      label: crewAI Version
      description: What version of CrewAI are you using
    validations:
      required: true
  - type: input
    id: crewai-tools-version
    attributes:
      label: crewAI Tools Version
      description: What version of CrewAI Tools are you using
    validations:
      required: true
  - type: dropdown
    id: virtual-environment
    attributes:
      label: Virtual Environment
      description: What Virtual Environment are you running your crew in.
      options:
        - Venv
        - Conda
        - Poetry
    validations:
      required: true
  - type: textarea
    id: evidence
    attributes:
      label: Evidence
      description: Include relevant information, logs or error messages. These can be screenshots.
    validations:
      required: true
  - type: textarea
    id: possible-solution
    attributes:
      label: Possible Solution
      description: Have a solution in mind? Please suggest it here, or write "None".
    validations:
      required: true
  - type: textarea
    id: additional-context
    attributes:
      label: Additional context
      description: Add any other context about the problem here.
    validations:
      required: true
```

**`.github/ISSUE_TEMPLATE/config.yml`** (vendored, normal file, 1 line changed)

`@@ -0,0 +1 @@`

```yaml
blank_issues_enabled: false
```

**`.github/ISSUE_TEMPLATE/custom.md`** (vendored, 24 lines changed)

`@@ -1,24 +0,0 @@`

```markdown
---
name: Custom issue template
about: Describe this issue template's purpose here.
title: "[DOCS]"
labels: documentation
assignees: ''

---

## Documentation Page
<!-- Provide a link to the documentation page that needs improvement -->

## Description
<!-- Describe what needs to be changed or improved in the documentation -->

## Suggested Changes
<!-- If possible, provide specific suggestions for how to improve the documentation -->

## Additional Context
<!-- Add any other context about the documentation issue here -->

## Checklist
- [ ] I have searched the existing issues to make sure this is not a duplicate
- [ ] I have checked the latest version of the documentation to ensure this hasn't been addressed
```

**`.github/ISSUE_TEMPLATE/feature_request.yml`** (vendored, normal file, 65 lines changed)

`@@ -0,0 +1,65 @@`

```yaml
name: Feature request
description: Suggest a new feature for CrewAI
title: "[FEATURE]"
labels: ["feature-request"]
assignees: []
body:
  - type: markdown
    attributes:
      value: |
        Thanks for taking the time to fill out this feature request!
  - type: dropdown
    id: feature-area
    attributes:
      label: Feature Area
      description: Which area of CrewAI does this feature primarily relate to?
      options:
        - Core functionality
        - Agent capabilities
        - Task management
        - Integration with external tools
        - Performance optimization
        - Documentation
        - Other (please specify in additional context)
    validations:
      required: true
  - type: textarea
    id: problem
    attributes:
      label: Is your feature request related to an existing bug? Please link it here.
      description: A link to the bug or NA if not related to an existing bug.
    validations:
      required: true
  - type: textarea
    id: solution
    attributes:
      label: Describe the solution you'd like
      description: A clear and concise description of what you want to happen.
    validations:
      required: true
  - type: textarea
    id: alternatives
    attributes:
      label: Describe alternatives you've considered
      description: A clear and concise description of any alternative solutions or features you've considered.
    validations:
      required: false
  - type: textarea
    id: context
    attributes:
      label: Additional context
      description: Add any other context, screenshots, or examples about the feature request here.
    validations:
      required: false
  - type: dropdown
    id: willingness-to-contribute
    attributes:
      label: Willingness to Contribute
      description: Would you be willing to contribute to the implementation of this feature?
      options:
        - Yes, I'd be happy to submit a pull request
        - I could provide more detailed specifications
        - I can test the feature once it's implemented
        - No, I'm just suggesting the idea
    validations:
      required: true
```

**`.github/workflows/security-checker.yml`** (vendored, normal file, 23 lines changed)

`@@ -0,0 +1,23 @@`

```yaml
name: Security Checker

on: [pull_request]

jobs:
  security-check:
    runs-on: ubuntu-latest

    steps:
    - name: Checkout code
      uses: actions/checkout@v4

    - name: Set up Python
      uses: actions/setup-python@v4
      with:
        python-version: "3.11.9"

    - name: Install dependencies
      run: pip install bandit

    - name: Run Bandit
      run: bandit -c pyproject.toml -r src/ -lll
```
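The last step runs Bandit with `-lll`. This flag tells Bandit to report only HIGH-severity issues: `-l` reports LOW and above, and `-ll` reports MEDIUM and above. The stdlib-only sketch below models that threshold on a list of hypothetical findings. The `Finding` type and the `filter_by_level` helper are illustrative assumptions, not part of Bandit's API.

```python
from dataclasses import dataclass

# Severity ranks in ascending order, mirroring Bandit's LOW/MEDIUM/HIGH levels.
SEVERITY_RANK = {"LOW": 1, "MEDIUM": 2, "HIGH": 3}


@dataclass(frozen=True)
class Finding:
    """Hypothetical stand-in for one Bandit result."""
    test_id: str
    severity: str


def filter_by_level(findings: list[Finding], level_flag: str) -> list[Finding]:
    """Keep findings at or above the threshold implied by '-l', '-ll' or '-lll'."""
    count = level_flag.count("l")
    if level_flag.lstrip("-") != "l" * count or not 1 <= count <= 3:
        raise ValueError(f"unsupported level flag: {level_flag!r}")
    return [f for f in findings if SEVERITY_RANK[f.severity] >= count]


findings = [
    Finding("example-low", "LOW"),
    Finding("example-high", "HIGH"),
    Finding("example-medium", "MEDIUM"),
]
print([f.test_id for f in filter_by_level(findings, "-lll")])  # ['example-high']
```

A higher threshold keeps CI from failing on low-severity noise, at the cost of not surfacing those findings at all.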

`@@ -0,0 +1,129 @@`

# Creating a CrewAI Pipeline Project

Welcome to the comprehensive guide for creating a new CrewAI pipeline project. This guide walks you through creating, customizing, and running a CrewAI pipeline project.

To learn more about CrewAI pipelines, visit the [CrewAI documentation](https://docs.crewai.com/core-concepts/Pipeline/).

## Prerequisites

Before getting started with CrewAI pipelines, make sure that you have installed CrewAI and CrewAI Tools via pip:

```shell
$ pip install crewai crewai-tools
```

The same prerequisites for virtual environments and code editors (IDEs) apply as for regular CrewAI projects.

## Creating a New Pipeline Project

To create a new CrewAI pipeline project, you have two options:

1. For a basic pipeline template:

```shell
$ crewai create pipeline <project_name>
```

2. For a pipeline example that includes a router:

```shell
$ crewai create pipeline --router <project_name>
```

These commands create a new project folder with the following structure:

```
<project_name>/
├── README.md
├── poetry.lock
├── pyproject.toml
├── src/
│   └── <project_name>/
│       ├── __init__.py
│       ├── main.py
│       ├── crews/
│       │   ├── crew1/
│       │   │   ├── crew1.py
│       │   │   └── config/
│       │   │       ├── agents.yaml
│       │   │       └── tasks.yaml
│       │   └── crew2/
│       │       ├── crew2.py
│       │       └── config/
│       │           ├── agents.yaml
│       │           └── tasks.yaml
│       ├── pipelines/
│       │   ├── __init__.py
│       │   ├── pipeline1.py
│       │   └── pipeline2.py
│       └── tools/
│           ├── __init__.py
│           └── custom_tool.py
└── tests/
```

## Customizing Your Pipeline Project

To customize your pipeline project, you can:

1. Modify the crew files in `src/<project_name>/crews/` to define your agents and tasks for each crew.
2. Modify the pipeline files in `src/<project_name>/pipelines/` to define your pipeline structure.
3. Modify `src/<project_name>/main.py` to set up and run your pipelines.
4. Add your environment variables to the `.env` file.

### Example: Defining a Pipeline

Here's an example of how to define a pipeline in `src/<project_name>/pipelines/normal_pipeline.py`:

```python
from crewai import Pipeline
from crewai.project import PipelineBase
from ..crews.normal_crew import NormalCrew


@PipelineBase
class NormalPipeline:
    def __init__(self):
        # Initialize crews
        self.normal_crew = NormalCrew().crew()

    def create_pipeline(self):
        # Each entry in `stages` is one step of the pipeline
        return Pipeline(
            stages=[
                self.normal_crew
            ]
        )

    async def kickoff(self, inputs):
        # Pipeline.kickoff is a coroutine, so it must be awaited
        pipeline = self.create_pipeline()
        results = await pipeline.kickoff(inputs)
        return results
```

### Annotations

The main annotation you'll use for pipelines is `@PipelineBase`. It decorates your pipeline classes, much as `@CrewBase` decorates crew classes.

## Installing Dependencies

Dependencies are managed with Poetry. To install them, run `crewai install` from the project folder:

```shell
$ cd <project_name>
$ crewai install
```

## Running Your Pipeline Project

To run your pipeline project, use the following command:

```shell
$ crewai run
```

This initializes your pipeline and begins task execution as defined in your `main.py` file.

## Deploying Your Pipeline Project

Pipelines can be deployed in the same way as regular CrewAI projects. The easiest way is through [CrewAI+](https://www.crewai.com/crewaiplus), where you can deploy your pipeline in a few clicks.

Remember, when working with pipelines, you're orchestrating multiple crews to work together in sequence or in parallel. This enables more complex workflows and information-processing tasks.
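The following stdlib-only `asyncio` sketch makes the "sequence or parallel" idea concrete by showing one way a list of stages could be executed:

- A plain stage runs after the previous one and receives its output.
- A nested list of stages runs concurrently on the same input.

`run_stages`, the toy stage coroutines and the string-joining merge step are all hypothetical stand-ins for crews. This is not crewAI's `Pipeline` implementation.

```python
import asyncio
from typing import Awaitable, Callable, Union

# A stage takes the previous output and returns a new one (a stand-in for a crew).
Stage = Callable[[str], Awaitable[str]]


async def run_stages(stages: list[Union[Stage, list[Stage]]], data: str) -> list[str]:
    """Run stages in order; a nested list of stages runs concurrently on the same input."""
    outputs = [data]
    for stage in stages:
        if isinstance(stage, list):
            # Parallel stage: every branch receives the same input.
            results = await asyncio.gather(*(branch(data) for branch in stage))
            # Toy merge: join branch outputs into one string for the next stage.
            data = " | ".join(results)
        else:
            data = await stage(data)
        outputs.append(data)
    return outputs


async def research(text: str) -> str:
    await asyncio.sleep(0)  # placeholder for real work
    return f"research({text})"


async def summarize(text: str) -> str:
    await asyncio.sleep(0)
    return f"summary({text})"


async def translate(text: str) -> str:
    await asyncio.sleep(0)
    return f"translation({text})"


if __name__ == "__main__":
    trace = asyncio.run(run_stages([research, [summarize, translate]], "topic"))
    print(trace[-1])  # summary(research(topic)) | translation(research(topic))
```

`asyncio.gather` preserves the order of its arguments, so the merged output is deterministic even though the branches run concurrently.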

````diff
@@ -154,15 +154,15 @@ email_summarizer_task:
 Use the annotations to properly reference the agent and task in the crew.py file.
 
 ### Annotations include:
-* @agent
-* @task
-* @crew
-* @llm
-* @tool
-* @callback
-* @output_json
-* @output_pydantic
-* @cache_handler
+* [@agent](https://github.com/crewAIInc/crewAI/blob/97d7bfb52ad49a9f04db360e1b6612d98c91971e/src/crewai/project/annotations.py#L17)
+* [@task](https://github.com/crewAIInc/crewAI/blob/97d7bfb52ad49a9f04db360e1b6612d98c91971e/src/crewai/project/annotations.py#L4)
+* [@crew](https://github.com/crewAIInc/crewAI/blob/97d7bfb52ad49a9f04db360e1b6612d98c91971e/src/crewai/project/annotations.py#L69)
+* [@llm](https://github.com/crewAIInc/crewAI/blob/97d7bfb52ad49a9f04db360e1b6612d98c91971e/src/crewai/project/annotations.py#L23)
+* [@tool](https://github.com/crewAIInc/crewAI/blob/97d7bfb52ad49a9f04db360e1b6612d98c91971e/src/crewai/project/annotations.py#L39)
+* [@callback](https://github.com/crewAIInc/crewAI/blob/97d7bfb52ad49a9f04db360e1b6612d98c91971e/src/crewai/project/annotations.py#L44)
+* [@output_json](https://github.com/crewAIInc/crewAI/blob/97d7bfb52ad49a9f04db360e1b6612d98c91971e/src/crewai/project/annotations.py#L29)
+* [@output_pydantic](https://github.com/crewAIInc/crewAI/blob/97d7bfb52ad49a9f04db360e1b6612d98c91971e/src/crewai/project/annotations.py#L34)
+* [@cache_handler](https://github.com/crewAIInc/crewAI/blob/97d7bfb52ad49a9f04db360e1b6612d98c91971e/src/crewai/project/annotations.py#L49)
 
 crew.py
 ```py
````
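The annotations listed above are method decorators that let a crew class locate its agents and tasks. The sketch below illustrates that general pattern only: marker decorators tag methods, and a helper collects them in definition order. It is not crewAI's implementation, and the `agent`, `task` and `collect` helpers and the `EmailCrew` class are hypothetical.

```python
from typing import Any, Callable


def agent(func: Callable[..., Any]) -> Callable[..., Any]:
    """Hypothetical marker: tag a method as an agent factory."""
    setattr(func, "_role", "agent")
    return func


def task(func: Callable[..., Any]) -> Callable[..., Any]:
    """Hypothetical marker: tag a method as a task factory."""
    setattr(func, "_role", "task")
    return func


def collect(instance: Any, role: str) -> list[Any]:
    """Call every method tagged with `role`, in class-definition order."""
    results = []
    for name, attr in vars(type(instance)).items():
        if getattr(attr, "_role", None) == role:
            results.append(getattr(instance, name)())
    return results


class EmailCrew:
    @agent
    def summarizer(self) -> str:
        return "email_summarizer agent"

    @task
    def summarize_email(self) -> str:
        return "email_summarizer_task"

    def helper(self) -> str:  # untagged, so collect() ignores it
        return "not collected"


crew = EmailCrew()
print(collect(crew, "agent"))  # ['email_summarizer agent']
print(collect(crew, "task"))   # ['email_summarizer_task']
```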

````diff
@@ -191,8 +191,7 @@ To install the dependencies for your project, you can use Poetry. First, navigat
 
 ```shell
 $ cd my_project
-$ poetry lock
-$ poetry install
+$ crewai install
 ```
 
 This will install the dependencies specified in the `pyproject.toml` file.
````

````diff
@@ -233,11 +232,6 @@ To run your project, use the following command:
 ```shell
 $ crewai run
 ```
-or
-```shell
-$ poetry run my_project
-```
 
 This will initialize your crew of AI agents and begin task execution as defined in your configuration in the `main.py` file.
 
 ### Replay Tasks from Latest Crew Kickoff
````

```diff
@@ -8,13 +8,20 @@ Cutting-edge framework for orchestrating role-playing, autonomous AI agents. By
 <div style="width:25%">
 <h2>Getting Started</h2>
 <ul>
-<li><a href='./getting-started/Installing-CrewAI'>
+<li>
+<a href='./getting-started/Installing-CrewAI'>
 Installing CrewAI
-</a>
+</a>
 </li>
-<li><a href='./getting-started/Start-a-New-CrewAI-Project-Template-Method'>
+<li>
+<a href='./getting-started/Start-a-New-CrewAI-Project-Template-Method'>
 Start a New CrewAI Project: Template Method
-</a>
+</a>
 </li>
+<li>
+<a href='./getting-started/Create-a-New-CrewAI-Pipeline-Template-Method'>
+Create a New CrewAI Pipeline: Template Method
+</a>
+</li>
 </ul>
 </div>
```

````diff
@@ -27,10 +27,10 @@ If needed you can also tweak the parameters of the DALL-E model by passing them
 ```python
 from crewai_tools import DallETool
 
-dalle_tool = DallETool(model: str = "dall-e-3",
-                       size: str = "1024x1024",
-                       quality: str = "standard",
-                       n: int = 1)
+dalle_tool = DallETool(model="dall-e-3",
+                       size="1024x1024",
+                       quality="standard",
+                       n=1)
 
 Agent(
 ...
@@ -38,4 +38,4 @@ Agent(
 )
 ```
 
-The parameter are based on the `client.images.generate` method from the OpenAI API. For more information on the parameters, please refer to the [OpenAI API documentation](https://platform.openai.com/docs/guides/images/introduction?lang=python).
+The parameters are based on the `client.images.generate` method from the OpenAI API. For more information on the parameters, please refer to the [OpenAI API documentation](https://platform.openai.com/docs/guides/images/introduction?lang=python).
````
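These parameters are passed through to OpenAI's `client.images.generate`, so it can help to check them before building the tool. The sketch below is a hypothetical, stdlib-only helper; `build_dalle_kwargs` is not part of `crewai_tools`. Its allowed values reflect OpenAI's DALL-E 3 constraints as we understand them:

- sizes `1024x1024`, `1792x1024` and `1024x1792`
- quality `standard` or `hd`
- `n=1` (one image per request)

Confirm these against the current OpenAI API reference before relying on them.

```python
# Assumed DALL-E 3 constraints; verify against the current OpenAI API reference.
DALLE3_SIZES = {"1024x1024", "1792x1024", "1024x1792"}
DALLE3_QUALITIES = {"standard", "hd"}


def build_dalle_kwargs(
    model: str = "dall-e-3",
    size: str = "1024x1024",
    quality: str = "standard",
    n: int = 1,
) -> dict:
    """Validate DALL-E 3 settings and return them as keyword arguments."""
    if model != "dall-e-3":
        raise ValueError("this sketch only models dall-e-3")
    if size not in DALLE3_SIZES:
        raise ValueError(f"unsupported size: {size}")
    if quality not in DALLE3_QUALITIES:
        raise ValueError(f"unsupported quality: {quality}")
    if n != 1:
        raise ValueError("dall-e-3 generates one image per request (n=1)")
    return {"model": model, "size": size, "quality": quality, "n": n}


kwargs = build_dalle_kwargs(size="1792x1024", quality="hd")
print(kwargs)
# The dict could then be unpacked into the tool, e.g. DallETool(**kwargs).
```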

**`docs/tools/FileWriteTool.md`** (normal file, 33 lines changed)

`@@ -0,0 +1,33 @@`

# FileWriterTool Documentation

## Description
The `FileWriterTool` is a component of the crewai_tools package, designed to simplify writing content to files. It is particularly useful for generating reports, saving logs, creating configuration files, and similar tasks. The tool creates the target directory if it doesn't exist, making it easier to organize your output.

## Installation
Install the crewai_tools package to use the `FileWriterTool` in your projects:

```shell
pip install 'crewai[tools]'
```

## Example
To get started with the `FileWriterTool`:

```python
from crewai_tools import FileWriterTool

# Initialize the tool
file_writer_tool = FileWriterTool()

# Write content to a file in a specified directory (arguments are passed by keyword)
result = file_writer_tool._run(
    filename='example.txt',
    content='This is a test content.',
    directory='test_directory',
)
print(result)
```

## Arguments
- `filename`: The name of the file you want to create or overwrite.
- `content`: The content to write into the file.
- `directory` (optional): The path to the directory where the file will be created. Defaults to the current directory (`.`). If the directory does not exist, it will be created.
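The stdlib-only sketch below illustrates the behavior described above: it creates the directory if needed, then creates or overwrites the file. `write_file` is a hypothetical helper that mirrors the documented arguments. It is not the tool's actual implementation, and the real tool's return value may differ.

```python
import os
import tempfile


def write_file(filename: str, content: str, directory: str = ".") -> str:
    """Create `directory` if missing, then create or overwrite `filename` inside it."""
    os.makedirs(directory, exist_ok=True)
    path = os.path.join(directory, filename)
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(content)
    return path


with tempfile.TemporaryDirectory() as tmp:
    target_dir = os.path.join(tmp, "reports", "2024")  # nested and not yet created
    path = write_file("example.txt", "This is a test content.", target_dir)
    with open(path, encoding="utf-8") as handle:
        print(handle.read())  # This is a test content.
```

Opening with mode `"w"` is what gives the "create or overwrite" semantics. Appending would need mode `"a"` instead.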

## Conclusion

Integrating the `FileWriterTool` into your crews lets agents write content to files and create directories as needed. This is useful for tasks that save output data or build structured file systems. Following the setup and usage steps above makes adding it to a project straightforward.

**`docs/tools/FirecrawlCrawlWebsiteTool.md`** (normal file, 42 lines changed)

`@@ -0,0 +1,42 @@`

# FirecrawlCrawlWebsiteTool

## Description

[Firecrawl](https://firecrawl.dev) is a platform for crawling any website and converting it into clean markdown or structured data.

## Installation

- Get an API key from [firecrawl.dev](https://firecrawl.dev) and set it in the `FIRECRAWL_API_KEY` environment variable.
- Install the [Firecrawl SDK](https://github.com/mendableai/firecrawl) along with the `crewai[tools]` package:

```
pip install firecrawl-py 'crewai[tools]'
```

## Example

Use the `FirecrawlCrawlWebsiteTool` as follows to let your agent crawl websites:

```python
from crewai_tools import FirecrawlCrawlWebsiteTool

tool = FirecrawlCrawlWebsiteTool(url='firecrawl.dev')
```

## Arguments

- `api_key`: Optional. Specifies the Firecrawl API key. Defaults to the `FIRECRAWL_API_KEY` environment variable.
- `url`: The base URL to start crawling from.
- `page_options`: Optional.
  - `onlyMainContent`: Optional. Only return the main content of the page, excluding headers, navs, footers, etc.
  - `includeHtml`: Optional. Include the raw HTML content of the page. Will output an `html` key in the response.
- `crawler_options`: Optional. Options for controlling the crawling behavior.
  - `includes`: Optional. URL patterns to include in the crawl.
  - `exclude`: Optional. URL patterns to exclude from the crawl.
  - `generateImgAltText`: Optional. Generate alt text for images using LLMs (requires a paid plan).
  - `returnOnlyUrls`: Optional. If true, returns only the URLs as a list in the crawl status. Note: the response will be a list of URLs inside the data, not a list of documents.
  - `maxDepth`: Optional. Maximum depth to crawl. Depth 1 is the base URL, depth 2 includes the base URL and its direct children, and so on.
  - `mode`: Optional. The crawling mode to use. Fast mode crawls 4x faster on websites without a sitemap, but it may be less accurate and shouldn't be used on heavily JavaScript-rendered websites.
  - `limit`: Optional. Maximum number of pages to crawl.
  - `timeout`: Optional. Timeout in milliseconds for the crawling operation.
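The `maxDepth` and `limit` options behave the way a breadth-first crawl suggests:

- Depth 1 is the base URL alone.
- Depth 2 adds its direct children.
- Crawling stops once `limit` pages have been collected.

The sketch below models these documented semantics over a hypothetical in-memory link graph, with glob-style include and exclude patterns. It does not call Firecrawl. `crawl_order` and the use of `fnmatch` for pattern matching are assumptions for illustration; Firecrawl's own pattern syntax may differ.

```python
from collections import deque
from fnmatch import fnmatchcase


def crawl_order(
    links: dict[str, list[str]],
    base: str,
    max_depth: int,
    limit: int,
    includes: tuple[str, ...] = ("*",),
    excludes: tuple[str, ...] = (),
) -> list[str]:
    """Breadth-first visit order; depth 1 is the base URL itself."""

    def allowed(url: str) -> bool:
        return any(fnmatchcase(url, p) for p in includes) and not any(
            fnmatchcase(url, p) for p in excludes
        )

    visited: list[str] = []
    seen = {base}
    queue = deque([(base, 1)])
    while queue and len(visited) < limit:
        url, depth = queue.popleft()
        visited.append(url)
        if depth == max_depth:
            continue  # following links would exceed maxDepth
        for child in links.get(url, []):
            if child not in seen and allowed(child):
                seen.add(child)
                queue.append((child, depth + 1))
    return visited


site = {
    "/": ["/blog", "/docs", "/admin"],
    "/blog": ["/blog/post-1"],
    "/docs": ["/docs/api"],
}
print(crawl_order(site, "/", max_depth=2, limit=10, excludes=("/admin*",)))
# ['/', '/blog', '/docs']
```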

**`docs/tools/FirecrawlScrapeWebsiteTool.md`** (normal file, 38 lines changed)

`@@ -0,0 +1,38 @@`

# FirecrawlScrapeWebsiteTool

## Description

[Firecrawl](https://firecrawl.dev) is a platform for crawling any website and converting it into clean markdown or structured data.

## Installation

- Get an API key from [firecrawl.dev](https://firecrawl.dev) and set it in the `FIRECRAWL_API_KEY` environment variable.
- Install the [Firecrawl SDK](https://github.com/mendableai/firecrawl) along with the `crewai[tools]` package:

```
pip install firecrawl-py 'crewai[tools]'
```

## Example

Use the `FirecrawlScrapeWebsiteTool` as follows to let your agent load websites:

```python
from crewai_tools import FirecrawlScrapeWebsiteTool

tool = FirecrawlScrapeWebsiteTool(url='firecrawl.dev')
```

## Arguments

- `api_key`: Optional. Specifies the Firecrawl API key. Defaults to the `FIRECRAWL_API_KEY` environment variable.
- `url`: The URL to scrape.
- `page_options`: Optional.
  - `onlyMainContent`: Optional. Only return the main content of the page, excluding headers, navs, footers, etc.
  - `includeHtml`: Optional. Include the raw HTML content of the page. Will output an `html` key in the response.
- `extractor_options`: Optional. Options for LLM-based extraction of structured information from the page content.
  - `mode`: The extraction mode to use; currently supports `llm-extraction`.
  - `extractionPrompt`: Optional. A prompt describing what information to extract from the page.
  - `extractionSchema`: Optional. The schema for the data to be extracted.
- `timeout`: Optional. Timeout in milliseconds for the request.

**`docs/tools/FirecrawlSearchTool.md`** (normal file, 35 lines changed)

`@@ -0,0 +1,35 @@`

# FirecrawlSearchTool

## Description

[Firecrawl](https://firecrawl.dev) is a platform for crawling any website and converting it into clean markdown or structured data.

## Installation

- Get an API key from [firecrawl.dev](https://firecrawl.dev) and set it in the `FIRECRAWL_API_KEY` environment variable.
- Install the [Firecrawl SDK](https://github.com/mendableai/firecrawl) along with the `crewai[tools]` package:

```
pip install firecrawl-py 'crewai[tools]'
```

## Example

Use the `FirecrawlSearchTool` as follows to let your agent search the web:

```python
from crewai_tools import FirecrawlSearchTool

tool = FirecrawlSearchTool(query='what is firecrawl?')
```

## Arguments

- `api_key`: Optional. Specifies the Firecrawl API key. Defaults to the `FIRECRAWL_API_KEY` environment variable.
- `query`: The search query string to be used for searching.
- `page_options`: Optional. Options for result formatting.
  - `onlyMainContent`: Optional. Only return the main content of the page, excluding headers, navs, footers, etc.
  - `includeHtml`: Optional. Include the raw HTML content of the page. Will output an `html` key in the response.
  - `fetchPageContent`: Optional. Fetch the full content of the page.
- `search_options`: Optional. Options for controlling the crawling behavior.
  - `limit`: Optional. Maximum number of pages to crawl.

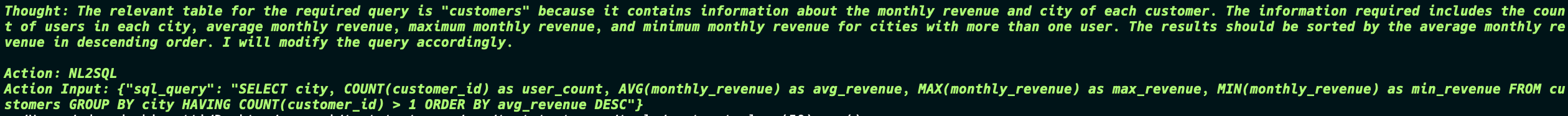

@@ -47,8 +47,8 @@ The primary task goal was:

|

||||

|

||||

So the Agent tried to get information from the DB, the first one is wrong so the Agent tries again and gets the correct information and passes to the next agent.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

The second task goal was:

|

||||

@@ -58,11 +58,11 @@ Include information on the average, maximum, and minimum monthly revenue for eac

|

||||

|

||||

Now things start to get interesting, the Agent generates the SQL query to not only create the table but also insert the data into the table. And in the end the Agent still returns the final report which is exactly what was in the database.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

This is a simple example of how the NL2SQLTool can be used to interact with the database and generate reports based on the data in the database.

|

||||

|

||||
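The second task asks for the average, maximum, and minimum monthly revenue. The sketch below shows a query of the kind the Agent might generate, using Python's built-in `sqlite3`. The `monthly_revenue` table, its `region` grouping column and the sample rows are all hypothetical. They are not the data used in this walkthrough.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE monthly_revenue (region TEXT, month TEXT, revenue REAL)")
conn.executemany(
    "INSERT INTO monthly_revenue VALUES (?, ?, ?)",
    [
        ("North", "2024-01", 100.0),
        ("North", "2024-02", 300.0),
        ("South", "2024-01", 50.0),
        ("South", "2024-02", 150.0),
    ],
)

# One row per region with its average, maximum, and minimum monthly revenue.
rows = conn.execute(
    """
    SELECT region, AVG(revenue), MAX(revenue), MIN(revenue)
    FROM monthly_revenue
    GROUP BY region
    ORDER BY region
    """
).fetchall()

for region, avg_rev, max_rev, min_rev in rows:
    print(f"{region}: avg={avg_rev:.1f} max={max_rev:.1f} min={min_rev:.1f}")
# North: avg=200.0 max=300.0 min=100.0
# South: avg=100.0 max=150.0 min=50.0
conn.close()
```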

**`mkdocs.yml`** (37 lines changed)

```diff
@@ -129,6 +129,7 @@ nav:
     - Processes: 'core-concepts/Processes.md'
     - Crews: 'core-concepts/Crews.md'
     - Collaboration: 'core-concepts/Collaboration.md'
+    - Pipeline: 'core-concepts/Pipeline.md'
     - Training: 'core-concepts/Training-Crew.md'
     - Memory: 'core-concepts/Memory.md'
     - Planning: 'core-concepts/Planning.md'
@@ -152,33 +153,37 @@ nav:
     - Agent Monitoring with AgentOps: 'how-to/AgentOps-Observability.md'
     - Agent Monitoring with LangTrace: 'how-to/Langtrace-Observability.md'
   - Tools Docs:
-    - Google Serper Search: 'tools/SerperDevTool.md'
     - Browserbase Web Loader: 'tools/BrowserbaseLoadTool.md'
-    - Composio Tools: 'tools/ComposioTool.md'
+    - Code Docs RAG Search: 'tools/CodeDocsSearchTool.md'
     - Code Interpreter: 'tools/CodeInterpreterTool.md'
-    - Scrape Website: 'tools/ScrapeWebsiteTool.md'
-    - Directory Read: 'tools/DirectoryReadTool.md'
-    - Exa Serch Web Loader: 'tools/EXASearchTool.md'
-    - File Read: 'tools/FileReadTool.md'
-    - Selenium Scraper: 'tools/SeleniumScrapingTool.md'
-    - Directory RAG Search: 'tools/DirectorySearchTool.md'
-    - DALL-E Tool: 'tools/DALL-ETool.md'
-    - PDF RAG Search: 'tools/PDFSearchTool.md'
-    - TXT RAG Search: 'tools/TXTSearchTool.md'
+    - Composio Tools: 'tools/ComposioTool.md'
     - CSV RAG Search: 'tools/CSVSearchTool.md'
-    - XML RAG Search: 'tools/XMLSearchTool.md'
-    - JSON RAG Search: 'tools/JSONSearchTool.md'
+    - DALL-E Tool: 'tools/DALL-ETool.md'
+    - Directory RAG Search: 'tools/DirectorySearchTool.md'
+    - Directory Read: 'tools/DirectoryReadTool.md'
     - Docx Rag Search: 'tools/DOCXSearchTool.md'
+    - EXA Search Web Loader: 'tools/EXASearchTool.md'
+    - File Read: 'tools/FileReadTool.md'
+    - File Write: 'tools/FileWriteTool.md'
+    - Firecrawl Crawl Website Tool: 'tools/FirecrawlCrawlWebsiteTool.md'
+    - Firecrawl Scrape Website Tool: 'tools/FirecrawlScrapeWebsiteTool.md'
+    - Firecrawl Search Tool: 'tools/FirecrawlSearchTool.md'
+    - Github RAG Search: 'tools/GitHubSearchTool.md'
+    - Google Serper Search: 'tools/SerperDevTool.md'
+    - JSON RAG Search: 'tools/JSONSearchTool.md'
     - MDX RAG Search: 'tools/MDXSearchTool.md'
     - MySQL Tool: 'tools/MySQLTool.md'
     - NL2SQL Tool: 'tools/NL2SQLTool.md'
+    - PDF RAG Search: 'tools/PDFSearchTool.md'
     - PG RAG Search: 'tools/PGSearchTool.md'
+    - Scrape Website: 'tools/ScrapeWebsiteTool.md'
+    - Selenium Scraper: 'tools/SeleniumScrapingTool.md'
+    - TXT RAG Search: 'tools/TXTSearchTool.md'
     - Vision Tool: 'tools/VisionTool.md'
     - Website RAG Search: 'tools/WebsiteSearchTool.md'
-    - Github RAG Search: 'tools/GitHubSearchTool.md'
-    - Code Docs RAG Search: 'tools/CodeDocsSearchTool.md'
-    - Youtube Video RAG Search: 'tools/YoutubeVideoSearchTool.md'
+    - XML RAG Search: 'tools/XMLSearchTool.md'
     - Youtube Channel RAG Search: 'tools/YoutubeChannelSearchTool.md'
+    - Youtube Video RAG Search: 'tools/YoutubeVideoSearchTool.md'
   - Examples:
     - Trip Planner Crew: https://github.com/joaomdmoura/crewAI-examples/tree/main/trip_planner
     - Create Instagram Post: https://github.com/joaomdmoura/crewAI-examples/tree/main/instagram_post
```

61

poetry.lock

generated

@@ -1,4 +1,4 @@
# This file is automatically @generated by Poetry 1.7.1 and should not be changed by hand.
# This file is automatically @generated by Poetry 1.8.3 and should not be changed by hand.

[[package]]
name = "agentops"

@@ -829,29 +829,27 @@ name = "crewai-tools"
version = "0.8.3"
description = "Set of tools for the crewAI framework"
optional = false
python-versions = ">=3.10,<=3.13"
files = []
develop = false
python-versions = "<=3.13,>=3.10"
files = [
{file = "crewai_tools-0.8.3-py3-none-any.whl", hash = "sha256:a54a10c36b8403250e13d6594bd37db7e7deb3f9fabc77e8720c081864ae6189"},
{file = "crewai_tools-0.8.3.tar.gz", hash = "sha256:f0317ea1d926221b22fcf4b816d71916fe870aa66ed7ee2a0067dba42b5634eb"},
]

[package.dependencies]
beautifulsoup4 = "^4.12.3"
chromadb = "^0.4.22"
docker = "^7.1.0"
docx2txt = "^0.8"
embedchain = "^0.1.114"
lancedb = "^0.5.4"
beautifulsoup4 = ">=4.12.3,<5.0.0"
chromadb = ">=0.4.22,<0.5.0"
docker = ">=7.1.0,<8.0.0"
docx2txt = ">=0.8,<0.9"
embedchain = ">=0.1.114,<0.2.0"
lancedb = ">=0.5.4,<0.6.0"
langchain = ">0.2,<=0.3"
openai = "^1.12.0"
pydantic = "^2.6.1"
pyright = "^1.1.350"
pytest = "^8.0.0"
pytube = "^15.0.0"
requests = "^2.31.0"
selenium = "^4.18.1"

[package.source]
type = "directory"
url = "../crewai-tools"
openai = ">=1.12.0,<2.0.0"
pydantic = ">=2.6.1,<3.0.0"
pyright = ">=1.1.350,<2.0.0"
pytest = ">=8.0.0,<9.0.0"
pytube = ">=15.0.0,<16.0.0"
requests = ">=2.31.0,<3.0.0"
selenium = ">=4.18.1,<5.0.0"

[[package]]
name = "cssselect2"

@@ -1321,12 +1319,12 @@ files = [
google-auth = ">=2.14.1,<3.0.dev0"
googleapis-common-protos = ">=1.56.2,<2.0.dev0"
grpcio = [
{version = ">=1.49.1,<2.0dev", optional = true, markers = "python_version >= \"3.11\" and extra == \"grpc\""},
{version = ">=1.33.2,<2.0dev", optional = true, markers = "python_version < \"3.11\" and extra == \"grpc\""},
{version = ">=1.49.1,<2.0dev", optional = true, markers = "python_version >= \"3.11\" and extra == \"grpc\""},
]
grpcio-status = [
{version = ">=1.49.1,<2.0.dev0", optional = true, markers = "python_version >= \"3.11\" and extra == \"grpc\""},
{version = ">=1.33.2,<2.0.dev0", optional = true, markers = "python_version < \"3.11\" and extra == \"grpc\""},
{version = ">=1.49.1,<2.0.dev0", optional = true, markers = "python_version >= \"3.11\" and extra == \"grpc\""},
]
proto-plus = ">=1.22.3,<2.0.0dev"
protobuf = ">=3.19.5,<3.20.0 || >3.20.0,<3.20.1 || >3.20.1,<4.21.0 || >4.21.0,<4.21.1 || >4.21.1,<4.21.2 || >4.21.2,<4.21.3 || >4.21.3,<4.21.4 || >4.21.4,<4.21.5 || >4.21.5,<6.0.0.dev0"

@@ -3628,8 +3626,8 @@ files = [

[package.dependencies]
numpy = [
{version = ">=1.23.2", markers = "python_version == \"3.11\""},
{version = ">=1.22.4", markers = "python_version < \"3.11\""},
{version = ">=1.23.2", markers = "python_version == \"3.11\""},
{version = ">=1.26.0", markers = "python_version >= \"3.12\""},
]
python-dateutil = ">=2.8.2"

@@ -4027,6 +4025,19 @@ files = [
{file = "pyarrow-17.0.0-cp312-cp312-win_amd64.whl", hash = "sha256:392bc9feabc647338e6c89267635e111d71edad5fcffba204425a7c8d13610d7"},
{file = "pyarrow-17.0.0-cp38-cp38-macosx_10_15_x86_64.whl", hash = "sha256:af5ff82a04b2171415f1410cff7ebb79861afc5dae50be73ce06d6e870615204"},
{file = "pyarrow-17.0.0-cp38-cp38-macosx_11_0_arm64.whl", hash = "sha256:edca18eaca89cd6382dfbcff3dd2d87633433043650c07375d095cd3517561d8"},
{file = "pyarrow-17.0.0-cp38-cp38-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:7c7916bff914ac5d4a8fe25b7a25e432ff921e72f6f2b7547d1e325c1ad9d155"},
{file = "pyarrow-17.0.0-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:f553ca691b9e94b202ff741bdd40f6ccb70cdd5fbf65c187af132f1317de6145"},
{file = "pyarrow-17.0.0-cp38-cp38-manylinux_2_28_aarch64.whl", hash = "sha256:0cdb0e627c86c373205a2f94a510ac4376fdc523f8bb36beab2e7f204416163c"},
{file = "pyarrow-17.0.0-cp38-cp38-manylinux_2_28_x86_64.whl", hash = "sha256:d7d192305d9d8bc9082d10f361fc70a73590a4c65cf31c3e6926cd72b76bc35c"},
{file = "pyarrow-17.0.0-cp38-cp38-win_amd64.whl", hash = "sha256:02dae06ce212d8b3244dd3e7d12d9c4d3046945a5933d28026598e9dbbda1fca"},
{file = "pyarrow-17.0.0-cp39-cp39-macosx_10_15_x86_64.whl", hash = "sha256:13d7a460b412f31e4c0efa1148e1d29bdf18ad1411eb6757d38f8fbdcc8645fb"},
{file = "pyarrow-17.0.0-cp39-cp39-macosx_11_0_arm64.whl", hash = "sha256:9b564a51fbccfab5a04a80453e5ac6c9954a9c5ef2890d1bcf63741909c3f8df"},
{file = "pyarrow-17.0.0-cp39-cp39-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:32503827abbc5aadedfa235f5ece8c4f8f8b0a3cf01066bc8d29de7539532687"},
{file = "pyarrow-17.0.0-cp39-cp39-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:a155acc7f154b9ffcc85497509bcd0d43efb80d6f733b0dc3bb14e281f131c8b"},
{file = "pyarrow-17.0.0-cp39-cp39-manylinux_2_28_aarch64.whl", hash = "sha256:dec8d129254d0188a49f8a1fc99e0560dc1b85f60af729f47de4046015f9b0a5"},
{file = "pyarrow-17.0.0-cp39-cp39-manylinux_2_28_x86_64.whl", hash = "sha256:a48ddf5c3c6a6c505904545c25a4ae13646ae1f8ba703c4df4a1bfe4f4006bda"},
{file = "pyarrow-17.0.0-cp39-cp39-win_amd64.whl", hash = "sha256:42bf93249a083aca230ba7e2786c5f673507fa97bbd9725a1e2754715151a204"},
{file = "pyarrow-17.0.0.tar.gz", hash = "sha256:4beca9521ed2c0921c1023e68d097d0299b62c362639ea315572a58f3f50fd28"},
]

[package.dependencies]

@@ -6062,4 +6073,4 @@ tools = ["crewai-tools"]
[metadata]
lock-version = "2.0"
python-versions = ">=3.10,<=3.13"
content-hash = "fc1b510ea9c814db67ac69d2454071b718cb7f6846bd845f7f48561cb0397ce1"
content-hash = "91ba982ea96ca7be017d536784223d4ef83e86de05d11eb1c3ce0fc1b726f283"

@@ -1,6 +1,6 @@
[tool.poetry]
name = "crewai"
version = "0.51.0"
version = "0.51.1"
description = "Cutting-edge framework for orchestrating role-playing, autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks."
authors = ["Joao Moura <joao@crewai.com>"]
readme = "README.md"

@@ -62,6 +62,9 @@ ignore_missing_imports = true
disable_error_code = 'import-untyped'
exclude = ["cli/templates"]

[tool.bandit]
exclude_dirs = ["src/crewai/cli/templates"]

[build-system]
requires = ["poetry-core"]
build-backend = "poetry.core.masonry.api"

@@ -113,10 +113,11 @@ class Agent(BaseAgent):
description="Maximum number of retries for an agent to execute a task when an error occurs.",
)

def __init__(__pydantic_self__, **data):
config = data.pop("config", {})
super().__init__(**config, **data)
__pydantic_self__.agent_ops_agent_name = __pydantic_self__.role
@model_validator(mode="after")
def set_agent_ops_agent_name(self) -> "Agent":
"""Set agent ops agent name."""
self.agent_ops_agent_name = self.role
return self

@model_validator(mode="after")
def set_agent_executor(self) -> "Agent":

@@ -213,7 +214,7 @@ class Agent(BaseAgent):
raise e
result = self.execute_task(task, context, tools)

if self.max_rpm:
if self.max_rpm and self._rpm_controller:
self._rpm_controller.stop_rpm_counter()

# If there was any tool in self.tools_results that had result_as_answer
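The guard around `stop_rpm_counter()` now also requires a controller, which matches `_rpm_controller` being annotated `Optional[RPMController]` with a `None` default in `base_agent.py`. With only `if self.max_rpm:`, an agent that has `max_rpm` set but no controller attached could call a method on `None`. A minimal stdlib sketch of the difference; `RPMController` and `AgentSketch` here are simplified, hypothetical stand-ins, not crewAI's classes:

```python
from typing import Optional


class RPMController:
    """Hypothetical stand-in: only tracks whether the counter is running."""

    def __init__(self) -> None:
        self.running = True

    def stop_rpm_counter(self) -> None:
        self.running = False


class AgentSketch:
    """Hypothetical agent with an optional RPM controller."""

    def __init__(
        self, max_rpm: Optional[int] = None, controller: Optional[RPMController] = None
    ) -> None:
        self.max_rpm = max_rpm
        # May stay None even when max_rpm is set.
        self._rpm_controller = controller

    def finish(self) -> None:
        # Checking both values avoids
        # AttributeError: 'NoneType' object has no attribute 'stop_rpm_counter'.
        if self.max_rpm and self._rpm_controller:
            self._rpm_controller.stop_rpm_counter()
```

With the single `max_rpm` check, `AgentSketch(max_rpm=10).finish()` would raise `AttributeError`; with the combined check it is a no-op.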

@@ -7,7 +7,6 @@ from typing import Any, Dict, List, Optional, TypeVar
from pydantic import (
UUID4,
BaseModel,
ConfigDict,
Field,
InstanceOf,
PrivateAttr,

@@ -74,12 +73,17 @@ class BaseAgent(ABC, BaseModel):
"""

__hash__ = object.__hash__ # type: ignore
_logger: Logger = PrivateAttr()
_rpm_controller: RPMController = PrivateAttr(default=None)
_logger: Logger = PrivateAttr(default_factory=lambda: Logger(verbose=False))
_rpm_controller: Optional[RPMController] = PrivateAttr(default=None)
_request_within_rpm_limit: Any = PrivateAttr(default=None)
formatting_errors: int = 0
model_config = ConfigDict(arbitrary_types_allowed=True)
_original_role: Optional[str] = PrivateAttr(default=None)
_original_goal: Optional[str] = PrivateAttr(default=None)
_original_backstory: Optional[str] = PrivateAttr(default=None)
_token_process: TokenProcess = PrivateAttr(default_factory=TokenProcess)
id: UUID4 = Field(default_factory=uuid.uuid4, frozen=True)
formatting_errors: int = Field(
default=0, description="Number of formatting errors."
)
role: str = Field(description="Role of the agent")
goal: str = Field(description="Objective of the agent")
backstory: str = Field(description="Backstory of the agent")

@@ -123,15 +127,6 @@ class BaseAgent(ABC, BaseModel):
default=None, description="Maximum number of tokens for the agent's execution."
)

_original_role: str | None = None
_original_goal: str | None = None
_original_backstory: str | None = None
_token_process: TokenProcess = TokenProcess()

def __init__(__pydantic_self__, **data):
config = data.pop("config", {})
super().__init__(**config, **data)

@model_validator(mode="after")
def set_config_attributes(self):
if self.config:

@@ -170,7 +165,7 @@ class BaseAgent(ABC, BaseModel):
@property
def key(self):
source = [self.role, self.goal, self.backstory]
return md5("|".join(source).encode()).hexdigest()
return md5("|".join(source).encode(), usedforsecurity=False).hexdigest()

@abstractmethod
def execute_task(
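The `key` property now passes `usedforsecurity=False` to `md5`. Since Python 3.9 every `hashlib` constructor accepts that keyword; it marks the hash as non-cryptographic, which can let MD5 run on FIPS-restricted OpenSSL builds, and Bandit's insecure-hash check does not flag calls that pass it (the diff also adds a `[tool.bandit]` section). A standalone sketch of the same key computation; `agent_key` is a hypothetical helper, not part of crewAI:

```python
import hashlib
from typing import List


def agent_key(role: str, goal: str, backstory: str) -> str:
    """Hypothetical helper mirroring BaseAgent.key: MD5 of 'role|goal|backstory'."""
    source: List[str] = [role, goal, backstory]
    # usedforsecurity=False documents that this digest is an identifier,
    # not a security primitive; the resulting hex digest is unchanged.
    return hashlib.md5("|".join(source).encode(), usedforsecurity=False).hexdigest()
```

The flag does not change the digest itself, so keys computed before and after the change should match.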

11

src/crewai/agents/cache/cache_handler.py

vendored

@@ -1,13 +1,12 @@
from typing import Optional
from typing import Any, Dict, Optional

from pydantic import BaseModel, PrivateAttr


class CacheHandler:
class CacheHandler(BaseModel):
"""Callback handler for tool usage."""

_cache: dict = {}

def __init__(self):
self._cache = {}
_cache: Dict[str, Any] = PrivateAttr(default_factory=dict)

def add(self, tool, input, output):
self._cache[f"{tool}-{input}"] = output
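`CacheHandler` becomes a pydantic `BaseModel`, and its per-instance dict moves from an explicit `__init__` to `PrivateAttr(default_factory=dict)`. The factory guarantees every instance gets a fresh dict instead of sharing one mutable class-level object. The sketch below shows the same distinction with stdlib `dataclasses` rather than pydantic (an analogy, not crewAI's code); `SharedCache`, `PerInstanceCache` and their `read` methods are hypothetical:

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional


class SharedCache:
    # One dict created at class-definition time; every instance that does
    # not assign its own in __init__ reads and writes this same object.
    _cache: Dict[str, Any] = {}

    def add(self, tool: str, tool_input: str, output: Any) -> None:
        self._cache[f"{tool}-{tool_input}"] = output

    def read(self, tool: str, tool_input: str) -> Optional[Any]:
        return self._cache.get(f"{tool}-{tool_input}")


@dataclass
class PerInstanceCache:
    # default_factory calls dict() for each new instance, the same idea as
    # PrivateAttr(default_factory=dict) on a pydantic model.
    _cache: Dict[str, Any] = field(default_factory=dict, repr=False)

    def add(self, tool: str, tool_input: str, output: Any) -> None:
        self._cache[f"{tool}-{tool_input}"] = output

    def read(self, tool: str, tool_input: str) -> Optional[Any]:
        return self._cache.get(f"{tool}-{tool_input}")
```

`dataclasses` refuses a bare `= {}` default outright, which is why the factory form is the idiomatic way to declare a per-instance container.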

@@ -1,33 +1,29 @@
import threading
import time
from typing import Any, Dict, Iterator, List, Literal, Optional, Tuple, Union

import click


from langchain.agents import AgentExecutor
from langchain.agents.agent import ExceptionTool
from langchain.callbacks.manager import CallbackManagerForChainRun
from langchain.chains.summarize import load_summarize_chain
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain_core.agents import AgentAction, AgentFinish, AgentStep
from langchain_core.exceptions import OutputParserException
from langchain_core.tools import BaseTool
from langchain_core.utils.input import get_color_mapping
from pydantic import InstanceOf

from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain.chains.summarize import load_summarize_chain

from crewai.agents.agent_builder.base_agent_executor_mixin import CrewAgentExecutorMixin
from crewai.agents.tools_handler import ToolsHandler


from crewai.tools.tool_usage import ToolUsage, ToolUsageErrorException
from crewai.utilities import I18N
from crewai.utilities.constants import TRAINING_DATA_FILE
from crewai.utilities.exceptions.context_window_exceeding_exception import (
LLMContextLengthExceededException,
)
from crewai.utilities.training_handler import CrewTrainingHandler
from crewai.utilities.logger import Logger
from crewai.utilities.training_handler import CrewTrainingHandler


class CrewAgentExecutor(AgentExecutor, CrewAgentExecutorMixin):

@@ -213,11 +209,7 @@ class CrewAgentExecutor(AgentExecutor, CrewAgentExecutorMixin):
yield step
return

yield AgentStep(
action=AgentAction("_Exception", str(e), str(e)),
observation=str(e),
)
return
raise e

# If the tool chosen is the finishing tool, then we end and return.
if isinstance(output, AgentFinish):

@@ -8,6 +8,7 @@ from crewai.memory.storage.kickoff_task_outputs_storage import (
)

from .evaluate_crew import evaluate_crew
from .install_crew import install_crew
from .replay_from_task import replay_task_command
from .reset_memories_command import reset_memories_command
from .run_crew import run_crew

@@ -165,10 +166,16 @@ def test(n_iterations: int, model: str):
evaluate_crew(n_iterations, model)


@crewai.command()
def install():
"""Install the Crew."""
install_crew()


@crewai.command()
def run():
"""Run the crew."""
click.echo("Running the crew")
"""Run the Crew."""
click.echo("Running the Crew")
run_crew()

21

src/crewai/cli/install_crew.py

Normal file

@@ -0,0 +1,21 @@
import subprocess

import click


def install_crew() -> None:
    """
    Install the crew by running the Poetry command to lock and install.
    """
    try:
        subprocess.run(["poetry", "lock"], check=True, capture_output=False, text=True)
        subprocess.run(
            ["poetry", "install"], check=True, capture_output=False, text=True
        )

    except subprocess.CalledProcessError as e:
        click.echo(f"An error occurred while running the crew: {e}", err=True)
        click.echo(e.output, err=True)

    except Exception as e:
        click.echo(f"An unexpected error occurred: {e}", err=True)
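`install_crew()` runs `poetry lock` and then `poetry install`, and `check=True` makes the first non-zero exit raise `CalledProcessError`, so the second command never runs after a failed lock. Because output is not captured (`capture_output=False`), the exception's `output` attribute is `None`, so `click.echo(e.output, err=True)` has no captured text to show. A stdlib-only sketch of the same sequence that reports the exit code instead and also handles a missing executable; `run_steps` is a hypothetical helper, not crewAI's API:

```python
import subprocess
import sys
from typing import List, Sequence


def run_steps(steps: Sequence[List[str]]) -> bool:
    """Run each command in order; stop and return False at the first failure."""
    for cmd in steps:
        try:
            # check=True turns a non-zero exit status into CalledProcessError.
            subprocess.run(cmd, check=True)
        except subprocess.CalledProcessError as e:
            # Output was not captured, so e.output is None; report the code.
            print(f"{cmd[0]} exited with code {e.returncode}", file=sys.stderr)
            return False
        except FileNotFoundError:
            # Raised when the executable (e.g. poetry) is not on PATH.
            print(f"command not found: {cmd[0]}", file=sys.stderr)
            return False
    return True
```

Calling `run_steps([["poetry", "lock"], ["poetry", "install"]])` would mirror the two steps in the hunk, assuming `poetry` is on `PATH`.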

@@ -14,12 +14,9 @@ pip install poetry

Next, navigate to your project directory and install the dependencies:

1. First lock the dependencies and then install them:
1. First lock the dependencies and install them by using the CLI command:
```bash
poetry lock
```
```bash
poetry install
crewai install
```
### Customizing

@@ -37,10 +34,6 @@ To kickstart your crew of AI agents and begin task execution, run this from the
```bash
$ crewai run
```
or
```bash
poetry run {{folder_name}}
```

This command initializes the {{name}} Crew, assembling the agents and assigning them tasks as defined in your configuration.

@@ -6,7 +6,8 @@ authors = ["Your Name <you@example.com>"]

[tool.poetry.dependencies]
python = ">=3.10,<=3.13"
crewai = { extras = ["tools"], version = "^0.51.0" }
crewai = { extras = ["tools"], version = ">=0.51.0,<1.0.0" }


[tool.poetry.scripts]
{{folder_name}} = "{{folder_name}}.main:run"
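The template swaps `version = "^0.51.0"` for `">=0.51.0,<1.0.0"`. Under Poetry's caret rules these are not equivalent: for a `0.x` version the caret keeps the minor number fixed, so `^0.51.0` means `>=0.51.0,<0.52.0`, while the new range admits any `0.x` release from 0.51.0 on. The `poetry.lock` hunk, by contrast, spells each caret out exactly (for example `chromadb = "^0.4.22"` becomes `">=0.4.22,<0.5.0"`). A small sketch of that expansion; `expand_caret` is a hypothetical helper written for illustration, not part of Poetry:

```python
def expand_caret(constraint: str) -> str:
    """Expand a caret constraint such as "^0.51.0" into ">=lower,<upper",
    keeping the same number of version components as the input."""
    if not constraint.startswith("^"):
        raise ValueError(f"not a caret constraint: {constraint!r}")
    base = constraint[1:]
    parts = [int(p) for p in base.split(".")]
    # Bump the left-most non-zero component; if every written component is
    # zero (e.g. "^0.0"), bump the last one instead.
    bump = next((i for i, p in enumerate(parts) if p != 0), len(parts) - 1)
    upper = parts[:bump] + [parts[bump] + 1] + [0] * (len(parts) - bump - 1)
    return f">={base},<{'.'.join(str(p) for p in upper)}"


if __name__ == "__main__":
    for c in ("^0.51.0", "^0.4.22", "^4.12.3", "^0.8"):
        print(f"{c:10} -> {expand_caret(c)}")
```

The outputs for `^4.12.3`, `^0.8` and `^0.1.114` line up with the explicit ranges written into the lock file above.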

@@ -15,12 +15,11 @@ pip install poetry
Next, navigate to your project directory and install the dependencies:

1. First lock the dependencies and then install them:

```bash
poetry lock
```
```bash
poetry install
crewai install
```

### Customizing

**Add your `OPENAI_API_KEY` into the `.env` file**

@@ -35,7 +34,7 @@ poetry install
To kickstart your crew of AI agents and begin task execution, run this from the root folder of your project:

```bash
poetry run {{folder_name}}
crewai run
```

This command initializes the {{name}} Crew, assembling the agents and assigning them tasks as defined in your configuration.

@@ -49,6 +48,7 @@ The {{name}} Crew is composed of multiple AI agents, each with unique roles, goa
## Support

For support, questions, or feedback regarding the {{crew_name}} Crew or crewAI:

- Visit our [documentation](https://docs.crewai.com)
- Reach out to us through our [GitHub repository](https://github.com/joaomdmoura/crewai)
- [Join our Discord](https://discord.com/invite/X4JWnZnxPb)

@@ -6,7 +6,7 @@ authors = ["Your Name <you@example.com>"]

[tool.poetry.dependencies]
python = ">=3.10,<=3.13"
crewai = { extras = ["tools"], version = "^0.51.0" }
crewai = { extras = ["tools"], version = ">=0.51.0,<1.0.0" }
asyncio = "*"

[tool.poetry.scripts]

@@ -16,10 +16,7 @@ Next, navigate to your project directory and install the dependencies:

1. First lock the dependencies and then install them:
```bash
poetry lock
```
```bash
poetry install
crewai install
```
### Customizing

@@ -35,7 +32,7 @@ poetry install
To kickstart your crew of AI agents and begin task execution, run this from the root folder of your project:

```bash
poetry run {{folder_name}}
crewai run
```

This command initializes the {{name}} Crew, assembling the agents and assigning them tasks as defined in your configuration.

@@ -6,7 +6,8 @@ authors = ["Your Name <you@example.com>"]

[tool.poetry.dependencies]
python = ">=3.10,<=3.13"
crewai = { extras = ["tools"], version = "^0.51.0" }
crewai = { extras = ["tools"], version = ">=0.51.0,<1.0.0" }


[tool.poetry.scripts]
{{folder_name}} = "{{folder_name}}.main:main"

@@ -1,16 +1,15 @@
import asyncio
import json
import os
import uuid
from concurrent.futures import Future
from hashlib import md5
import os
from typing import TYPE_CHECKING, Any, Dict, List, Optional, Tuple, Union

from langchain_core.callbacks import BaseCallbackHandler
from pydantic import (
UUID4,
BaseModel,
ConfigDict,
Field,
InstanceOf,
Json,

@@ -48,11 +47,10 @@ from crewai.utilities.planning_handler import CrewPlanner
from crewai.utilities.task_output_storage_handler import TaskOutputStorageHandler
from crewai.utilities.training_handler import CrewTrainingHandler


agentops = None
if os.environ.get("AGENTOPS_API_KEY"):
try:
import agentops
import agentops # type: ignore
except ImportError:
pass

@@ -106,7 +104,6 @@ class Crew(BaseModel):

name: Optional[str] = Field(default=None)
cache: bool = Field(default=True)
model_config = ConfigDict(arbitrary_types_allowed=True)
tasks: List[Task] = Field(default_factory=list)
agents: List[BaseAgent] = Field(default_factory=list)
process: Process = Field(default=Process.sequential)

@@ -364,7 +361,7 @@ class Crew(BaseModel):
source = [agent.key for agent in self.agents] + [
task.key for task in self.tasks
]
return md5("|".join(source).encode()).hexdigest()
return md5("|".join(source).encode(), usedforsecurity=False).hexdigest()

def _setup_from_config(self):
assert self.config is not None, "Config should not be None."

@@ -541,7 +538,7 @@ class Crew(BaseModel):
)._handle_crew_planning()

for task, step_plan in zip(self.tasks, result.list_of_plans_per_task):
task.description += step_plan
task.description += step_plan.plan

def _store_execution_log(
self,
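The planning fix appends `step_plan.plan` instead of `step_plan`: each item of `result.list_of_plans_per_task` appears to be an object carrying the plan text in a `plan` attribute, and `str += object` raises `TypeError`. A sketch with a dataclass stand-in; `PlanPerTask` with `task` and `plan` fields is an assumption modeled on the attribute name in the hunk, and `append_plans` is hypothetical:

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PlanPerTask:
    """Assumed shape of one planner result: the task it targets and its plan text."""

    task: str
    plan: str


def append_plans(descriptions: List[str], plans: List[PlanPerTask]) -> List[str]:
    # zip pairs each task description with its plan, as the hunk's loop does;
    # only the .plan string can be concatenated onto a str.
    return [desc + step_plan.plan for desc, step_plan in zip(descriptions, plans)]
```

Note that `zip` silently stops at the shorter list, so a planner that returns fewer plans than there are tasks leaves the remaining descriptions untouched.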

@@ -6,12 +6,20 @@ def task(func):
task.registration_order = []

func.is_task = True
wrapped_func = memoize(func)
memoized_func = memoize(func)

# Append the function name to the registration order list
task.registration_order.append(func.__name__)

return wrapped_func
def wrapper(*args, **kwargs):
result = memoized_func(*args, **kwargs)

if not result.name:
result.name = func.__name__

return result

return wrapper


def agent(func):
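The `task` decorator now returns a `wrapper` that calls the memoized factory and, when the returned task has no `name`, defaults it to the decorated method's name. A self-contained sketch of that pattern; `Task` and `memoize` below are minimal, hypothetical stand-ins (crewAI's real ones are not shown in this hunk), and unlike the hunk's bare `wrapper` this version uses `functools.wraps`:

```python
import functools
from typing import Any, Callable, Dict, Optional, Tuple


class Task:
    """Hypothetical stand-in for crewai.Task; only .name matters here."""

    def __init__(self, name: Optional[str] = None) -> None:
        self.name = name


def memoize(func: Callable[..., Any]) -> Callable[..., Any]:
    """Hypothetical stand-in: cache results by (hashable) call arguments."""
    cache: Dict[Tuple[Any, ...], Any] = {}

    @functools.wraps(func)
    def memoized(*args: Any, **kwargs: Any) -> Any:
        key = (args, tuple(sorted(kwargs.items())))
        if key not in cache:
            cache[key] = func(*args, **kwargs)
        return cache[key]

    return memoized


def task(func: Callable[..., Task]) -> Callable[..., Task]:
    if not hasattr(task, "registration_order"):
        task.registration_order = []  # type: ignore[attr-defined]

    func.is_task = True  # type: ignore[attr-defined]
    memoized_func = memoize(func)
    task.registration_order.append(func.__name__)  # type: ignore[attr-defined]

    @functools.wraps(func)
    def wrapper(*args: Any, **kwargs: Any) -> Task:
        result = memoized_func(*args, **kwargs)
        # Default the task's name to the decorated function's name.
        if not result.name:
            result.name = func.__name__
        return result

    return wrapper
```

One side effect of `functools.wraps`: it copies the wrapped function's `__dict__`, so `is_task` set on `func` is also visible on the returned wrapper. The bare `wrapper` in the hunk does not carry that attribute; whether crewAI relies on it elsewhere is not visible here.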

@@ -1,56 +1,45 @@
import inspect
import os
from pathlib import Path
from typing import Any, Callable, Dict

import yaml
from dotenv import load_dotenv
from pydantic import ConfigDict

load_dotenv()


def CrewBase(cls):
class WrappedClass(cls):
model_config = ConfigDict(arbitrary_types_allowed=True)
is_crew_class: bool = True # type: ignore

base_directory = None
for frame_info in inspect.stack():
if "site-packages" not in frame_info.filename:
base_directory = Path(frame_info.filename).parent.resolve()
break
# Get the directory of the class being decorated
base_directory = Path(inspect.getfile(cls)).parent

original_agents_config_path = getattr(
cls, "agents_config", "config/agents.yaml"
)

original_tasks_config_path = getattr(cls, "tasks_config", "config/tasks.yaml")

def __init__(self, *args, **kwargs):
super().__init__(*args, **kwargs)

if self.base_directory is None:
raise Exception(
"Unable to dynamically determine the project's base directory, you must run it from the project's root directory."
)
agents_config_path = self.base_directory / self.original_agents_config_path
tasks_config_path = self.base_directory / self.original_tasks_config_path

self.agents_config = self.load_yaml(
os.path.join(self.base_directory, self.original_agents_config_path)
)

self.tasks_config = self.load_yaml(
os.path.join(self.base_directory, self.original_tasks_config_path)
)
self.agents_config = self.load_yaml(agents_config_path)
self.tasks_config = self.load_yaml(tasks_config_path)

self.map_all_agent_variables()
self.map_all_task_variables()

@staticmethod
def load_yaml(config_path: str):
with open(config_path, "r") as file:
# parsedContent = YamlParser.parse(file) # type: ignore # Argument 1 to "parse" has incompatible type "TextIOWrapper"; expected "YamlParser"
return yaml.safe_load(file)
def load_yaml(config_path: Path):