Compare commits

6 Commits

devin/1750

...

devin/1750

| Author | SHA1 | Date |
|---|---|---|
| | 13cd8baa2c | |
| | a9823a4368 | |
| | 4e288e4cf7 | |
| | f6dfec61d6 | |
| | 060c486948 | |
| | 8b176d0598 | |

Binary files added (normal files):

- docs/images/crewai_traces.gif (10 MiB)
- docs/images/maxim_agent_tracking.png (1.3 MiB)
- docs/images/maxim_alerts_1.png (1.1 MiB)
- docs/images/maxim_dashboard_1.png (617 KiB)
- docs/images/maxim_playground.png (1.2 MiB)
- docs/images/maxim_trace_eval.png (845 KiB)
- docs/images/maxim_versions.png (1.3 MiB)

@@ -6,11 +6,11 @@ icon: plug

|

||||

|

||||

## Overview

|

||||

|

||||

The [Model Context Protocol](https://modelcontextprotocol.io/introduction) (MCP) provides a standardized way for AI agents to provide context to LLMs by communicating with external services, known as MCP Servers.

|

||||

The `crewai-tools` library extends CrewAI's capabilities by allowing you to seamlessly integrate tools from these MCP servers into your agents.

|

||||

This gives your crews access to a vast ecosystem of functionalities.

|

||||

The [Model Context Protocol](https://modelcontextprotocol.io/introduction) (MCP) provides a standardized way for AI agents to provide context to LLMs by communicating with external services, known as MCP Servers.

|

||||

The `crewai-tools` library extends CrewAI's capabilities by allowing you to seamlessly integrate tools from these MCP servers into your agents.

|

||||

This gives your crews access to a vast ecosystem of functionalities.

|

||||

|

||||

We currently support the following transport mechanisms:

|

||||

We currently support the following transport mechanisms:

|

||||

|

||||

- **Stdio**: for local servers (communication via standard input/output between processes on the same machine)

|

||||

- **Server-Sent Events (SSE)**: for remote servers (unidirectional, real-time data streaming from server to client over HTTP)

|

||||

@@ -52,27 +52,27 @@ from mcp import StdioServerParameters # For Stdio Server

|

||||

# Example server_params (choose one based on your server type):

|

||||

# 1. Stdio Server:

|

||||

server_params=StdioServerParameters(

|

||||

command="python3",

|

||||

command="python3",

|

||||

args=["servers/your_server.py"],

|

||||

env={"UV_PYTHON": "3.12", **os.environ},

|

||||

)

|

||||

|

||||

# 2. SSE Server:

|

||||

server_params = {

|

||||

"url": "http://localhost:8000/sse",

|

||||

"url": "http://localhost:8000/sse",

|

||||

"transport": "sse"

|

||||

}

|

||||

|

||||

# 3. Streamable HTTP Server:

|

||||

server_params = {

|

||||

"url": "http://localhost:8001/mcp",

|

||||

"url": "http://localhost:8001/mcp",

|

||||

"transport": "streamable-http"

|

||||

}

|

||||

|

||||

# Example usage (uncomment and adapt once server_params is set):

|

||||

with MCPServerAdapter(server_params) as mcp_tools:

|

||||

print(f"Available tools: {[tool.name for tool in mcp_tools]}")

|

||||

|

||||

|

||||

my_agent = Agent(

|

||||

role="MCP Tool User",

|

||||

goal="Utilize tools from an MCP server.",

|

||||

@@ -101,44 +101,79 @@ with MCPServerAdapter(server_params) as mcp_tools:

|

||||

)

|

||||

# ... rest of your crew setup ...

|

||||

```

|

||||

|

||||

## Using with CrewBase

|

||||

|

||||

To use MCP server tools within a `CrewBase` class, call the `get_mcp_tools` method. Provide server configurations via the `mcp_server_params` attribute, either as a single configuration or as a list of multiple server configurations.

|

||||

|

||||

```python

|

||||

@CrewBase

|

||||

class CrewWithMCP:

|

||||

# ... define your agents and tasks config file ...

|

||||

|

||||

mcp_server_params = [

|

||||

# Streamable HTTP Server

|

||||

{

|

||||

"url": "http://localhost:8001/mcp",

|

||||

"transport": "streamable-http"

|

||||

},

|

||||

# SSE Server

|

||||

{

|

||||

"url": "http://localhost:8000/sse",

|

||||

"transport": "sse"

|

||||

},

|

||||

# StdIO Server

|

||||

StdioServerParameters(

|

||||

command="python3",

|

||||

args=["servers/your_stdio_server.py"],

|

||||

env={"UV_PYTHON": "3.12", **os.environ},

|

||||

)

|

||||

]

|

||||

|

||||

@agent

|

||||

def your_agent(self):

|

||||

return Agent(config=self.agents_config["your_agent"], tools=self.get_mcp_tools()) # you can also filter which tools are available

|

||||

|

||||

# ... rest of your crew setup ...

|

||||

```

|

||||

## Explore MCP Integrations

|

||||

|

||||

<CardGroup cols={2}>

|

||||

<Card

|

||||

title="Stdio Transport"

|

||||

icon="server"

|

||||

<Card

|

||||

title="Stdio Transport"

|

||||

icon="server"

|

||||

href="/mcp/stdio"

|

||||

color="#3B82F6"

|

||||

>

|

||||

Connect to local MCP servers via standard input/output. Ideal for scripts and local executables.

|

||||

</Card>

|

||||

<Card

|

||||

title="SSE Transport"

|

||||

icon="wifi"

|

||||

<Card

|

||||

title="SSE Transport"

|

||||

icon="wifi"

|

||||

href="/mcp/sse"

|

||||

color="#10B981"

|

||||

>

|

||||

Integrate with remote MCP servers using Server-Sent Events for real-time data streaming.

|

||||

</Card>

|

||||

<Card

|

||||

title="Streamable HTTP Transport"

|

||||

icon="globe"

|

||||

<Card

|

||||

title="Streamable HTTP Transport"

|

||||

icon="globe"

|

||||

href="/mcp/streamable-http"

|

||||

color="#F59E0B"

|

||||

>

|

||||

Utilize flexible Streamable HTTP for robust communication with remote MCP servers.

|

||||

</Card>

|

||||

<Card

|

||||

title="Connecting to Multiple Servers"

|

||||

icon="layer-group"

|

||||

<Card

|

||||

title="Connecting to Multiple Servers"

|

||||

icon="layer-group"

|

||||

href="/mcp/multiple-servers"

|

||||

color="#8B5CF6"

|

||||

>

|

||||

Aggregate tools from several MCP servers simultaneously using a single adapter.

|

||||

</Card>

|

||||

<Card

|

||||

title="Security Considerations"

|

||||

icon="lock"

|

||||

<Card

|

||||

title="Security Considerations"

|

||||

icon="lock"

|

||||

href="/mcp/security"

|

||||

color="#EF4444"

|

||||

>

|

||||

@@ -148,7 +183,7 @@ with MCPServerAdapter(server_params) as mcp_tools:

|

||||

|

||||

Check out this repository for full demos and examples of MCP integration with CrewAI! 👇

|

||||

|

||||

<Card

|

||||

<Card

|

||||

title="GitHub Repository"

|

||||

icon="github"

|

||||

href="https://github.com/tonykipkemboi/crewai-mcp-demo"

|

||||

@@ -163,7 +198,7 @@ Always ensure that you trust an MCP Server before using it.

|

||||

</Warning>

|

||||

|

||||

#### Security Warning: DNS Rebinding Attacks

|

||||

SSE transports can be vulnerable to DNS rebinding attacks if not properly secured.

|

||||

SSE transports can be vulnerable to DNS rebinding attacks if not properly secured.

|

||||

To prevent this:

|

||||

|

||||

1. **Always validate Origin headers** on incoming SSE connections to ensure they come from expected sources

|

||||

@@ -175,6 +210,6 @@ Without these protections, attackers could use DNS rebinding to interact with lo

|

||||

For more details, see [Anthropic's MCP Transport Security docs](https://modelcontextprotocol.io/docs/concepts/transports#security-considerations).
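The Origin check recommended above could look roughly like the following. This is a minimal sketch, not part of CrewAI or the MCP SDK: the `ALLOWED_ORIGINS` set and the `is_allowed_origin` helper are hypothetical names, and rejecting requests without an `Origin` header is a conservative assumption that may not suit non-browser clients.

```python
from typing import Optional
from urllib.parse import urlparse

# Hypothetical allow-list; replace with the origins your deployment expects.
ALLOWED_ORIGINS = {"http://localhost:8000", "http://127.0.0.1:8000"}


def is_allowed_origin(origin_header: Optional[str]) -> bool:
    """Return True only if the Origin header exactly matches an allowed origin."""
    if not origin_header:
        # Assumption for this sketch: a missing header is treated as untrusted.
        return False
    parsed = urlparse(origin_header)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        return False
    # Compare scheme://host:port as a whole so suffix tricks do not match.
    normalized = f"{parsed.scheme}://{parsed.netloc}".lower()
    return normalized in ALLOWED_ORIGINS
```

A server would call such a helper before upgrading a request to an SSE stream and respond with an error status when it returns `False`.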

|

||||

|

||||

### Limitations

|

||||

* **Supported Primitives**: Currently, `MCPServerAdapter` primarily supports adapting MCP `tools`.

|

||||

* **Supported Primitives**: Currently, `MCPServerAdapter` primarily supports adapting MCP `tools`.

|

||||

Other MCP primitives like `prompts` or `resources` are not directly integrated as CrewAI components through this adapter at this time.

|

||||

* **Output Handling**: The adapter typically processes the primary text output from an MCP tool (e.g., `.content[0].text`). Complex or multi-modal outputs might require custom handling if not fitting this pattern.
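Where a tool returns several content items, custom handling might collect every text item rather than reading only `.content[0].text`. The sketch below illustrates that idea under stated assumptions: `ContentItem` and `ToolResult` are hypothetical stand-ins for a tool result shape, not the MCP SDK's or the adapter's actual classes.

```python
from dataclasses import dataclass, field
from typing import Any, List


@dataclass
class ContentItem:
    """Hypothetical stand-in for one item in a tool result."""
    type: str
    text: str = ""


@dataclass
class ToolResult:
    """Hypothetical stand-in for a tool result carrying a `content` list."""
    content: List[ContentItem] = field(default_factory=list)


def collect_text(result: Any) -> str:
    """Join all text items; non-text items (e.g. images) are skipped."""
    items = getattr(result, "content", None) or []
    parts = [
        item.text
        for item in items
        if getattr(item, "type", None) == "text" and getattr(item, "text", "")
    ]
    return "\n".join(parts)
```

Non-text items are silently dropped here; a real integration would likely need to decide how to surface them to the agent.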

|

||||

|

||||

@@ -1,28 +1,107 @@

|

||||

---

|

||||

title: Maxim Integration

|

||||

description: Start Agent monitoring, evaluation, and observability

|

||||

icon: bars-staggered

|

||||

title: "Maxim Integration"

|

||||

description: "Start Agent monitoring, evaluation, and observability"

|

||||

icon: "infinity"

|

||||

---

|

||||

|

||||

# Maxim Integration

|

||||

# Maxim Overview

|

||||

|

||||

Maxim AI provides comprehensive agent monitoring, evaluation, and observability for your CrewAI applications. With Maxim's one-line integration, you can easily trace and analyse agent interactions, performance metrics, and more.

|

||||

|

||||

## Features

|

||||

|

||||

## Features: One Line Integration

|

||||

### Prompt Management

|

||||

|

||||

- **End-to-End Agent Tracing**: Monitor the complete lifecycle of your agents

|

||||

- **Performance Analytics**: Track latency, tokens consumed, and costs

|

||||

- **Hyperparameter Monitoring**: View the configuration details of your agent runs

|

||||

- **Tool Call Tracking**: Observe when and how agents use their tools

|

||||

- **Advanced Visualisation**: Understand agent trajectories through intuitive dashboards

|

||||

Maxim's Prompt Management capabilities enable you to create, organize, and optimize prompts for your CrewAI agents. Rather than hardcoding instructions, leverage Maxim’s SDK to dynamically retrieve and apply version-controlled prompts.

|

||||

|

||||

<Tabs>

|

||||

<Tab title="Prompt Playground">

|

||||

Create, refine, experiment with, and deploy your prompts via the playground. Organize your prompts using folders and versions, experiment with real-world cases by linking tools and context, and deploy based on custom logic.

|

||||

|

||||

Easily experiment across models by [**configuring models**](https://www.getmaxim.ai/docs/introduction/quickstart/setting-up-workspace#add-model-api-keys) and selecting the relevant model from the dropdown at the top of the prompt playground.

|

||||

|

||||

<img src='https://raw.githubusercontent.com/akmadan/crewAI/docs_maxim_observability/docs/images/maxim_playground.png'> </img>

|

||||

</Tab>

|

||||

<Tab title="Prompt Versions">

|

||||

As teams build their AI applications, a big part of experimentation is iterating on the prompt structure. In order to collaborate effectively and organize your changes clearly, Maxim allows prompt versioning and comparison runs across versions.

|

||||

|

||||

<img src='https://raw.githubusercontent.com/akmadan/crewAI/docs_maxim_observability/docs/images/maxim_versions.png'> </img>

|

||||

</Tab>

|

||||

<Tab title="Prompt Comparisons">

|

||||

Iterating on prompts as your AI application evolves requires experiments across models, prompt structures, and more. To compare versions and make informed decisions about changes, the comparison playground provides a side-by-side view of results.

|

||||

|

||||

## **Why use Prompt comparison?**

|

||||

|

||||

Prompt comparison combines multiple single Prompts into one view, enabling a streamlined approach for various workflows:

|

||||

|

||||

1. **Model comparison**: Evaluate the performance of different models on the same Prompt.

|

||||

2. **Prompt optimization**: Compare different versions of a Prompt to identify the most effective formulation.

|

||||

3. **Cross-Model consistency**: Ensure consistent outputs across various models for the same Prompt.

|

||||

4. **Performance benchmarking**: Analyze metrics like latency, cost, and token count across different models and Prompts.

|

||||

</Tab>

|

||||

</Tabs>

|

||||

|

||||

### Observability & Evals

|

||||

|

||||

Maxim AI provides comprehensive observability & evaluation for your CrewAI agents, helping you understand exactly what's happening during each execution.

|

||||

|

||||

<Tabs>

|

||||

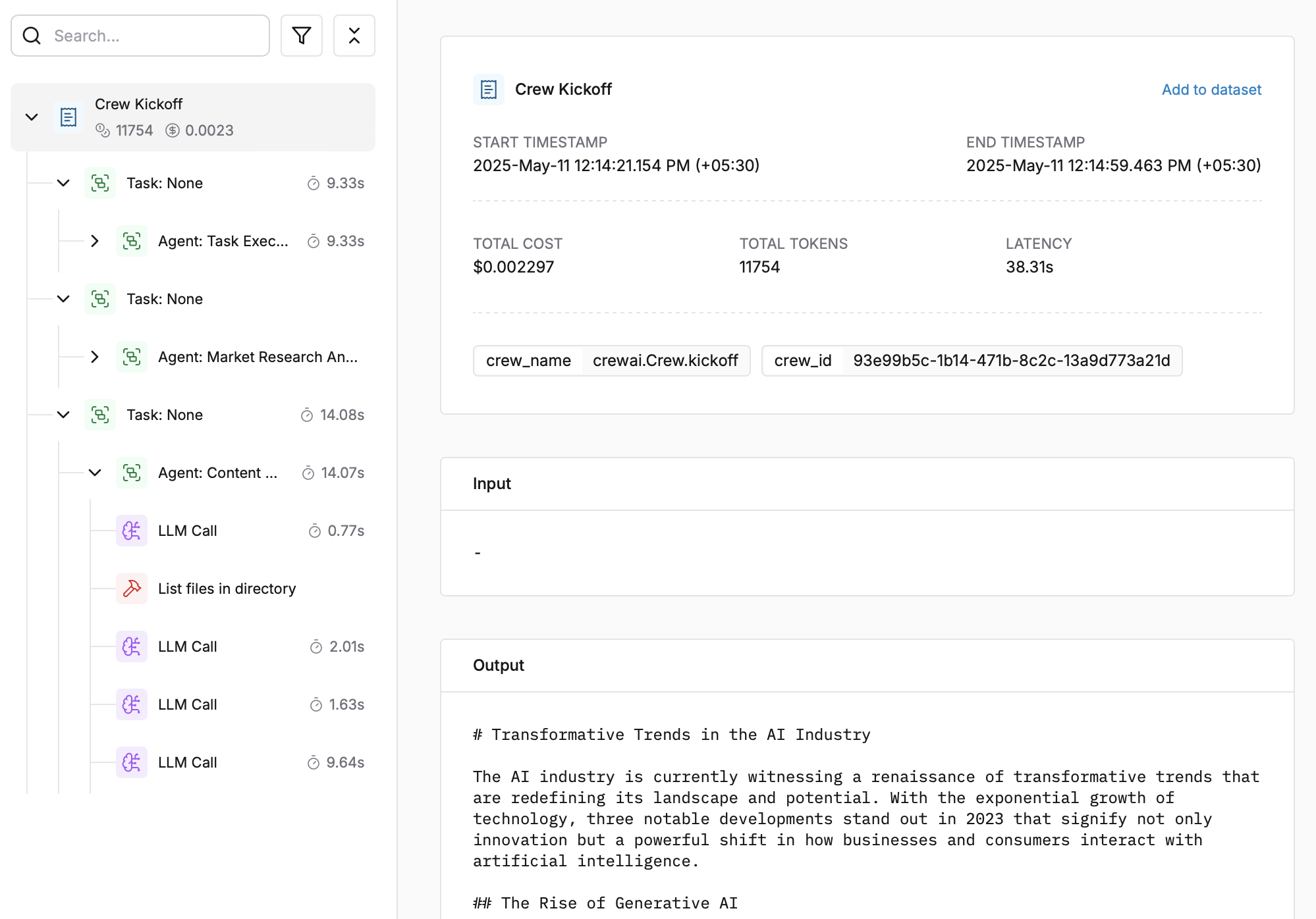

<Tab title="Agent Tracing">

|

||||

Track your agent’s complete lifecycle, including tool calls, agent trajectories, and decision flows effortlessly.

|

||||

|

||||

<img src='https://raw.githubusercontent.com/akmadan/crewAI/docs_maxim_observability/docs/images/maxim_agent_tracking.png'> </img>

|

||||

</Tab>

|

||||

<Tab title="Analytics + Evals">

|

||||

Run detailed evaluations on full traces or individual nodes with support for:

|

||||

|

||||

- Multi-step interactions and granular trace analysis

|

||||

- Session Level Evaluations

|

||||

- Simulations for real-world testing

|

||||

|

||||

<img src='https://raw.githubusercontent.com/akmadan/crewAI/docs_maxim_observability/docs/images/maxim_trace_eval.png'> </img>

|

||||

|

||||

<CardGroup cols={3}>

|

||||

<Card title="Auto Evals on Logs" icon="e" href="https://www.getmaxim.ai/docs/observe/how-to/evaluate-logs/auto-evaluation">

|

||||

<p>

|

||||

Evaluate captured logs automatically from the UI based on filters and sampling

|

||||

|

||||

</p>

|

||||

</Card>

|

||||

<Card title="Human Evals on Logs" icon="hand" href="https://www.getmaxim.ai/docs/observe/how-to/evaluate-logs/human-evaluation">

|

||||

<p>

|

||||

Use human evaluation or rating to assess the quality of your logs and evaluate them.

|

||||

|

||||

</p>

|

||||

</Card>

|

||||

<Card title="Node Level Evals" icon="road" href="https://www.getmaxim.ai/docs/observe/how-to/evaluate-logs/node-level-evaluation">

|

||||

<p>

|

||||

Evaluate any component of your trace or log to gain insights into your agent’s behavior.

|

||||

|

||||

</p>

|

||||

</Card>

|

||||

</CardGroup>

|

||||

---

|

||||

</Tab>

|

||||

<Tab title="Alerting">

|

||||

Set thresholds on **errors**, **cost**, **token usage**, **user feedback**, and **latency**, and get real-time alerts via Slack or PagerDuty.

|

||||

|

||||

<img src='https://raw.githubusercontent.com/akmadan/crewAI/docs_maxim_observability/docs/images/maxim_alerts_1.png'> </img>

|

||||

</Tab>

|

||||

<Tab title="Dashboards">

|

||||

Visualize Traces over time, usage metrics, latency & error rates with ease.

|

||||

|

||||

<img src='https://raw.githubusercontent.com/akmadan/crewAI/docs_maxim_observability/docs/images/maxim_dashboard_1.png'> </img>

|

||||

</Tab>

|

||||

</Tabs>

|

||||

|

||||

## Getting Started

|

||||

|

||||

### Prerequisites

|

||||

|

||||

- Python version >=3.10

|

||||

|

||||

- Python version \>=3.10

|

||||

- A Maxim account ([sign up here](https://getmaxim.ai/))

|

||||

- Generate Maxim API Key

|

||||

- A CrewAI project

|

||||

|

||||

### Installation

|

||||

@@ -30,16 +109,14 @@ Maxim AI provides comprehensive agent monitoring, evaluation, and observability

|

||||

Install the Maxim SDK via pip:

|

||||

|

||||

```python

|

||||

pip install maxim-py>=3.6.2

|

||||

pip install maxim-py

|

||||

```

|

||||

|

||||

Or add it to your `requirements.txt`:

|

||||

|

||||

```

|

||||

maxim-py>=3.6.2

|

||||

maxim-py

|

||||

```

|

||||

|

||||

|

||||

### Basic Setup

|

||||

|

||||

### 1. Set up environment variables

|

||||

@@ -64,18 +141,15 @@ from maxim.logger.crewai import instrument_crewai

|

||||

|

||||

### 3. Initialise Maxim with your API key

|

||||

|

||||

```python

|

||||

# Initialize Maxim logger

|

||||

logger = Maxim().logger()

|

||||

|

||||

```python {8}

|

||||

# Instrument CrewAI with just one line

|

||||

instrument_crewai(logger)

|

||||

instrument_crewai(Maxim().logger())

|

||||

```

|

||||

|

||||

### 4. Create and run your CrewAI application as usual

|

||||

|

||||

```python

|

||||

|

||||

# Create your agent

|

||||

researcher = Agent(

|

||||

role='Senior Research Analyst',

|

||||

@@ -105,7 +179,8 @@ finally:

|

||||

maxim.cleanup() # Ensure cleanup happens even if errors occur

|

||||

```

|

||||

|

||||

That's it! All your CrewAI agent interactions will now be logged and available in your Maxim dashboard.

|

||||

|

||||

That's it\! All your CrewAI agent interactions will now be logged and available in your Maxim dashboard.

|

||||

|

||||

Check this Google Colab Notebook for a quick reference - [Notebook](https://colab.research.google.com/drive/1ZKIZWsmgQQ46n8TH9zLsT1negKkJA6K8?usp=sharing)

|

||||

|

||||

@@ -113,40 +188,44 @@ Check this Google Colab Notebook for a quick reference - [Notebook](https://cola

|

||||

|

||||

After running your CrewAI application:

|

||||

|

||||

|

||||

|

||||

1. Log in to your [Maxim Dashboard](https://getmaxim.ai/dashboard)

|

||||

1. Log in to your [Maxim Dashboard](https://app.getmaxim.ai/login)

|

||||

2. Navigate to your repository

|

||||

3. View detailed agent traces, including:

|

||||

- Agent conversations

|

||||

- Tool usage patterns

|

||||

- Performance metrics

|

||||

- Cost analytics

|

||||

- Agent conversations

|

||||

- Tool usage patterns

|

||||

- Performance metrics

|

||||

- Cost analytics

|

||||

|

||||

<img src='https://raw.githubusercontent.com/akmadan/crewAI/docs_maxim_observability/docs/images/crewai_traces.gif'> </img>

|

||||

|

||||

## Troubleshooting

|

||||

|

||||

### Common Issues

|

||||

|

||||

- **No traces appearing**: Ensure your API key and repository ID are correc

|

||||

- Ensure you've **called `instrument_crewai()`** ***before*** running your crew. This initializes logging hooks correctly.

|

||||

- **No traces appearing**: Ensure your API key and repository ID are correct

|

||||

- Ensure you've **called `instrument_crewai()`** **_before_** running your crew. This initializes logging hooks correctly.

|

||||

- Set `debug=True` in your `instrument_crewai()` call to surface any internal errors:

|

||||

|

||||

```python

|

||||

instrument_crewai(logger, debug=True)

|

||||

```

|

||||

|

||||

|

||||

```python

|

||||

instrument_crewai(logger, debug=True)

|

||||

```

|

||||

- Configure your agents with `verbose=True` to capture detailed logs:

|

||||

|

||||

```python

|

||||

|

||||

agent = CrewAgent(..., verbose=True)

|

||||

```

|

||||

|

||||

|

||||

```python

|

||||

agent = CrewAgent(..., verbose=True)

|

||||

```

|

||||

- Double-check that `instrument_crewai()` is called **before** creating or executing agents. This might be obvious, but it's a common oversight.

|

||||

|

||||

### Support

|

||||

## Resources

|

||||

|

||||

If you encounter any issues:

|

||||

|

||||

- Check the [Maxim Documentation](https://getmaxim.ai/docs)

|

||||

- Maxim Github [Link](https://github.com/maximhq)

|

||||

<CardGroup cols="3">

|

||||

<Card title="CrewAI Docs" icon="book" href="https://docs.crewai.com/">

|

||||

Official CrewAI documentation

|

||||

</Card>

|

||||

<Card title="Maxim Docs" icon="book" href="https://getmaxim.ai/docs">

|

||||

Official Maxim documentation

|

||||

</Card>

|

||||

<Card title="Maxim Github" icon="github" href="https://github.com/maximhq">

|

||||

Maxim Github

|

||||

</Card>

|

||||

</CardGroup>

|

||||

@@ -94,17 +94,18 @@ def _get_project_attribute(

|

||||

|

||||

attribute = _get_nested_value(pyproject_content, keys)

|

||||

except FileNotFoundError:

|

||||

print(f"Error: {pyproject_path} not found.")

|

||||

console.print(f"Error: {pyproject_path} not found.", style="bold red")

|

||||

except KeyError:

|

||||

print(f"Error: {pyproject_path} is not a valid pyproject.toml file.")

|

||||

console.print(f"Error: {pyproject_path} is not a valid pyproject.toml file.", style="bold red")

|

||||

except tomllib.TOMLDecodeError if sys.version_info >= (3, 11) else Exception as e: # type: ignore

|

||||

print(

|

||||

console.print(

|

||||

f"Error: {pyproject_path} is not a valid TOML file."

|

||||

if sys.version_info >= (3, 11)

|

||||

else f"Error reading the pyproject.toml file: {e}"

|

||||

else f"Error reading the pyproject.toml file: {e}",

|

||||

style="bold red",

|

||||

)

|

||||

except Exception as e:

|

||||

print(f"Error reading the pyproject.toml file: {e}")

|

||||

console.print(f"Error reading the pyproject.toml file: {e}", style="bold red")

|

||||

|

||||

if require and not attribute:

|

||||

console.print(

|

||||

@@ -137,9 +138,9 @@ def fetch_and_json_env_file(env_file_path: str = ".env") -> dict:

|

||||

return env_dict

|

||||

|

||||

except FileNotFoundError:

|

||||

print(f"Error: {env_file_path} not found.")

|

||||

console.print(f"Error: {env_file_path} not found.", style="bold red")

|

||||

except Exception as e:

|

||||

print(f"Error reading the .env file: {e}")

|

||||

console.print(f"Error reading the .env file: {e}", style="bold red")

|

||||

|

||||

return {}

|

||||

|

||||

@@ -255,50 +256,69 @@ def write_env_file(folder_path, env_vars):

|

||||

|

||||

|

||||

def get_crews(crew_path: str = "crew.py", require: bool = False) -> list[Crew]:

|

||||

"""Get the crew instances from the a file."""

|

||||

"""Get the crew instances from a file."""

|

||||

crew_instances = []

|

||||

try:

|

||||

import importlib.util

|

||||

|

||||

for root, _, files in os.walk("."):

|

||||

if crew_path in files:

|

||||

crew_os_path = os.path.join(root, crew_path)

|

||||

try:

|

||||

spec = importlib.util.spec_from_file_location(

|

||||

"crew_module", crew_os_path

|

||||

)

|

||||

if not spec or not spec.loader:

|

||||

continue

|

||||

module = importlib.util.module_from_spec(spec)

|

||||

# Add the current directory to sys.path to ensure imports resolve correctly

|

||||

current_dir = os.getcwd()

|

||||

if current_dir not in sys.path:

|

||||

sys.path.insert(0, current_dir)

|

||||

|

||||

# If we're not in src directory but there's a src directory, add it to path

|

||||

src_dir = os.path.join(current_dir, "src")

|

||||

if os.path.isdir(src_dir) and src_dir not in sys.path:

|

||||

sys.path.insert(0, src_dir)

|

||||

|

||||

# Search in both current directory and src directory if it exists

|

||||

search_paths = [".", "src"] if os.path.isdir("src") else ["."]

|

||||

|

||||

for search_path in search_paths:

|

||||

for root, _, files in os.walk(search_path):

|

||||

if crew_path in files and "cli/templates" not in root:

|

||||

crew_os_path = os.path.join(root, crew_path)

|

||||

try:

|

||||

sys.modules[spec.name] = module

|

||||

spec.loader.exec_module(module)

|

||||

|

||||

for attr_name in dir(module):

|

||||

module_attr = getattr(module, attr_name)

|

||||

|

||||

try:

|

||||

crew_instances.extend(fetch_crews(module_attr))

|

||||

except Exception as e:

|

||||

print(f"Error processing attribute {attr_name}: {e}")

|

||||

continue

|

||||

|

||||

except Exception as exec_error:

|

||||

print(f"Error executing module: {exec_error}")

|

||||

import traceback

|

||||

|

||||

print(f"Traceback: {traceback.format_exc()}")

|

||||

except (ImportError, AttributeError) as e:

|

||||

if require:

|

||||

console.print(

|

||||

f"Error importing crew from {crew_path}: {str(e)}",

|

||||

style="bold red",

|

||||

spec = importlib.util.spec_from_file_location(

|

||||

"crew_module", crew_os_path

|

||||

)

|

||||

if not spec or not spec.loader:

|

||||

continue

|

||||

|

||||

module = importlib.util.module_from_spec(spec)

|

||||

sys.modules[spec.name] = module

|

||||

|

||||

try:

|

||||

spec.loader.exec_module(module)

|

||||

|

||||

for attr_name in dir(module):

|

||||

module_attr = getattr(module, attr_name)

|

||||

try:

|

||||

crew_instances.extend(fetch_crews(module_attr))

|

||||

except Exception as e:

|

||||

console.print(f"Error processing attribute {attr_name}: {e}", style="bold red")

|

||||

continue

|

||||

|

||||

# If we found crew instances, break out of the loop

|

||||

if crew_instances:

|

||||

break

|

||||

|

||||

except Exception as exec_error:

|

||||

console.print(f"Error executing module: {exec_error}", style="bold red")

|

||||

|

||||

except (ImportError, AttributeError) as e:

|

||||

if require:

|

||||

console.print(

|

||||

f"Error importing crew from {crew_path}: {str(e)}",

|

||||

style="bold red",

|

||||

)

|

||||

continue

|

||||

|

||||

# If we found crew instances in this search path, break out of the search paths loop

|

||||

if crew_instances:

|

||||

break

|

||||

|

||||

if require:

|

||||

if require and not crew_instances:

|

||||

console.print("No valid Crew instance found in crew.py", style="bold red")

|

||||

raise SystemExit
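The search order used by the reworked `get_crews` (current directory first, then `src` if it exists, skipping anything under `cli/templates`) can be sketched in isolation as follows. `find_candidate_files` and its parameters are hypothetical names for illustration, not functions in this change. Because walking the base directory already descends into `src`, the sketch de-duplicates the paths it finds.

```python
import os
from typing import List


def find_candidate_files(
    filename: str = "crew.py",
    base_dir: str = ".",
    excluded_fragment: str = "cli/templates",
) -> List[str]:
    """Return paths to `filename` under base_dir, then under base_dir/src."""
    search_paths = [base_dir]
    src_dir = os.path.join(base_dir, "src")
    if os.path.isdir(src_dir):
        search_paths.append(src_dir)

    found: List[str] = []
    for search_path in search_paths:
        for root, _, files in os.walk(search_path):
            # Normalise separators so the exclusion also works on Windows.
            normalised_root = root.replace(os.sep, "/")
            if filename in files and excluded_fragment not in normalised_root:
                path = os.path.join(root, filename)
                if path not in found:
                    found.append(path)
    return found
```

Unlike the function in the diff, this sketch only locates files; importing each module and collecting `Crew` instances is left out.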

|

||||

|

||||

@@ -318,11 +338,15 @@ def get_crew_instance(module_attr) -> Crew | None:

|

||||

and module_attr.is_crew_class

|

||||

):

|

||||

return module_attr().crew()

|

||||

if (ismethod(module_attr) or isfunction(module_attr)) and get_type_hints(

|

||||

module_attr

|

||||

).get("return") is Crew:

|

||||

return module_attr()

|

||||

elif isinstance(module_attr, Crew):

|

||||

try:

|

||||

if (ismethod(module_attr) or isfunction(module_attr)) and get_type_hints(

|

||||

module_attr

|

||||

).get("return") is Crew:

|

||||

return module_attr()

|

||||

except Exception:

|

||||

return None

|

||||

|

||||

if isinstance(module_attr, Crew):

|

||||

return module_attr

|

||||

else:

|

||||

return None

|

||||

@@ -402,7 +426,8 @@ def _load_tools_from_init(init_file: Path) -> list[dict[str, Any]]:

|

||||

|

||||

if not hasattr(module, "__all__"):

|

||||

console.print(

|

||||

f"[bold yellow]Warning: No __all__ defined in {init_file}[/bold yellow]"

|

||||

f"Warning: No __all__ defined in {init_file}",

|

||||

style="bold yellow",

|

||||

)

|

||||

raise SystemExit(1)

|

||||

|

||||

|

||||

@@ -1,7 +1,8 @@

|

||||

import inspect

|

||||

import logging

|

||||

from pathlib import Path

|

||||

from typing import Any, Callable, Dict, TypeVar, cast

|

||||

from typing import Any, Callable, Dict, TypeVar, cast, List

|

||||

from crewai.tools import BaseTool

|

||||

|

||||

import yaml

|

||||

from dotenv import load_dotenv

|

||||

@@ -27,6 +28,8 @@ def CrewBase(cls: T) -> T:

|

||||

)

|

||||

original_tasks_config_path = getattr(cls, "tasks_config", "config/tasks.yaml")

|

||||

|

||||

mcp_server_params: Any = getattr(cls, "mcp_server_params", None)

|

||||

|

||||

def __init__(self, *args, **kwargs):

|

||||

super().__init__(*args, **kwargs)

|

||||

self.load_configurations()

|

||||

@@ -64,6 +67,39 @@ def CrewBase(cls: T) -> T:

|

||||

self._original_functions, "is_kickoff"

|

||||

)

|

||||

|

||||

# Add close mcp server method to after kickoff

|

||||

bound_method = self._create_close_mcp_server_method()

|

||||

self._after_kickoff['_close_mcp_server'] = bound_method

|

||||

|

||||

def _create_close_mcp_server_method(self):

|

||||

def _close_mcp_server(self, instance, outputs):

|

||||

adapter = getattr(self, '_mcp_server_adapter', None)

|

||||

if adapter is not None:

|

||||

try:

|

||||

adapter.stop()

|

||||

except Exception as e:

|

||||

logging.warning(f"Error stopping MCP server: {e}")

|

||||

return outputs

|

||||

|

||||

_close_mcp_server.is_after_kickoff = True

|

||||

|

||||

import types

|

||||

return types.MethodType(_close_mcp_server, self)

|

||||

|

||||

def get_mcp_tools(self) -> List[BaseTool]:

|

||||

if not self.mcp_server_params:

|

||||

return []

|

||||

|

||||

from crewai_tools import MCPServerAdapter

|

||||

|

||||

adapter = getattr(self, '_mcp_server_adapter', None)

|

||||

if adapter and isinstance(adapter, MCPServerAdapter):

|

||||

return adapter.tools

|

||||

|

||||

self._mcp_server_adapter = MCPServerAdapter(self.mcp_server_params)

|

||||

return self._mcp_server_adapter.tools
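The pattern in `get_mcp_tools` and the after-kickoff hook is a lazily created, cached adapter that is stopped once the run finishes. A minimal standalone sketch of that pattern is shown below; `FakeAdapter` and `ToolHost` are hypothetical stand-ins so the example runs without `crewai_tools`, and they do not reproduce the real `MCPServerAdapter` behaviour.

```python
from typing import List, Optional


class FakeAdapter:
    """Hypothetical stand-in for an adapter that connects on construction."""

    instances_created = 0

    def __init__(self, params: object) -> None:
        FakeAdapter.instances_created += 1
        self.tools: List[str] = [f"tool-for-{params}"]
        self.stopped = False

    def stop(self) -> None:
        self.stopped = True


class ToolHost:
    """Creates the adapter on first use and reuses it afterwards."""

    def __init__(self, params: Optional[object] = None) -> None:
        self.params = params
        self._adapter: Optional[FakeAdapter] = None

    def get_tools(self) -> List[str]:
        if not self.params:
            return []  # No servers configured, so there are no tools.
        if self._adapter is None:
            self._adapter = FakeAdapter(self.params)
        return self._adapter.tools

    def close(self) -> None:
        # Mirrors the after-kickoff cleanup: stop once, then forget the adapter.
        if self._adapter is not None:
            self._adapter.stop()
            self._adapter = None
```

Caching matters because several agents in one crew may each ask for tools; without it, every call would open a new server connection.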

|

||||

|

||||

|

||||

def load_configurations(self):

|

||||

"""Load agent and task configurations from YAML files."""

|

||||

if isinstance(self.original_agents_config_path, str):

|

||||

|

||||

@@ -1,3 +1,4 @@

|

||||

import os

|

||||

from typing import Any, Dict, Optional

|

||||

|

||||

from rich.console import Console

|

||||

@@ -7,6 +8,39 @@ from rich.tree import Tree

|

||||

from rich.live import Live

|

||||

from rich.syntax import Syntax

|

||||

|

||||

DEFAULT_EMOJI_MAP = {

|

||||

"✅": "[DONE]",

|

||||

"❌": "[FAILED]",

|

||||

"🚀": "[CREW]",

|

||||

"🔄": "[RUNNING]",

|

||||

"📋": "[TASK]",

|

||||

"🔧": "[TOOL]",

|

||||

"🧠": "[THINKING]",

|

||||

"🌊": "[FLOW]",

|

||||

"✨": "[CREATED]",

|

||||

"🧪": "[TEST]",

|

||||

"📚": "[KNOWLEDGE]",

|

||||

"🔍": "[SEARCH]",

|

||||

"🔎": "[QUERY]",

|

||||

"🤖": "[AGENT]",

|

||||

"📊": "[METRICS]",

|

||||

"⚡": "[QUICK]",

|

||||

"🎯": "[TARGET]",

|

||||

"🔗": "[LINK]",

|

||||

"💡": "[IDEA]",

|

||||

"⚠️": "[WARNING]",

|

||||

"🎉": "[SUCCESS]",

|

||||

"🔥": "[HOT]",

|

||||

"💾": "[SAVE]",

|

||||

"🔒": "[SECURE]",

|

||||

"🌟": "[STAR]",

|

||||

}

|

||||

|

||||

|

||||

def _parse_bool_env(val: str) -> bool:

|

||||

"""Parse environment variable value to boolean."""

|

||||

return val.lower() in ("true", "1", "yes") if val else False
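To make the accepted values concrete, the following sketch copies the helper's logic under a different name (`parse_bool_env`, chosen here for illustration) and runs it over a few inputs. Values such as `on`, or strings with surrounding whitespace, are not treated as true, because the comparison is exact after lowercasing.

```python
def parse_bool_env(val: str) -> bool:
    """Same logic as the helper above: only 'true', '1' and 'yes' enable the flag."""
    return val.lower() in ("true", "1", "yes") if val else False


if __name__ == "__main__":
    for raw in ("true", "TRUE", "1", "yes", "on", " true ", "", "0"):
        print(repr(raw), "->", parse_bool_env(raw))
```

In practice this means `CREWAI_DISABLE_EMOJIS=true` (or `1`, or `yes`) switches emojis off, while an unset or empty variable leaves them on.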

|

||||

|

||||

|

||||

class ConsoleFormatter:

|

||||

current_crew_tree: Optional[Tree] = None

|

||||

@@ -24,6 +58,13 @@ class ConsoleFormatter:

|

||||

def __init__(self, verbose: bool = False):

|

||||

self.console = Console(width=None)

|

||||

self.verbose = verbose

|

||||

self.disable_emojis = _parse_bool_env(os.getenv("CREWAI_DISABLE_EMOJIS", ""))

|

||||

|

||||

self.emoji_map = DEFAULT_EMOJI_MAP.copy()

|

||||

self.icon_cache: Dict[str, str] = {}

|

||||

if self.disable_emojis:

|

||||

self.icon_cache = {emoji: text for emoji, text in self.emoji_map.items()}

|

||||

|

||||

# Live instance to dynamically update a Tree renderable (e.g. the Crew tree)

|

||||

# When multiple Tree objects are printed sequentially we reuse this Live

|

||||

# instance so the previous render is replaced instead of writing a new one.

|

||||

@@ -31,6 +72,17 @@ class ConsoleFormatter:

|

||||

# final Tree persists on the terminal.

|

||||

self._live: Optional[Live] = None

|

||||

|

||||

def _get_icon(self, emoji: str) -> str:

|

||||

"""Get emoji or text alternative based on disable_emojis setting."""

|

||||

if self.disable_emojis:

|

||||

if emoji in self.icon_cache:

|

||||

return self.icon_cache[emoji]

|

||||

ascii_fallback = emoji.encode('ascii', 'ignore').decode('ascii')

|

||||

fallback = f"[ICON:{ascii_fallback or 'UNKNOWN'}]"

|

||||

self.icon_cache[emoji] = fallback

|

||||

return fallback

|

||||

return emoji
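The lookup in `_get_icon` can be illustrated with a small standalone class. This is a sketch under assumptions: `IconResolver` and the three-entry `EMOJI_MAP` are hypothetical and only mirror the logic shown in the diff, without any dependency on Rich or CrewAI.

```python
from typing import Dict

# Shortened illustrative map; the diff defines a longer DEFAULT_EMOJI_MAP.
EMOJI_MAP: Dict[str, str] = {"✅": "[DONE]", "❌": "[FAILED]", "🚀": "[CREW]"}


class IconResolver:
    def __init__(self, disable_emojis: bool) -> None:
        self.disable_emojis = disable_emojis
        # Pre-fill the cache with known replacements only when emojis are off.
        self.icon_cache: Dict[str, str] = dict(EMOJI_MAP) if disable_emojis else {}

    def get_icon(self, emoji: str) -> str:
        if not self.disable_emojis:
            return emoji
        if emoji in self.icon_cache:
            return self.icon_cache[emoji]
        # Unknown emoji: keep any ASCII characters, otherwise mark as UNKNOWN.
        ascii_part = emoji.encode("ascii", "ignore").decode("ascii")
        fallback = f"[ICON:{ascii_part or 'UNKNOWN'}]"
        self.icon_cache[emoji] = fallback
        return fallback
```

Unmapped emojis are cached after the first lookup, so repeated calls for the same symbol do not recompute the fallback.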

|

||||

|

||||

def create_panel(self, content: Text, title: str, style: str = "blue") -> Panel:

|

||||

"""Create a standardized panel with consistent styling."""

|

||||

return Panel(

|

||||

@@ -167,15 +219,15 @@ class ConsoleFormatter:

|

||||

return

|

||||

|

||||

if status == "completed":

|

||||

prefix, style = "✅ Crew:", "green"

|

||||

prefix, style = f"{self._get_icon('✅')} Crew:", "green"

|

||||

title = "Crew Completion"

|

||||

content_title = "Crew Execution Completed"

|

||||

elif status == "failed":

|

||||

prefix, style = "❌ Crew:", "red"

|

||||

prefix, style = f"{self._get_icon('❌')} Crew:", "red"

|

||||

title = "Crew Failure"

|

||||

content_title = "Crew Execution Failed"

|

||||

else:

|

||||

prefix, style = "🚀 Crew:", "cyan"

|

||||

prefix, style = f"{self._get_icon('🚀')} Crew:", "cyan"

|

||||

title = "Crew Execution"

|

||||

content_title = "Crew Execution Started"

|

||||

|

||||

@@ -202,7 +254,7 @@ class ConsoleFormatter:

|

||||

return None

|

||||

|

||||

tree = Tree(

|

||||

Text("🚀 Crew: ", style="cyan bold") + Text(crew_name, style="cyan")

|

||||

Text(f"{self._get_icon('🚀')} Crew: ", style="cyan bold") + Text(crew_name, style="cyan")

|

||||

)

|

||||

|

||||

content = self.create_status_content(

|

||||

@@ -227,7 +279,7 @@ class ConsoleFormatter:

|

||||

return None

|

||||

|

||||

task_content = Text()

|

||||

task_content.append(f"📋 Task: {task_id}", style="yellow bold")

|

||||

task_content.append(f"{self._get_icon('📋')} Task: {task_id}", style="yellow bold")

|

||||

task_content.append("\nStatus: ", style="white")

|

||||

task_content.append("Executing Task...", style="yellow dim")

|

||||

|

||||

@@ -258,11 +310,11 @@ class ConsoleFormatter:

|

||||

|

||||

if status == "completed":

|

||||

style = "green"

|

||||

status_text = "✅ Completed"

|

||||

status_text = f"{self._get_icon('✅')} Completed"

|

||||

panel_title = "Task Completion"

|

||||

else:

|

||||

style = "red"

|

||||

status_text = "❌ Failed"

|

||||

status_text = f"{self._get_icon('❌')} Failed"

|

||||

panel_title = "Task Failure"

|

||||

|

||||

# Update tree label

|

||||

@@ -271,7 +323,7 @@ class ConsoleFormatter:

|

||||

# Build label without introducing stray blank lines

|

||||

task_content = Text()

|

||||

# First line: Task ID

|

||||

task_content.append(f"📋 Task: {task_id}", style=f"{style} bold")

|

||||

task_content.append(f"{self._get_icon('📋')} Task: {task_id}", style=f"{style} bold")

|

||||

|

||||

# Second line: Assigned to

|

||||

task_content.append("\nAssigned to: ", style="white")

|

||||

@@ -330,14 +382,14 @@ class ConsoleFormatter:

|

||||

|

||||

# Create initial tree with flow ID

|

||||

flow_label = Text()

|

||||

flow_label.append("🌊 Flow: ", style="blue bold")

|

||||

flow_label.append(f"{self._get_icon('🌊')} Flow: ", style="blue bold")

|

||||

flow_label.append(flow_name, style="blue")

|

||||

flow_label.append("\nID: ", style="white")

|

||||

flow_label.append(flow_id, style="blue")

|

||||

|

||||

flow_tree = Tree(flow_label)

|

||||

self.add_tree_node(flow_tree, "✨ Created", "blue")

|

||||

self.add_tree_node(flow_tree, "✅ Initialization Complete", "green")

|

||||

self.add_tree_node(flow_tree, f"{self._get_icon('✨')} Created", "blue")

|

||||

self.add_tree_node(flow_tree, f"{self._get_icon('✅')} Initialization Complete", "green")

|

||||

|

||||

return flow_tree

|

||||

|

||||

@@ -345,13 +397,13 @@ class ConsoleFormatter:

|

||||

"""Initialize a flow execution tree."""

|

||||

flow_tree = Tree("")

|

||||

flow_label = Text()

|

||||

flow_label.append("🌊 Flow: ", style="blue bold")

|

||||

flow_label.append(f"{self._get_icon('🌊')} Flow: ", style="blue bold")

|

||||

flow_label.append(flow_name, style="blue")

|

||||

flow_label.append("\nID: ", style="white")

|

||||

flow_label.append(flow_id, style="blue")

|

||||

flow_tree.label = flow_label

|

||||

|

||||

self.add_tree_node(flow_tree, "🧠 Starting Flow...", "yellow")

|

||||

self.add_tree_node(flow_tree, f"{self._get_icon('🧠')} Starting Flow...", "yellow")

|

||||

|

||||

self.print(flow_tree)

|

||||

self.print()

|

||||

@@ -373,7 +425,7 @@ class ConsoleFormatter:

|

||||

# Update main flow label

|

||||

self.update_tree_label(

|

||||

flow_tree,

|

||||

"✅ Flow Finished:" if status == "completed" else "❌ Flow Failed:",

|

||||

f"{self._get_icon('✅')} Flow Finished:" if status == "completed" else f"{self._get_icon('❌')} Flow Failed:",

|

||||

flow_name,

|

||||

"green" if status == "completed" else "red",

|

||||

)

|

||||

@@ -383,9 +435,9 @@ class ConsoleFormatter:

|

||||

if "Starting Flow" in str(child.label):

|

||||

child.label = Text(

|

||||

(

|

||||

"✅ Flow Completed"

|

||||

f"{self._get_icon('✅')} Flow Completed"

|

||||

if status == "completed"

|

||||

else "❌ Flow Failed"

|

||||

else f"{self._get_icon('❌')} Flow Failed"

|

||||

),

|

||||

style="green" if status == "completed" else "red",

|

||||

)

|

||||

@@ -418,20 +470,20 @@ class ConsoleFormatter:

|

||||

return None

|

||||

|

||||

if status == "running":

|

||||

prefix, style = "🔄 Running:", "yellow"

|

||||

prefix, style = f"{self._get_icon('🔄')} Running:", "yellow"

|

||||

elif status == "completed":

|

||||

prefix, style = "✅ Completed:", "green"

|

||||

prefix, style = f"{self._get_icon('✅')} Completed:", "green"

|

||||

# Update initialization node when a method completes successfully

|

||||

for child in flow_tree.children:

|

||||

if "Starting Flow" in str(child.label):

|

||||

child.label = Text("Flow Method Step", style="white")

|

||||

break

|

||||

else:

|

||||

prefix, style = "❌ Failed:", "red"

|

||||

prefix, style = f"{self._get_icon('❌')} Failed:", "red"

|

||||

# Update initialization node on failure

|

||||

for child in flow_tree.children:

|

||||

if "Starting Flow" in str(child.label):

|

||||

child.label = Text("❌ Flow Step Failed", style="red")

|

||||

child.label = Text(f"{self._get_icon('❌')} Flow Step Failed", style="red")

|

||||

break

|

||||

|

||||

if not method_branch:

|

||||

@@ -453,7 +505,7 @@ class ConsoleFormatter:

|

||||

|

||||

def get_llm_tree(self, tool_name: str):

|

||||

text = Text()

|

||||

text.append(f"🔧 Using {tool_name} from LLM available_function", style="yellow")

|

||||

text.append(f"{self._get_icon('🔧')} Using {tool_name} from LLM available_function", style="yellow")

|

||||

|

||||

tree = self.current_flow_tree or self.current_crew_tree

|

||||

|

||||

@@ -484,7 +536,7 @@ class ConsoleFormatter:

|

||||

tool_name: str,

|

||||

):

|

||||

tree = self.get_llm_tree(tool_name)

|

||||

self.add_tree_node(tree, "✅ Tool Usage Completed", "green")

|

||||

self.add_tree_node(tree, f"{self._get_icon('✅')} Tool Usage Completed", "green")

|

||||

self.print(tree)

|

||||

self.print()

|

||||

|

||||

@@ -494,7 +546,7 @@ class ConsoleFormatter:

|

||||

error: str,

|

||||

):

|

||||

tree = self.get_llm_tree(tool_name)

|

||||

self.add_tree_node(tree, "❌ Tool Usage Failed", "red")

|

||||

self.add_tree_node(tree, f"{self._get_icon('❌')} Tool Usage Failed", "red")

|

||||

self.print(tree)

|

||||

self.print()

|

||||

|

||||

@@ -541,7 +593,7 @@ class ConsoleFormatter:

|

||||

# Update label with current count

|

||||

self.update_tree_label(

|

||||

tool_branch,

|

||||

"🔧",

|

||||

self._get_icon("🔧"),

|

||||

f"Using {tool_name} ({self.tool_usage_counts[tool_name]})",

|

||||

"yellow",

|

||||

)

|

||||

@@ -570,7 +622,7 @@ class ConsoleFormatter:

|

||||

# Update the existing tool node's label

|

||||

self.update_tree_label(

|

||||

tool_branch,

|

||||

"🔧",

|

||||

self._get_icon("🔧"),

|

||||

f"Used {tool_name} ({self.tool_usage_counts[tool_name]})",

|

||||

"green",

|

||||

)

|

||||

@@ -600,7 +652,7 @@ class ConsoleFormatter:

|

||||

if tool_branch:

|

||||

self.update_tree_label(

|

||||

tool_branch,

|

||||

"🔧 Failed",

|

||||

f"{self._get_icon('🔧')} Failed",

|

||||

f"{tool_name} ({self.tool_usage_counts[tool_name]})",

|

||||

"red",

|

||||

)

|

||||

@@ -646,7 +698,7 @@ class ConsoleFormatter:

|

||||

|

||||

if should_add_thinking:

|

||||

tool_branch = branch_to_use.add("")

|

||||

self.update_tree_label(tool_branch, "🧠", "Thinking...", "blue")

|

||||

self.update_tree_label(tool_branch, self._get_icon("🧠"), "Thinking...", "blue")

|

||||

self.current_tool_branch = tool_branch

|

||||

self.print(tree_to_use)

|

||||

self.print()

|

||||

@@ -756,7 +808,7 @@ class ConsoleFormatter:

|

||||

|

||||

# Update the thinking branch to show failure

|

||||

if thinking_branch_to_update:

|

||||

thinking_branch_to_update.label = Text("❌ LLM Failed", style="red bold")

|

||||

thinking_branch_to_update.label = Text(f"{self._get_icon('❌')} LLM Failed", style="red bold")

|

||||

# Clear the current_tool_branch reference

|

||||

if self.current_tool_branch is thinking_branch_to_update:

|

||||

self.current_tool_branch = None

|

||||

@@ -766,7 +818,7 @@ class ConsoleFormatter:

|

||||

|

||||

# Show error panel

|

||||

error_content = Text()

|

||||

error_content.append("❌ LLM Call Failed\n", style="red bold")

|

||||

error_content.append(f"{self._get_icon('❌')} LLM Call Failed\n", style="red bold")

|

||||

error_content.append("Error: ", style="white")

|

||||

error_content.append(str(error), style="red")

|

||||

|

||||

@@ -781,7 +833,7 @@ class ConsoleFormatter:

|

||||

|

||||

# Create initial panel

|

||||

content = Text()

|

||||

content.append("🧪 Starting Crew Test\n\n", style="blue bold")

|

||||

content.append(f"{self._get_icon('🧪')} Starting Crew Test\n\n", style="blue bold")

|

||||

content.append("Crew: ", style="white")

|

||||

content.append(f"{crew_name}\n", style="blue")

|

||||

content.append("ID: ", style="white")

|

||||

@@ -795,13 +847,13 @@ class ConsoleFormatter:

|

||||

|

||||

# Create and display the test tree

|

||||

test_label = Text()

|

||||

test_label.append("🧪 Test: ", style="blue bold")

|

||||

test_label.append(f"{self._get_icon('🧪')} Test: ", style="blue bold")

|

||||

test_label.append(crew_name or "Crew", style="blue")

|

||||

test_label.append("\nStatus: ", style="white")

|

||||

test_label.append("In Progress", style="yellow")

|

||||

|

||||

test_tree = Tree(test_label)

|

||||

self.add_tree_node(test_tree, "🔄 Running tests...", "yellow")

|

||||

self.add_tree_node(test_tree, f"{self._get_icon('🔄')} Running tests...", "yellow")

|

||||

|

||||

self.print(test_tree)

|

||||

self.print()

|

||||

@@ -817,7 +869,7 @@ class ConsoleFormatter:

|

||||

if flow_tree:

|

||||

# Update test tree label to show completion

|

||||

test_label = Text()

|

||||

test_label.append("✅ Test: ", style="green bold")

|

||||

test_label.append(f"{self._get_icon('✅')} Test: ", style="green bold")

|

||||

test_label.append(crew_name or "Crew", style="green")

|

||||

test_label.append("\nStatus: ", style="white")

|

||||

test_label.append("Completed", style="green bold")

|

||||

@@ -826,7 +878,7 @@ class ConsoleFormatter:

|

||||

# Update the running tests node

|

||||

for child in flow_tree.children:

|

||||

if "Running tests" in str(child.label):

|

||||

child.label = Text("✅ Tests completed successfully", style="green")

|

||||

child.label = Text(f"{self._get_icon('✅')} Tests completed successfully", style="green")

|

||||

break

|

||||

|

||||

self.print(flow_tree)

|

||||

@@ -848,7 +900,7 @@ class ConsoleFormatter:

|

||||

return

|

||||

|

||||

content = Text()

|

||||

content.append("📋 Crew Training Started\n", style="blue bold")

|

||||

content.append(f"{self._get_icon('📋')} Crew Training Started\n", style="blue bold")

|

||||

content.append("Crew: ", style="white")

|

||||

content.append(f"{crew_name}\n", style="blue")

|

||||

content.append("Time: ", style="white")

|

||||

@@ -863,7 +915,7 @@ class ConsoleFormatter:

|

||||

return

|

||||

|

||||

content = Text()

|

||||

content.append("✅ Crew Training Completed\n", style="green bold")

|

||||

content.append(f"{self._get_icon('✅')} Crew Training Completed\n", style="green bold")

|

||||

content.append("Crew: ", style="white")

|

||||

content.append(f"{crew_name}\n", style="green")

|

||||

content.append("Time: ", style="white")

|

||||

@@ -878,7 +930,7 @@ class ConsoleFormatter:

|

||||

return

|

||||

|

||||

failure_content = Text()

|

||||

failure_content.append("❌ Crew Training Failed\n", style="red bold")

|

||||

failure_content.append(f"{self._get_icon('❌')} Crew Training Failed\n", style="red bold")

|

||||

failure_content.append("Crew: ", style="white")

|

||||

failure_content.append(crew_name or "Crew", style="red")

|

||||

|

||||

@@ -891,7 +943,7 @@ class ConsoleFormatter:

|

||||

return

|

||||

|

||||

failure_content = Text()

|

||||

failure_content.append("❌ Crew Test Failed\n", style="red bold")

|

||||

failure_content.append(f"{self._get_icon('❌')} Crew Test Failed\n", style="red bold")

|

||||

failure_content.append("Crew: ", style="white")

|

||||

failure_content.append(crew_name or "Crew", style="red")

|

||||

|

||||

@@ -906,7 +958,7 @@ class ConsoleFormatter:

|

||||

# Create initial tree for LiteAgent if it doesn't exist

|

||||

if not self.current_lite_agent_branch:

|

||||

lite_agent_label = Text()

|

||||

lite_agent_label.append("🤖 LiteAgent: ", style="cyan bold")

|

||||

lite_agent_label.append(f"{self._get_icon('🤖')} LiteAgent: ", style="cyan bold")

|

||||

lite_agent_label.append(lite_agent_role, style="cyan")

|

||||

lite_agent_label.append("\nStatus: ", style="white")

|

||||

lite_agent_label.append("In Progress", style="yellow")

|

||||

@@ -931,15 +983,15 @@ class ConsoleFormatter:

|

||||

|

||||

# Determine style based on status

|

||||

if status == "completed":

|

||||

prefix, style = "✅ LiteAgent:", "green"

|

||||

prefix, style = f"{self._get_icon('✅')} LiteAgent:", "green"

|

||||

status_text = "Completed"

|

||||

title = "LiteAgent Completion"

|

||||

elif status == "failed":

|

||||

prefix, style = "❌ LiteAgent:", "red"

|

||||

prefix, style = f"{self._get_icon('❌')} LiteAgent:", "red"

|

||||

status_text = "Failed"

|

||||

title = "LiteAgent Error"

|

||||

else:

|

||||

prefix, style = "🤖 LiteAgent:", "yellow"

|

||||

prefix, style = f"{self._get_icon('🤖')} LiteAgent:", "yellow"

|

||||

status_text = "In Progress"

|

||||

title = "LiteAgent Status"

|

||||

|

||||

@@ -1010,7 +1062,7 @@ class ConsoleFormatter:

|

||||

|

||||

knowledge_branch = branch_to_use.add("")

|

||||

self.update_tree_label(

|

||||

knowledge_branch, "🔍", "Knowledge Retrieval Started", "blue"

|

||||

knowledge_branch, self._get_icon("🔍"), "Knowledge Retrieval Started", "blue"

|

||||

)

|

||||

|

||||

self.print(tree_to_use)

|

||||

@@ -1041,7 +1093,7 @@ class ConsoleFormatter:

|

||||

|

||||

knowledge_panel = Panel(

|

||||

Text(knowledge_text, style="white"),

|

||||

title="📚 Retrieved Knowledge",

|

||||

title=f"{self._get_icon('📚')} Retrieved Knowledge",

|

||||

border_style="green",

|

||||

padding=(1, 2),

|

||||

)

|

||||

@@ -1053,7 +1105,7 @@ class ConsoleFormatter:

|

||||

for child in branch_to_use.children:

|

||||

if "Knowledge Retrieval Started" in str(child.label):

|

||||

self.update_tree_label(

|

||||

child, "✅", "Knowledge Retrieval Completed", "green"

|

||||

child, self._get_icon("✅"), "Knowledge Retrieval Completed", "green"

|

||||

)

|

||||

knowledge_branch_found = True

|

||||

break

|

||||

@@ -1066,7 +1118,7 @@ class ConsoleFormatter:

|

||||

and "Completed" not in str(child.label)

|

||||

):

|

||||

self.update_tree_label(

|

||||

child, "✅", "Knowledge Retrieval Completed", "green"

|

||||

child, self._get_icon("✅"), "Knowledge Retrieval Completed", "green"

|

||||

)

|

||||

knowledge_branch_found = True

|

||||

break

|

||||

@@ -1074,7 +1126,7 @@ class ConsoleFormatter:

|

||||

if not knowledge_branch_found:

|

||||

knowledge_branch = branch_to_use.add("")

|

||||

self.update_tree_label(

|

||||

knowledge_branch, "✅", "Knowledge Retrieval Completed", "green"

|

||||

knowledge_branch, self._get_icon("✅"), "Knowledge Retrieval Completed", "green"

|

||||

)

|

||||

|

||||

self.print(tree_to_use)

|

||||

@@ -1086,7 +1138,7 @@ class ConsoleFormatter:

|

||||

|

||||

knowledge_panel = Panel(

|

||||

Text(knowledge_text, style="white"),

|

||||

title="📚 Retrieved Knowledge",

|

||||

title=f"{self._get_icon('📚')} Retrieved Knowledge",

|

||||

border_style="green",

|

||||

padding=(1, 2),

|

||||

)

|

||||

@@ -1111,7 +1163,7 @@ class ConsoleFormatter:

|

||||

|

||||

query_branch = branch_to_use.add("")

|

||||

self.update_tree_label(

|

||||

query_branch, "🔎", f"Query: {task_prompt[:50]}...", "yellow"

|

||||

query_branch, self._get_icon("🔎"), f"Query: {task_prompt[:50]}...", "yellow"

|

||||

)

|

||||

|

||||

self.print(tree_to_use)

|

||||

@@ -1132,7 +1184,7 @@ class ConsoleFormatter:

|

||||

|

||||

if branch_to_use and tree_to_use:

|

||||

query_branch = branch_to_use.add("")

|

||||

self.update_tree_label(query_branch, "❌", "Knowledge Query Failed", "red")

|

||||

self.update_tree_label(query_branch, self._get_icon("❌"), "Knowledge Query Failed", "red")

|

||||

self.print(tree_to_use)

|

||||

self.print()

|

||||

|

||||

@@ -1158,7 +1210,7 @@ class ConsoleFormatter:

|

||||

return None

|

||||

|

||||

query_branch = branch_to_use.add("")

|

||||

self.update_tree_label(query_branch, "✅", "Knowledge Query Completed", "green")

|

||||

self.update_tree_label(query_branch, self._get_icon("✅"), "Knowledge Query Completed", "green")

|

||||

|

||||

self.print(tree_to_use)

|

||||

self.print()

|

||||

@@ -1178,7 +1230,7 @@ class ConsoleFormatter:

|

||||

|

||||

if branch_to_use and tree_to_use:

|

||||

query_branch = branch_to_use.add("")

|

||||

self.update_tree_label(query_branch, "❌", "Knowledge Search Failed", "red")

|

||||

self.update_tree_label(query_branch, self._get_icon("❌"), "Knowledge Search Failed", "red")

|

||||

self.print(tree_to_use)

|

||||

self.print()

|

||||

|

||||

@@ -1223,7 +1275,7 @@ class ConsoleFormatter:

|

||||

status_text = (

|

||||

f"Reasoning (Attempt {attempt})" if attempt > 1 else "Reasoning..."

|

||||

)

|

||||

self.update_tree_label(reasoning_branch, "🧠", status_text, "blue")

|

||||

self.update_tree_label(reasoning_branch, self._get_icon("🧠"), status_text, "blue")

|

||||

|

||||

self.print(tree_to_use)

|

||||

self.print()

|

||||

@@ -1254,7 +1306,7 @@ class ConsoleFormatter:

|

||||

)

|

||||

|

||||

if reasoning_branch is not None:

|

||||

self.update_tree_label(reasoning_branch, "✅", status_text, style)

|

||||

self.update_tree_label(reasoning_branch, self._get_icon("✅"), status_text, style)

|

||||

|

||||

if tree_to_use is not None:

|

||||

self.print(tree_to_use)

|

||||

@@ -1263,7 +1315,7 @@ class ConsoleFormatter:

|

||||

if plan:

|

||||

plan_panel = Panel(

|

||||

Text(plan, style="white"),

|

||||

title="🧠 Reasoning Plan",

|

||||

title=f"{self._get_icon('🧠')} Reasoning Plan",

|

||||

border_style=style,

|

||||

padding=(1, 2),

|

||||

)

|

||||

@@ -1292,7 +1344,7 @@ class ConsoleFormatter:

|

||||

)

|

||||

|

||||

if reasoning_branch is not None:

|

||||

self.update_tree_label(reasoning_branch, "❌", "Reasoning Failed", "red")

|

||||

self.update_tree_label(reasoning_branch, self._get_icon("❌"), "Reasoning Failed", "red")

|

||||

|

||||

if tree_to_use is not None:

|

||||

self.print(tree_to_use)

|

||||

@@ -1335,7 +1387,7 @@ class ConsoleFormatter:

|

||||

# Create and display the panel

|

||||

agent_panel = Panel(

|

||||

content,

|

||||

title="🤖 Agent Started",

|

||||

title=f"{self._get_icon('🤖')} Agent Started",

|

||||

border_style="magenta",

|

||||

padding=(1, 2),

|

||||

)

|

||||

@@ -1406,7 +1458,7 @@ class ConsoleFormatter:

|

||||

# Create the main action panel

|

||||

action_panel = Panel(

|

||||

main_content,

|

||||

title="🔧 Agent Tool Execution",

|

||||

title=f"{self._get_icon('🔧')} Agent Tool Execution",

|

||||

border_style="magenta",

|

||||

padding=(1, 2),

|

||||

)

|

||||

@@ -1448,7 +1500,7 @@ class ConsoleFormatter:

|

||||

# Create and display the finish panel

|

||||

finish_panel = Panel(

|

||||

content,

|

||||

title="✅ Agent Final Answer",

|

||||

title=f"{self._get_icon('✅')} Agent Final Answer",

|

||||

border_style="green",

|

||||

padding=(1, 2),

|

||||

)

|

||||

|

||||

@@ -261,3 +261,104 @@ __all__ = ['MyTool']

|

||||

captured = capsys.readouterr()

|

||||

|

||||

assert "was never closed" in captured.out

|

||||

|

||||

|

||||

@pytest.fixture

|

||||

def mock_crew():

|

||||

from crewai.crew import Crew

|

||||

|

||||

class MockCrew(Crew):

|

||||

def __init__(self):

|

||||

pass

|

||||

|

||||

return MockCrew()

|

||||

|

||||

|

||||

@pytest.fixture

|

||||

def temp_crew_project():

|

||||

with tempfile.TemporaryDirectory() as temp_dir:

|

||||

old_cwd = os.getcwd()

|

||||

os.chdir(temp_dir)

|

||||

|

||||

crew_content = """

|

||||

from crewai.crew import Crew

|

||||

from crewai.agent import Agent

|

||||

|

||||

def create_crew() -> Crew:

|

||||

agent = Agent(role="test", goal="test", backstory="test")

|

||||

return Crew(agents=[agent], tasks=[])

|

||||

|

||||

# Direct crew instance

|

||||

direct_crew = Crew(agents=[], tasks=[])

|

||||

"""

|

||||

|

||||

with open("crew.py", "w") as f:

|

||||

f.write(crew_content)

|

||||

|

||||

os.makedirs("src", exist_ok=True)

|

||||

with open(os.path.join("src", "crew.py"), "w") as f:

|

||||

f.write(crew_content)

|

||||

|

||||

# Create a src/templates directory that should be ignored

|

||||

os.makedirs(os.path.join("src", "templates"), exist_ok=True)

|

||||

with open(os.path.join("src", "templates", "crew.py"), "w") as f:

|

||||

f.write("# This should be ignored")

|

||||

|

||||

yield temp_dir

|

||||

|

||||

os.chdir(old_cwd)
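The `temp_crew_project` fixture switches the working directory and switches back after the `yield`. If something fails between the first `os.chdir` and the `yield` (for example while writing the files), the final `os.chdir(old_cwd)` is never reached. A sketch of one way to guard against that, using a hypothetical `working_directory` context manager built only on the standard library:

```python
import contextlib
import os
import tempfile
from typing import Iterator


@contextlib.contextmanager
def working_directory(path: str) -> Iterator[str]:
    """Temporarily switch the process working directory to `path`."""
    old_cwd = os.getcwd()
    os.chdir(path)
    try:
        yield path
    finally:
        # Runs on normal exit and on exceptions alike.
        os.chdir(old_cwd)


def demo() -> bool:
    """Return True if the original directory is restored after an error."""
    before = os.getcwd()
    with tempfile.TemporaryDirectory() as temp_dir:
        try:
            with working_directory(temp_dir):
                raise RuntimeError("simulated setup failure")
        except RuntimeError:
            pass
    return os.getcwd() == before
```

Inside a pytest fixture, the built-in `monkeypatch.chdir` gives a similar guarantee without a custom helper.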

|

||||

|

||||

|

||||

def test_get_crews_finds_valid_crews(temp_crew_project, monkeypatch, mock_crew):

|

||||

def mock_fetch_crews(module_attr):

|

||||

return [mock_crew]

|

||||

|

||||

monkeypatch.setattr(utils, "fetch_crews", mock_fetch_crews)

|

||||

|

||||

crews = utils.get_crews()

|

||||

|

||||

assert len(crews) > 0

|

||||

assert mock_crew in crews

|

||||

|

||||

|

||||

def test_get_crews_with_nonexistent_file(temp_crew_project):

|

||||

crews = utils.get_crews(crew_path="nonexistent.py", require=False)

|

||||

assert len(crews) == 0

|

||||

|

||||

|

||||

def test_get_crews_with_required_nonexistent_file(temp_crew_project, capsys):

|

||||

with pytest.raises(SystemExit):

|

||||

utils.get_crews(crew_path="nonexistent.py", require=True)

|

||||

|

||||

captured = capsys.readouterr()

|

||||

assert "No valid Crew instance found" in captured.out

|

||||

|

||||

|

||||

def test_get_crews_with_invalid_module(temp_crew_project, capsys):

|

||||

with open("crew.py", "w") as f:

|

||||

f.write("import nonexistent_module\n")

|

||||

|

||||

crews = utils.get_crews(crew_path="crew.py", require=False)

|

||||

assert len(crews) == 0

|

||||

|

||||

with pytest.raises(SystemExit):

|

||||

utils.get_crews(crew_path="crew.py", require=True)

|

||||

|

||||

captured = capsys.readouterr()

|

||||

assert "Error" in captured.out

|

||||

|

||||

|

||||

def test_get_crews_ignores_template_directories(temp_crew_project, monkeypatch, mock_crew):

|

||||

template_crew_detected = False

|

||||

|

||||

def mock_fetch_crews(module_attr):

|

||||

nonlocal template_crew_detected

|

||||

if hasattr(module_attr, "__file__") and "templates" in module_attr.__file__:

|

||||

template_crew_detected = True

|

||||

return [mock_crew]

|

||||

|

||||

monkeypatch.setattr(utils, "fetch_crews", mock_fetch_crews)

|

||||

|

||||

utils.get_crews()

|

||||

|

||||

assert not template_crew_detected

|

||||

|

||||

@@ -1,5 +1,5 @@

|

||||

from typing import List

|

||||

|

||||

from unittest.mock import Mock, patch

|

||||

import pytest

|

||||

|

||||

from crewai.agent import Agent

|

||||

@@ -16,7 +16,7 @@ from crewai.project import (

|

||||

task,

|

||||

)

|

||||

from crewai.task import Task

|

||||

|

||||

from crewai.tools import tool

|

||||

|

||||

class SimpleCrew:

|

||||

@agent

|

||||

@@ -85,6 +85,14 @@ class InternalCrew:

|

||||

def crew(self):

|

||||

return Crew(agents=self.agents, tasks=self.tasks, verbose=True)

|

||||

|

||||

@CrewBase

|

||||

class InternalCrewWithMCP(InternalCrew):

|

||||

mcp_server_params = {"host": "localhost", "port": 8000}

|

||||

|

||||

@agent

|

||||

def reporting_analyst(self):

|

||||

return Agent(config=self.agents_config["reporting_analyst"], tools=self.get_mcp_tools()) # type: ignore[index]

|

||||

|

||||

|

||||

def test_agent_memoization():

|

||||

crew = SimpleCrew()

|

||||

@@ -237,3 +245,17 @@ def test_multiple_before_after_kickoff():

|

||||

def test_crew_name():

|

||||

crew = InternalCrew()

|

||||

assert crew._crew_name == "InternalCrew"

|

||||

|

||||

@tool

|

||||

def simple_tool():

|

||||

"""Return 'Hi!'"""

|

||||

return "Hi!"

|

||||

|

||||

def test_internal_crew_with_mcp():

|

||||

mock = Mock()

|

||||

mock.tools = [simple_tool]

|

||||

with patch("crewai_tools.MCPServerAdapter", return_value=mock) as adapter_mock:

|

||||

crew = InternalCrewWithMCP()

|

||||

assert crew.reporting_analyst().tools == [simple_tool]

|

||||

|

||||

adapter_mock.assert_called_once_with({"host": "localhost", "port": 8000})
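`test_internal_crew_with_mcp` relies on `patch(..., return_value=mock)`: the patched name becomes a mock callable, and calling it returns the prepared `Mock` instead of constructing a real adapter. The sketch below shows the same mechanism with standard-library pieces only; `Adapter`, `Registry`, and `load_tools` are hypothetical stand-ins and do not reflect how `crewai_tools.MCPServerAdapter` is implemented.

```python
from unittest.mock import Mock, patch


class Adapter:
    """Hypothetical stand-in for an adapter that would open a network connection."""

    def __init__(self, params: dict) -> None:
        raise ConnectionError("no server available in tests")


class Registry:
    """Hypothetical namespace holding the adapter class so it can be patched."""

    adapter_cls = Adapter


def load_tools(params: dict) -> list:
    # The class is looked up at call time, so a patch on Registry takes effect.
    return Registry.adapter_cls(params).tools


def run_demo() -> list:
    fake = Mock()
    fake.tools = ["simple_tool"]
    # While the patch is active, Registry.adapter_cls(...) returns `fake`.
    with patch.object(Registry, "adapter_cls", return_value=fake) as adapter_mock:
        tools = load_tools({"host": "localhost", "port": 8000})
        adapter_mock.assert_called_once_with({"host": "localhost", "port": 8000})
    return tools
```

The `assert_called_once_with` check mirrors the assertion in the test above: it confirms that the server parameters reach the adapter unchanged.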

|

||||

1

tests/utilities/events/utils/__init__.py

Normal file

@@ -0,0 +1 @@

|

||||

|

||||

@@ -0,0 +1,270 @@

|

||||

import os

|

||||

from unittest.mock import patch

|

||||

from rich.tree import Tree

|

||||

|

||||

from crewai.utilities.events.utils.console_formatter import ConsoleFormatter

|

||||

|

||||

|

||||

class TestConsoleFormatterEmojiDisable:

|

||||