Mirror of https://github.com/crewAIInc/crewAI.git, synced 2026-01-08 07:38:29 +00:00

Compare commits: devin/1748 ... joaomdmour (38 Commits)

| Author | SHA1 | Date |
|---|---|---|
| | 2ab4986b25 | |
| | 3161a871b3 | |
| | 2242545a2e | |
| | 1fd9c5b697 | |
| | 28c1efc933 | |
| | 9fccd2726b | |
| | 94c8412724 | |
| | 1b18310cf7 | |
| | 81f4182e3b | |
| | b36b150e20 | |
| | 2c2b60410b | |
| | 556ce2300c | |
| | 9355f980f5 | |
| | db83843317 | |
| | e947cb26ee | |
| | 757910439a | |
| | 9444d3a762 | |
| | fdba0c8a03 | |
| | 53fed872f2 | |
| | 923e3a9c6e | |
| | 21d063a46c | |
| | 02912a653e | |
| | f1cfba7527 | |
| | 3e075cd48d | |
| | e03ec4d60f | |
| | ba740c6157 | |
| | 34c813ed79 | |
| | 545cc2ffe4 | |
| | 47b97d9b7f | |
| | bf8fbb0a44 | |
| | 552921cf83 | |
| | 372874fb3a | |
| | 2bd6b72aae | |
| | f02e0060fa | |
| | 66b7628972 | |
| | c045399d6b | |
| | 1da2fd2a5c | |
| | e07e11fbe7 | |

5

.github/workflows/linter.yml

vendored


@@ -30,4 +30,7 @@ jobs:
  - name: Run Ruff on Changed Files
    if: ${{ steps.changed-files.outputs.files != '' }}
    run: |
-     echo "${{ steps.changed-files.outputs.files }}" | tr " " "\n" | xargs -I{} ruff check "{}"
+     echo "${{ steps.changed-files.outputs.files }}" \
+     | tr ' ' '\n' \
+     | grep -v 'src/crewai/cli/templates/' \
+     | xargs -I{} ruff check "{}"

2

.github/workflows/tests.yml

vendored


@@ -14,7 +14,7 @@ jobs:
  timeout-minutes: 15
  strategy:
    matrix:
-     python-version: ['3.10', '3.11', '3.12']
+     python-version: ['3.10', '3.11', '3.12', '3.13']
  steps:
    - name: Checkout code
      uses: actions/checkout@v4

@@ -161,7 +161,7 @@ To get started with CrewAI, follow these simple steps:

|

||||

|

||||

### 1. Installation

|

||||

|

||||

Ensure you have Python >=3.10 <3.13 installed on your system. CrewAI uses [UV](https://docs.astral.sh/uv/) for dependency management and package handling, offering a seamless setup and execution experience.

|

||||

Ensure you have Python >=3.10 <3.14 installed on your system. CrewAI uses [UV](https://docs.astral.sh/uv/) for dependency management and package handling, offering a seamless setup and execution experience.
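The revised range (at least 3.10, below 3.14) can be checked from Python itself. The following is a minimal sketch; the helper name `is_supported` is hypothetical and is not part of CrewAI.

```python
import sys


def is_supported(version: tuple) -> bool:
    """Return True when (major, minor) satisfies >=3.10 and <3.14."""
    # Tuples compare element by element, so (3, 9) < (3, 10) < (3, 14).
    return (3, 10) <= tuple(version[:2]) < (3, 14)


# sys.version_info[:2] yields the running interpreter's (major, minor) pair.
print(is_supported(sys.version_info[:2]))
```

Comparing only the major and minor components keeps every 3.13.x patch release inside the range.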

|

||||

|

||||

First, install CrewAI:

|

||||

|

||||

@@ -403,7 +403,7 @@ In addition to the sequential process, you can use the hierarchical process, whi

|

||||

|

||||

## Key Features

|

||||

|

||||

CrewAI stands apart as a lean, standalone, high-performance framework delivering simplicity, flexibility, and precise control—free from the complexity and limitations found in other agent frameworks.

|

||||

CrewAI stands apart as a lean, standalone, high-performance multi-AI Agent framework delivering simplicity, flexibility, and precise control—free from the complexity and limitations found in other agent frameworks.

|

||||

|

||||

- **Standalone & Lean**: Completely independent from other frameworks like LangChain, offering faster execution and lighter resource demands.

|

||||

- **Flexible & Precise**: Easily orchestrate autonomous agents through intuitive [Crews](https://docs.crewai.com/concepts/crews) or precise [Flows](https://docs.crewai.com/concepts/flows), achieving perfect balance for your needs.

|

||||

|

||||

@@ -43,7 +43,6 @@ The Visual Agent Builder enables:
  | **Max Iterations** _(optional)_ | `max_iter` | `int` | Maximum iterations before the agent must provide its best answer. Default is 20. |
  | **Max RPM** _(optional)_ | `max_rpm` | `Optional[int]` | Maximum requests per minute to avoid rate limits. |
  | **Max Execution Time** _(optional)_ | `max_execution_time` | `Optional[int]` | Maximum time (in seconds) for task execution. |
- | **Memory** _(optional)_ | `memory` | `bool` | Whether the agent should maintain memory of interactions. Default is True. |
  | **Verbose** _(optional)_ | `verbose` | `bool` | Enable detailed execution logs for debugging. Default is False. |
  | **Allow Delegation** _(optional)_ | `allow_delegation` | `bool` | Allow the agent to delegate tasks to other agents. Default is False. |
  | **Step Callback** _(optional)_ | `step_callback` | `Optional[Any]` | Function called after each agent step, overrides crew callback. |
@@ -156,7 +155,6 @@ agent = Agent(
      "you excel at finding patterns in complex datasets.",
      llm="gpt-4", # Default: OPENAI_MODEL_NAME or "gpt-4"
      function_calling_llm=None, # Optional: Separate LLM for tool calling
-     memory=True, # Default: True
      verbose=False, # Default: False
      allow_delegation=False, # Default: False
      max_iter=20, # Default: 20 iterations
@@ -537,7 +535,6 @@ The context window management feature works automatically in the background. You
  - Adjust `max_iter` and `max_retry_limit` based on task complexity

  ### Memory and Context Management
- - Use `memory: true` for tasks requiring historical context
  - Leverage `knowledge_sources` for domain-specific information
  - Configure `embedder` when using custom embedding models
  - Use custom templates (`system_template`, `prompt_template`, `response_template`) for fine-grained control over agent behavior
@@ -585,7 +582,6 @@ The context window management feature works automatically in the background. You
  - Review code sandbox settings

  4. **Memory Issues**: If agent responses seem inconsistent:
- - Verify memory is enabled
  - Check knowledge source configuration
  - Review conversation history management


@@ -325,12 +325,12 @@ for result in results:

  # Example of using kickoff_async
  inputs = {'topic': 'AI in healthcare'}
- async_result = my_crew.kickoff_async(inputs=inputs)
+ async_result = await my_crew.kickoff_async(inputs=inputs)
  print(async_result)

  # Example of using kickoff_for_each_async
  inputs_array = [{'topic': 'AI in healthcare'}, {'topic': 'AI in finance'}]
- async_results = my_crew.kickoff_for_each_async(inputs=inputs_array)
+ async_results = await my_crew.kickoff_for_each_async(inputs=inputs_array)
  for async_result in async_results:
      print(async_result)
  ```

@@ -602,6 +602,30 @@ agent = Agent(

|

||||

)

|

||||

```

|

||||

|

||||

#### Configuring Azure OpenAI Embeddings

|

||||

|

||||

When using Azure OpenAI embeddings:

|

||||

1. Deploy the embedding model on the Azure platform first.

2. Then use the following configuration:

|

||||

|

||||

```python

|

||||

agent = Agent(

|

||||

role="Researcher",

|

||||

goal="Research topics",

|

||||

backstory="Expert researcher",

|

||||

knowledge_sources=[knowledge_source],

|

||||

embedder={

|

||||

"provider": "azure",

|

||||

"config": {

|

||||

"api_key": "your-azure-api-key",

|

||||

"model": "text-embedding-ada-002", # change to the model you are using and is deployed in Azure

|

||||

"api_base": "https://your-azure-endpoint.openai.azure.com/",

|

||||

"api_version": "2024-02-01"

|

||||

}

|

||||

}

|

||||

)

|

||||

```
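To avoid hardcoding the key, the same `embedder` dictionary can be assembled from environment variables. This is a sketch under assumptions: the helper name `azure_embedder_config` and the variable names `AZURE_OPENAI_API_KEY`, `AZURE_OPENAI_ENDPOINT`, and `AZURE_OPENAI_API_VERSION` are illustrative choices, not names defined by CrewAI. Only the dictionary keys come from the configuration above.

```python
import os


def azure_embedder_config(model: str = "text-embedding-ada-002") -> dict:
    """Build the embedder dict shown above from environment variables."""
    return {
        "provider": "azure",
        "config": {
            # Hypothetical variable names; a missing key raises KeyError early.
            "api_key": os.environ["AZURE_OPENAI_API_KEY"],
            "model": model,  # must match the model deployed in Azure
            "api_base": os.environ["AZURE_OPENAI_ENDPOINT"],
            # Falls back to the API version used in the example above.
            "api_version": os.environ.get("AZURE_OPENAI_API_VERSION", "2024-02-01"),
        },
    }
```

The returned dictionary would then be passed as `embedder=azure_embedder_config()` when constructing the agent.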

|

||||

|

||||

## Advanced Features

|

||||

|

||||

### Query Rewriting

|

||||

|

||||

@@ -6,11 +6,11 @@ icon: brain

|

||||

|

||||

## Overview

|

||||

|

||||

Agent reasoning is a feature that allows agents to reflect on a task and create a plan before execution. This helps agents approach tasks more methodically and ensures they're ready to perform the assigned work.

|

||||

Agent reasoning is a feature that allows agents to reflect on a task and create a plan before and during execution. This helps agents approach tasks more methodically and adapt their strategy as they progress through complex tasks.

|

||||

|

||||

## Usage

|

||||

|

||||

To enable reasoning for an agent, simply set `reasoning=True` when creating the agent:

|

||||

To enable reasoning for an agent, set `reasoning=True` when creating the agent:

|

||||

|

||||

```python

|

||||

from crewai import Agent

|

||||

@@ -19,13 +19,43 @@ agent = Agent(

|

||||

role="Data Analyst",

|

||||

goal="Analyze complex datasets and provide insights",

|

||||

backstory="You are an experienced data analyst with expertise in finding patterns in complex data.",

|

||||

reasoning=True, # Enable reasoning

|

||||

reasoning=True, # Enable basic reasoning

|

||||

max_reasoning_attempts=3 # Optional: Set a maximum number of reasoning attempts

|

||||

)

|

||||

```

|

||||

|

||||

### Interval-based Reasoning

|

||||

|

||||

To enable periodic reasoning during task execution, set `reasoning_interval` to specify how often the agent should re-evaluate its plan:

|

||||

|

||||

```python

|

||||

agent = Agent(

|

||||

role="Research Analyst",

|

||||

goal="Find comprehensive information about a topic",

|

||||

backstory="You are a skilled research analyst who methodically approaches information gathering.",

|

||||

reasoning=True,

|

||||

reasoning_interval=3, # Re-evaluate plan every 3 steps

|

||||

)

|

||||

```

|

||||

|

||||

### Adaptive Reasoning

|

||||

|

||||

For more dynamic reasoning that adapts to the execution context, enable `adaptive_reasoning`:

|

||||

|

||||

```python

|

||||

agent = Agent(

|

||||

role="Strategic Advisor",

|

||||

goal="Provide strategic advice based on market research",

|

||||

backstory="You are an experienced strategic advisor who adapts your approach based on the information you discover.",

|

||||

reasoning=True,

|

||||

adaptive_reasoning=True, # Agent decides when to reason based on context

|

||||

)

|

||||

```

|

||||

|

||||

## How It Works

|

||||

|

||||

### Initial Reasoning

|

||||

|

||||

When reasoning is enabled, before executing a task, the agent will:

|

||||

|

||||

1. Reflect on the task and create a detailed plan

|

||||

@@ -33,7 +63,17 @@ When reasoning is enabled, before executing a task, the agent will:

|

||||

3. Refine the plan as necessary until it's ready or max_reasoning_attempts is reached

|

||||

4. Inject the reasoning plan into the task description before execution

|

||||

|

||||

This process helps the agent break down complex tasks into manageable steps and identify potential challenges before starting.
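The refine-until-ready loop described above can be sketched as follows. This is an illustration, not CrewAI's implementation: `plan_before_execution` and its three callables (`create_plan`, `is_ready`, `refine_plan`) are hypothetical stand-ins for LLM calls, and the readiness check is passed in because the text here does not show how it is performed.

```python
from typing import Callable, Optional


def plan_before_execution(
    description: str,
    create_plan: Callable[[str], str],
    is_ready: Callable[[str], bool],
    refine_plan: Callable[[str], str],
    max_reasoning_attempts: Optional[int] = None,
) -> str:
    """Return the task description with a reasoning plan appended."""
    plan = create_plan(description)
    attempts = 1  # the initial plan counts as the first attempt
    # Refine until the plan is judged ready or the attempt limit is reached.
    # With max_reasoning_attempts=None, only readiness ends the loop.
    while not is_ready(plan):
        if max_reasoning_attempts is not None and attempts >= max_reasoning_attempts:
            break
        plan = refine_plan(plan)
        attempts += 1
    # Inject the plan into the task description before execution.
    return f"{description}\n\nReasoning Plan:\n{plan}"


result = plan_before_execution(
    "Analyze sales data",
    create_plan=lambda d: "draft",
    is_ready=lambda p: p.endswith("READY"),
    refine_plan=lambda p: p + " READY",
    max_reasoning_attempts=3,
)
print(result)
```

Counting the initial plan as an attempt is a design choice made for this sketch; the source does not say whether the limit includes it.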

|

||||

### Mid-execution Reasoning

|

||||

|

||||

During task execution, the agent can re-evaluate and adjust its plan based on:

|

||||

|

||||

1. **Interval-based reasoning**: The agent reasons after a fixed number of steps (specified by `reasoning_interval`)

|

||||

2. **Adaptive reasoning**: The agent uses its LLM to intelligently decide when reasoning is needed based on:

|

||||

- Current execution context (task description, expected output, steps taken)

|

||||

- The agent's own judgment about whether strategic reassessment would be beneficial

|

||||

- Automatic fallback when recent errors or failures are detected in the execution

|

||||

|

||||

This mid-execution reasoning helps agents adapt to new information, overcome obstacles, and optimize their approach as they work through complex tasks.

|

||||

|

||||

## Configuration Options

|

||||

|

||||

@@ -45,35 +85,44 @@ This process helps the agent break down complex tasks into manageable steps and

|

||||

Maximum number of attempts to refine the plan before proceeding with execution. If None (default), the agent will continue refining until it's ready.

|

||||

</ParamField>

|

||||

|

||||

## Example

|

||||

<ParamField body="reasoning_interval" type="int" default="None">

|

||||

Interval of steps after which the agent should reason again during execution. If None, reasoning only happens before execution.

|

||||

</ParamField>

|

||||

|

||||

Here's a complete example:

|

||||

<ParamField body="adaptive_reasoning" type="bool" default="False">

|

||||

Whether the agent should adaptively decide when to reason during execution based on context.

|

||||

</ParamField>

|

||||

|

||||

```python

|

||||

from crewai import Agent, Task, Crew

|

||||

## Technical Implementation

|

||||

|

||||

# Create an agent with reasoning enabled

|

||||

analyst = Agent(

|

||||

role="Data Analyst",

|

||||

goal="Analyze data and provide insights",

|

||||

backstory="You are an expert data analyst.",

|

||||

reasoning=True,

|

||||

max_reasoning_attempts=3 # Optional: Set a limit on reasoning attempts

|

||||

)

|

||||

### Interval-based Reasoning

|

||||

|

||||

# Create a task

|

||||

analysis_task = Task(

|

||||

description="Analyze the provided sales data and identify key trends.",

|

||||

expected_output="A report highlighting the top 3 sales trends.",

|

||||

agent=analyst

|

||||

)

|

||||

The interval-based reasoning feature works by:

|

||||

|

||||

# Create a crew and run the task

|

||||

crew = Crew(agents=[analyst], tasks=[analysis_task])

|

||||

result = crew.kickoff()

|

||||

1. Tracking the number of steps since the last reasoning event

|

||||

2. Triggering reasoning when `steps_since_reasoning >= reasoning_interval`

|

||||

3. Resetting the counter after each reasoning event

|

||||

4. Generating an updated plan based on current progress

|

||||

|

||||

print(result)

|

||||

```

|

||||

This creates a predictable pattern of reflection during task execution, which is useful for complex tasks where periodic reassessment is beneficial.
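The counter logic in the four steps above can be sketched in a few lines. The class name `IntervalReasoningTracker` and the method `record_step` are hypothetical; only `reasoning_interval` and the `steps_since_reasoning >= reasoning_interval` condition come from the text.

```python
from typing import Optional


class IntervalReasoningTracker:
    """Tracks steps and signals when interval-based reasoning is due."""

    def __init__(self, reasoning_interval: Optional[int]) -> None:
        self.reasoning_interval = reasoning_interval
        self.steps_since_reasoning = 0

    def record_step(self) -> bool:
        """Count one step; return True when the agent should reason again."""
        self.steps_since_reasoning += 1
        if self.reasoning_interval is None:
            return False  # reasoning only happens before execution
        if self.steps_since_reasoning >= self.reasoning_interval:
            self.steps_since_reasoning = 0  # reset after each reasoning event
            return True
        return False


tracker = IntervalReasoningTracker(reasoning_interval=3)
triggers = [tracker.record_step() for _ in range(7)]
print(triggers)  # [False, False, True, False, False, True, False]
```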

|

||||

|

||||

### Adaptive Reasoning

|

||||

|

||||

The adaptive reasoning feature uses LLM function calling to determine when reasoning should occur:

|

||||

|

||||

1. **LLM-based decision**: The agent's LLM evaluates the current execution context (task description, expected output, steps taken so far) to decide if reasoning is needed

|

||||

2. **Error detection fallback**: When recent messages contain error indicators like "error", "exception", "failed", etc., reasoning is automatically triggered

|

||||

|

||||

This creates an intelligent reasoning pattern where the agent uses its own judgment to determine when strategic reassessment would be most beneficial, while maintaining automatic error recovery.
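A rough sketch of how the two triggers might combine. Everything here is an assumption for illustration: the function names, the three-message window, and the choice to reason when either trigger fires. The indicator list contains only the three words named above, since the full list is not given.

```python
from typing import Callable, Sequence

# Only the indicators named in the text; the real list may be longer.
ERROR_INDICATORS = ("error", "exception", "failed")


def has_recent_errors(messages: Sequence[str], window: int = 3) -> bool:
    """Return True if any of the last `window` messages mentions an error."""
    recent = messages[-window:]
    return any(ind in msg.lower() for msg in recent for ind in ERROR_INDICATORS)


def should_reason(
    messages: Sequence[str],
    llm_says_reason: Callable[[Sequence[str]], bool],
) -> bool:
    """Reason when the LLM asks for it, or when recent errors are detected."""
    return llm_says_reason(messages) or has_recent_errors(messages)


print(should_reason(["Fetched data", "Tool call FAILED: timeout"], lambda m: False))
```

Here `llm_says_reason` stands in for the LLM function call; passing it as a callable keeps the sketch runnable without a model.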

|

||||

|

||||

### Mid-execution Reasoning Process

|

||||

|

||||

When mid-execution reasoning is triggered, the agent:

|

||||

|

||||

1. Summarizes current progress (steps taken, tools used, recent actions)

|

||||

2. Evaluates the effectiveness of the current approach

|

||||

3. Adjusts the plan based on new information and challenges encountered

|

||||

4. Continues execution with the updated plan
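Step 1, the progress summary, might look like the following sketch. The `Step` record and `summarize_progress` helper are hypothetical; steps 2 through 4 depend on the LLM and are not modeled here.

```python
from dataclasses import dataclass


@dataclass
class Step:
    tool: str    # name of the tool used in this step
    action: str  # short description of what the step did


def summarize_progress(steps: list[Step], recent: int = 3) -> str:
    """Summarize steps taken, tools used, and the most recent actions."""
    tools = sorted({s.tool for s in steps})
    recent_actions = [s.action for s in steps[-recent:]]
    return (
        f"Steps taken: {len(steps)}\n"
        f"Tools used: {', '.join(tools) or 'none'}\n"
        f"Recent actions: {'; '.join(recent_actions) or 'none'}"
    )


history = [
    Step("search", "Searched for sales reports"),
    Step("calculator", "Computed monthly totals"),
    Step("search", "Looked up seasonal benchmarks"),
]
print(summarize_progress(history))
```

A summary like this would be handed to the model together with the current plan so it can evaluate and adjust its approach.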

|

||||

|

||||

## Error Handling

|

||||

|

||||

@@ -93,7 +142,7 @@ agent = Agent(

|

||||

role="Data Analyst",

|

||||

goal="Analyze data and provide insights",

|

||||

reasoning=True,

|

||||

max_reasoning_attempts=3

|

||||

reasoning_interval=5 # Re-evaluate plan every 5 steps

|

||||

)

|

||||

|

||||

# Create a task

|

||||

@@ -144,4 +193,33 @@ I'll analyze the sales data to identify the top 3 trends.

|

||||

READY: I am ready to execute the task.

|

||||

```

|

||||

|

||||

This reasoning plan helps the agent organize its approach to the task, consider potential challenges, and ensure it delivers the expected output.

|

||||

During execution, the agent might generate an updated plan:

|

||||

|

||||

```

|

||||

Based on progress so far (3 steps completed):

|

||||

|

||||

Updated Reasoning Plan:

|

||||

After examining the data structure and initial exploratory analysis, I need to adjust my approach:

|

||||

|

||||

1. Current findings:

|

||||

- The data shows seasonal patterns that need deeper investigation

|

||||

- Customer segments show varying purchasing behaviors

|

||||

- There are outliers in the luxury product category

|

||||

|

||||

2. Adjusted approach:

|

||||

- Focus more on seasonal analysis with year-over-year comparisons

|

||||

- Segment analysis by both demographics and purchasing frequency

|

||||

- Investigate the luxury product category anomalies

|

||||

|

||||

3. Next steps:

|

||||

- Apply time series analysis to better quantify seasonal patterns

|

||||

- Create customer cohorts for more precise segmentation

|

||||

- Perform statistical tests on the luxury category data

|

||||

|

||||

4. Expected outcome:

|

||||

Still on track to deliver the top 3 sales trends, but with more precise quantification and actionable insights.

|

||||

|

||||

READY: I am ready to continue executing the task.

|

||||

```

|

||||

|

||||

This mid-execution reasoning helps the agent adapt its approach based on what it has learned during the initial steps of the task.

|

||||

|

||||

@@ -85,7 +85,12 @@
  {
    "group": "MCP Integration",
    "pages": [
-     "mcp/crewai-mcp-integration"
+     "mcp/overview",
+     "mcp/stdio",
+     "mcp/sse",
+     "mcp/streamable-http",
+     "mcp/multiple-servers",
+     "mcp/security"
    ]
  },
  {
@@ -208,6 +213,7 @@
  "group": "Learn",
  "pages": [
    "learn/overview",
+   "learn/llm-selection-guide",
    "learn/conditional-tasks",
    "learn/coding-agents",
    "learn/create-custom-tools",

@@ -25,8 +25,13 @@ AI hallucinations occur when language models generate content that appears plaus
  from crewai.tasks.hallucination_guardrail import HallucinationGuardrail
  from crewai import LLM

- # Initialize the guardrail with reference context
+ # Basic usage - will use task's expected_output as context
  guardrail = HallucinationGuardrail(
+     llm=LLM(model="gpt-4o-mini")
+ )
+
+ # With explicit reference context
+ context_guardrail = HallucinationGuardrail(
      context="AI helps with various tasks including analysis and generation.",
      llm=LLM(model="gpt-4o-mini")
  )

@@ -21,6 +21,7 @@ Before using the Tool Repository, ensure you have:

  - A [CrewAI Enterprise](https://app.crewai.com) account
  - [CrewAI CLI](https://docs.crewai.com/concepts/cli#cli) installed
+ - uv>=0.5.0 installed. Check out [how to upgrade](https://docs.astral.sh/uv/getting-started/installation/#upgrading-uv)
  - [Git](https://git-scm.com) installed and configured
  - Access permissions to publish or install tools in your CrewAI Enterprise organization


BIN

docs/images/enterprise/enterprise-testing.png

Normal file


Binary file not shown.

|

After Width: | Height: | Size: 288 KiB |

@@ -22,7 +22,7 @@ Watch this video tutorial for a step-by-step demonstration of the installation p
  <Note>
  **Python Version Requirements**

- CrewAI requires `Python >=3.10 and <3.13`. Here's how to check your version:
+ CrewAI requires `Python >=3.10 and <=3.13`. Here's how to check your version:
  ```bash
  python3 --version
  ```

@@ -108,6 +108,7 @@ crew_2 = Crew(agents=[coding_agent], tasks=[task_2])

  # Async function to kickoff multiple crews asynchronously and wait for all to finish
  async def async_multiple_crews():
+     # Create coroutines for concurrent execution
      result_1 = crew_1.kickoff_async(inputs={"ages": [25, 30, 35, 40, 45]})
      result_2 = crew_2.kickoff_async(inputs={"ages": [20, 22, 24, 28, 30]})


729

docs/learn/llm-selection-guide.mdx

Normal file


@@ -0,0 +1,729 @@

|

||||

---

|

||||

title: 'Strategic LLM Selection Guide'

|

||||

description: 'Strategic framework for choosing the right LLM for your CrewAI AI agents and writing effective task and agent definitions'

|

||||

icon: 'brain-circuit'

|

||||

---

|

||||

|

||||

## The CrewAI Approach to LLM Selection

|

||||

|

||||

Rather than prescriptive model recommendations, we advocate for a **thinking framework** that helps you make informed decisions based on your specific use case, constraints, and requirements. The LLM landscape evolves rapidly, with new models emerging regularly and existing ones being updated frequently. What matters most is developing a systematic approach to evaluation that remains relevant regardless of which specific models are available.

|

||||

|

||||

<Note>

|

||||

This guide focuses on strategic thinking rather than specific model recommendations, as the LLM landscape evolves rapidly.

|

||||

</Note>

|

||||

|

||||

## Quick Decision Framework

|

||||

|

||||

<Steps>

|

||||

<Step title="Analyze Your Tasks">

|

||||

Begin by deeply understanding what your tasks actually require. Consider the cognitive complexity involved, the depth of reasoning needed, the format of expected outputs, and the amount of context the model will need to process. This foundational analysis will guide every subsequent decision.

|

||||

</Step>

|

||||

<Step title="Map Model Capabilities">

|

||||

Once you understand your requirements, map them to model strengths. Different model families excel at different types of work; some are optimized for reasoning and analysis, others for creativity and content generation, and others for speed and efficiency.

|

||||

</Step>

|

||||

<Step title="Consider Constraints">

|

||||

Factor in your real-world operational constraints including budget limitations, latency requirements, data privacy needs, and infrastructure capabilities. The theoretically best model may not be the practically best choice for your situation.

|

||||

</Step>

|

||||

<Step title="Test and Iterate">

|

||||

Start with reliable, well-understood models and optimize based on actual performance in your specific use case. Real-world results often differ from theoretical benchmarks, so empirical testing is crucial.

|

||||

</Step>

|

||||

</Steps>

|

||||

|

||||

## Core Selection Framework

|

||||

|

||||

### a. Task-First Thinking

|

||||

|

||||

The most critical step in LLM selection is understanding what your task actually demands. Too often, teams select models based on general reputation or benchmark scores without carefully analyzing their specific requirements. This approach leads to either over-engineering simple tasks with expensive, complex models, or under-powering sophisticated work with models that lack the necessary capabilities.

|

||||

|

||||

<Tabs>

|

||||

<Tab title="Reasoning Complexity">

|

||||

- **Simple Tasks** represent the majority of everyday AI work and include basic instruction following, straightforward data processing, and simple formatting operations. These tasks typically have clear inputs and outputs with minimal ambiguity. The cognitive load is low, and the model primarily needs to follow explicit instructions rather than engage in complex reasoning.

|

||||

|

||||

- **Complex Tasks** require multi-step reasoning, strategic thinking, and the ability to handle ambiguous or incomplete information. These might involve analyzing multiple data sources, developing comprehensive strategies, or solving problems that require breaking down into smaller components. The model needs to maintain context across multiple reasoning steps and often must make inferences that aren't explicitly stated.

|

||||

|

||||

- **Creative Tasks** demand a different type of cognitive capability focused on generating novel, engaging, and contextually appropriate content. This includes storytelling, marketing copy creation, and creative problem-solving. The model needs to understand nuance, tone, and audience while producing content that feels authentic and engaging rather than formulaic.

|

||||

</Tab>

|

||||

|

||||

<Tab title="Output Requirements">

|

||||

- **Structured Data** tasks require precision and consistency in format adherence. When working with JSON, XML, or database formats, the model must reliably produce syntactically correct output that can be programmatically processed. These tasks often have strict validation requirements and little tolerance for format errors, making reliability more important than creativity.

|

||||

|

||||

- **Creative Content** outputs demand a balance of technical competence and creative flair. The model needs to understand audience, tone, and brand voice while producing content that engages readers and achieves specific communication goals. Quality here is often subjective and requires models that can adapt their writing style to different contexts and purposes.

|

||||

|

||||

- **Technical Content** sits between structured data and creative content, requiring both precision and clarity. Documentation, code generation, and technical analysis need to be accurate and comprehensive while remaining accessible to the intended audience. The model must understand complex technical concepts and communicate them effectively.

|

||||

</Tab>

|

||||

|

||||

<Tab title="Context Needs">

|

||||

- **Short Context** scenarios involve focused, immediate tasks where the model needs to process limited information quickly. These are often transactional interactions where speed and efficiency matter more than deep understanding. The model doesn't need to maintain extensive conversation history or process large documents.

|

||||

|

||||

- **Long Context** requirements emerge when working with substantial documents, extended conversations, or complex multi-part tasks. The model needs to maintain coherence across thousands of tokens while referencing earlier information accurately. This capability becomes crucial for document analysis, comprehensive research, and sophisticated dialogue systems.

|

||||

|

||||

- **Very Long Context** scenarios push the boundaries of what's currently possible, involving massive document processing, extensive research synthesis, or complex multi-session interactions. These use cases require models specifically designed for extended context handling and often involve trade-offs between context length and processing speed.

|

||||

</Tab>

|

||||

</Tabs>

|

||||

|

||||

### b. Model Capability Mapping

|

||||

|

||||

Understanding model capabilities requires looking beyond marketing claims and benchmark scores to understand the fundamental strengths and limitations of different model architectures and training approaches.

|

||||

|

||||

<AccordionGroup>

|

||||

<Accordion title="Reasoning Models" icon="brain">

|

||||

Reasoning models represent a specialized category designed specifically for complex, multi-step thinking tasks. These models excel when problems require careful analysis, strategic planning, or systematic problem decomposition. They typically employ techniques like chain-of-thought reasoning or tree-of-thought processing to work through complex problems step by step.

|

||||

|

||||

The strength of reasoning models lies in their ability to maintain logical consistency across extended reasoning chains and to break down complex problems into manageable components. They're particularly valuable for strategic planning, complex analysis, and situations where the quality of reasoning matters more than speed of response.

|

||||

|

||||

However, reasoning models often come with trade-offs in terms of speed and cost. They may also be less suitable for creative tasks or simple operations where their sophisticated reasoning capabilities aren't needed. Consider these models when your tasks involve genuine complexity that benefits from systematic, step-by-step analysis.

|

||||

</Accordion>

|

||||

|

||||

<Accordion title="General Purpose Models" icon="microchip">

|

||||

General purpose models offer the most balanced approach to LLM selection, providing solid performance across a wide range of tasks without extreme specialization in any particular area. These models are trained on diverse datasets and optimized for versatility rather than peak performance in specific domains.

|

||||

|

||||

The primary advantage of general purpose models is their reliability and predictability across different types of work. They handle most standard business tasks competently, from research and analysis to content creation and data processing. This makes them excellent choices for teams that need consistent performance across varied workflows.

|

||||

|

||||

While general purpose models may not achieve the peak performance of specialized alternatives in specific domains, they offer operational simplicity and reduced complexity in model management. They're often the best starting point for new projects, allowing teams to understand their specific needs before potentially optimizing with more specialized models.

|

||||

</Accordion>

|

||||

|

||||

<Accordion title="Fast & Efficient Models" icon="bolt">

|

||||

Fast and efficient models prioritize speed, cost-effectiveness, and resource efficiency over sophisticated reasoning capabilities. These models are optimized for high-throughput scenarios where quick responses and low operational costs are more important than nuanced understanding or complex reasoning.

|

||||

|

||||

These models excel in scenarios involving routine operations, simple data processing, function calling, and high-volume tasks where the cognitive requirements are relatively straightforward. They're particularly valuable for applications that need to process many requests quickly or operate within tight budget constraints.

|

||||

|

||||

The key consideration with efficient models is ensuring that their capabilities align with your task requirements. While they can handle many routine operations effectively, they may struggle with tasks requiring nuanced understanding, complex reasoning, or sophisticated content generation. They're best used for well-defined, routine operations where speed and cost matter more than sophistication.

|

||||

</Accordion>

|

||||

|

||||

<Accordion title="Creative Models" icon="pen">

|

||||

Creative models are specifically optimized for content generation, writing quality, and creative thinking tasks. These models typically excel at understanding nuance, tone, and style while producing engaging, contextually appropriate content that feels natural and authentic.

|

||||

|

||||

The strength of creative models lies in their ability to adapt writing style to different audiences, maintain consistent voice and tone, and generate content that engages readers effectively. They often perform better on tasks involving storytelling, marketing copy, brand communications, and other content where creativity and engagement are primary goals.

|

||||

|

||||

When selecting creative models, consider not just their ability to generate text, but their understanding of audience, context, and purpose. The best creative models can adapt their output to match specific brand voices, target different audience segments, and maintain consistency across extended content pieces.

|

||||

</Accordion>

|

||||

|

||||

<Accordion title="Open Source Models" icon="code">

|

||||

Open source models offer unique advantages in terms of cost control, customization potential, data privacy, and deployment flexibility. These models can be run locally or on private infrastructure, providing complete control over data handling and model behavior.

|

||||

|

||||

The primary benefits of open source models include elimination of per-token costs, ability to fine-tune for specific use cases, complete data privacy, and independence from external API providers. They're particularly valuable for organizations with strict data privacy requirements, budget constraints, or specific customization needs.

|

||||

|

||||

However, open source models require more technical expertise to deploy and maintain effectively. Teams need to consider infrastructure costs, model management complexity, and the ongoing effort required to keep models updated and optimized. The total cost of ownership may be higher than cloud-based alternatives when factoring in technical overhead.

|

||||

</Accordion>

|

||||

</AccordionGroup>

|

||||

|

||||

## Strategic Configuration Patterns

|

||||

|

||||

### a. Multi-Model Approach

|

||||

|

||||

<Tip>

|

||||

Use different models for different purposes within the same crew to optimize both performance and cost.

|

||||

</Tip>

|

||||

|

||||

The most sophisticated CrewAI implementations often employ multiple models strategically, assigning different models to different agents based on their specific roles and requirements. This approach allows teams to optimize for both performance and cost by using the most appropriate model for each type of work.

|

||||

|

||||

Planning agents benefit from reasoning models that can handle complex strategic thinking and multi-step analysis. These agents often serve as the "brain" of the operation, developing strategies and coordinating other agents' work. Content agents, on the other hand, perform best with creative models that excel at writing quality and audience engagement. Processing agents handling routine operations can use efficient models that prioritize speed and cost-effectiveness.

|

||||

|

||||

**Example: Research and Analysis Crew**

|

||||

|

||||

```python
from crewai import Agent, Crew, LLM, Process, Task

# High-capability reasoning model for strategic planning
manager_llm = LLM(model="gemini-2.5-flash-preview-05-20", temperature=0.1)

# Creative model for content generation
content_llm = LLM(model="claude-3-5-sonnet-20241022", temperature=0.7)

# Efficient model for data processing
processing_llm = LLM(model="gpt-4o-mini", temperature=0)

research_manager = Agent(
    role="Research Strategy Manager",
    goal="Develop comprehensive research strategies and coordinate team efforts",
    backstory="Expert research strategist with deep analytical capabilities",
    llm=manager_llm,  # High-capability model for complex reasoning
    verbose=True
)

content_writer = Agent(
    role="Research Content Writer",
    goal="Transform research findings into compelling, well-structured reports",
    backstory="Skilled writer who excels at making complex topics accessible",
    llm=content_llm,  # Creative model for engaging content
    verbose=True
)

data_processor = Agent(
    role="Data Analysis Specialist",
    goal="Extract and organize key data points from research sources",
    backstory="Detail-oriented analyst focused on accuracy and efficiency",
    llm=processing_llm,  # Fast, cost-effective model for routine tasks
    verbose=True
)

crew = Crew(
    agents=[research_manager, content_writer, data_processor],
    tasks=[...],  # Your specific tasks
    process=Process.hierarchical,  # manager_llm is used by the hierarchical process
    manager_llm=manager_llm,  # Manager uses the reasoning model
    verbose=True
)
```

|

||||

|

||||

The key to successful multi-model implementation is understanding how different agents interact and ensuring that model capabilities align with agent responsibilities. This requires careful planning but can result in significant improvements in both output quality and operational efficiency.
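To make the cost side of this trade-off concrete, the sketch below compares a crew that runs every agent on a premium model with one that moves the high-volume processing agent to an efficient model. The prices, tier names, and token volumes are hypothetical placeholders chosen for illustration, not real provider rates, and `estimate_cost` is an illustrative helper rather than part of CrewAI.

```python
# Hypothetical prices in USD per 1M tokens; substitute your provider's current rates.
PRICE_PER_MILLION = {
    "premium": 10.00,
    "efficient": 0.50,
}


def estimate_cost(tokens_by_agent, tier_by_agent):
    """Sum the cost of each agent's token usage at its assigned tier's price."""
    total = 0.0
    for agent, tokens in tokens_by_agent.items():
        price = PRICE_PER_MILLION[tier_by_agent[agent]]
        total += tokens / 1_000_000 * price
    return total


# Assumed monthly token usage per agent (illustrative only).
usage = {"manager": 2_000_000, "writer": 3_000_000, "processor": 20_000_000}

single_model = estimate_cost(usage, {name: "premium" for name in usage})
multi_model = estimate_cost(
    usage,
    {"manager": "premium", "writer": "premium", "processor": "efficient"},
)

print(f"single premium model: ${single_model:.2f}")
print(f"mixed models:         ${multi_model:.2f}")
```

With these assumed numbers, most of the spend comes from the processing agent, so that is where a cheaper model changes the total the most. Whether output quality holds up after the switch still has to be checked against your own tasks.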

|

||||

|

||||

### b. Component-Specific Selection

|

||||

|

||||

<Tabs>

|

||||

<Tab title="Manager LLM">

|

||||

The manager LLM plays a crucial role in hierarchical CrewAI processes, serving as the coordination point for multiple agents and tasks. This model needs to excel at delegation, task prioritization, and maintaining context across multiple concurrent operations.

|

||||

|

||||

Effective manager LLMs require strong reasoning capabilities to make good delegation decisions, consistent performance to ensure predictable coordination, and excellent context management to track the state of multiple agents simultaneously. The model needs to understand the capabilities and limitations of different agents while optimizing task allocation for efficiency and quality.

|

||||

|

||||

Cost considerations are particularly important for manager LLMs since they're involved in every operation. The model needs to provide sufficient capability for effective coordination while remaining cost-effective for frequent use. This often means finding models that offer good reasoning capabilities without the premium pricing of the most sophisticated options.

|

||||

</Tab>

|

||||

|

||||

<Tab title="Function Calling LLM">

|

||||

Function calling LLMs handle tool usage across all agents, making them critical for crews that rely heavily on external tools and APIs. These models need to excel at understanding tool capabilities, extracting parameters accurately, and handling tool responses effectively.

|

||||

|

||||

The most important characteristics for function calling LLMs are precision and reliability rather than creativity or sophisticated reasoning. The model needs to consistently extract the correct parameters from natural language requests and handle tool responses appropriately. Speed is also important since tool usage often involves multiple round trips that can impact overall performance.

|

||||

|

||||

Many teams find that specialized function calling models or general purpose models with strong tool support work better than creative or reasoning-focused models for this role. The key is ensuring that the model can reliably bridge the gap between natural language instructions and structured tool calls.

|

||||

</Tab>

|

||||

|

||||

<Tab title="Agent-Specific Overrides">

|

||||

Individual agents can override crew-level LLM settings when their specific needs differ significantly from the general crew requirements. This capability allows for fine-tuned optimization while maintaining operational simplicity for most agents.

|

||||

|

||||

Consider agent-specific overrides when an agent's role requires capabilities that differ substantially from other crew members. For example, a creative writing agent might benefit from a model optimized for content generation, while a data analysis agent might perform better with a reasoning-focused model.

|

||||

|

||||

The challenge with agent-specific overrides is balancing optimization with operational complexity. Each additional model adds complexity to deployment, monitoring, and cost management. Teams should focus overrides on agents where the performance improvement justifies the additional complexity.

|

||||

</Tab>

|

||||

</Tabs>

|

||||

|

||||

## Task Definition Framework

|

||||

|

||||

### a. Focus on Clarity Over Complexity

|

||||

|

||||

Effective task definition is often more important than model selection in determining the quality of CrewAI outputs. Well-defined tasks provide clear direction and context that enable even modest models to perform well, while poorly defined tasks can cause even sophisticated models to produce unsatisfactory results.

|

||||

|

||||

<AccordionGroup>

|

||||

<Accordion title="Effective Task Descriptions" icon="list-check">

|

||||

The best task descriptions strike a balance between providing sufficient detail and maintaining clarity. They should define the specific objective clearly enough that there's no ambiguity about what success looks like, while explaining the approach or methodology in enough detail that the agent understands how to proceed.

|

||||

|

||||

Effective task descriptions include relevant context and constraints that help the agent understand the broader purpose and any limitations it needs to work within. They break complex work into focused steps that can be executed one at a time, rather than presenting overwhelming, multi-faceted objectives with no obvious starting point.

|

||||

|

||||

Common mistakes include being too vague about objectives, failing to provide necessary context, setting unclear success criteria, or combining multiple unrelated tasks into a single description. The goal is to provide enough information for the agent to succeed while maintaining focus on a single, clear objective.

|

||||

</Accordion>

|

||||

|

||||

<Accordion title="Expected Output Guidelines" icon="bullseye">

|

||||

Expected output guidelines serve as a contract between the task definition and the agent, clearly specifying what the deliverable should look like and how it will be evaluated. These guidelines should describe both the format and structure needed, as well as the key elements that must be included for the output to be considered complete.

|

||||

|

||||

The best output guidelines provide concrete examples of quality indicators and define completion criteria clearly enough that both the agent and human reviewers can assess whether the task has been completed successfully. This reduces ambiguity and helps ensure consistent results across multiple task executions.

|

||||

|

||||

Avoid generic output descriptions that could apply to any task, omitted format specifications that leave agents guessing about structure, and unclear quality standards that make evaluation difficult. Where possible, provide examples or templates that help agents understand expectations.

|

||||

</Accordion>

|

||||

</AccordionGroup>

|

||||

|

||||

### b. Task Sequencing Strategy

|

||||

|

||||

<Tabs>

|

||||

<Tab title="Sequential Dependencies">

|

||||

Sequential task dependencies are essential when tasks build upon previous outputs, information flows from one task to another, or quality depends on the completion of prerequisite work. This approach ensures that each task has access to the information and context it needs to succeed.

|

||||

|

||||

Implementing sequential dependencies effectively requires using the context parameter to chain related tasks, building complexity gradually through task progression, and ensuring that each task produces outputs that serve as meaningful inputs for subsequent tasks. The goal is to maintain logical flow between dependent tasks while avoiding unnecessary bottlenecks.

|

||||

|

||||

Sequential dependencies work best when there's a clear logical progression from one task to another and when the output of one task genuinely improves the quality or feasibility of subsequent tasks. However, they can create bottlenecks if not managed carefully, so it's important to identify which dependencies are truly necessary versus those that are merely convenient.

|

||||

</Tab>

|

||||

|

||||

<Tab title="Parallel Execution">

|

||||

Parallel execution becomes valuable when tasks are independent of each other, time efficiency is important, or different expertise areas are involved that don't require coordination. This approach can significantly reduce overall execution time while allowing specialized agents to work on their areas of strength simultaneously.

|

||||

|

||||

Successful parallel execution requires identifying tasks that can truly run independently, grouping related but separate work streams effectively, and planning for result integration when parallel tasks need to be combined into a final deliverable. The key is ensuring that parallel tasks don't create conflicts or redundancies that reduce overall quality.

|

||||

|

||||

Consider parallel execution when you have multiple independent research streams, different types of analysis that don't depend on each other, or content creation tasks that can be developed simultaneously. However, be mindful of resource allocation and ensure that parallel execution doesn't overwhelm your available model capacity or budget.

|

||||

</Tab>

|

||||

</Tabs>
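One way to decide which tasks must run in sequence and which can run side by side is to write down what each task depends on and group the tasks into batches. The sketch below does this with Python's standard `graphlib` module. The task names and the `execution_batches` helper are hypothetical, and the code only plans the order; it does not call CrewAI.

```python
from graphlib import TopologicalSorter

# Hypothetical task names; each maps to the set of tasks whose output it needs.
dependencies = {
    "market_research": set(),
    "competitor_analysis": set(),
    "synthesis": {"market_research", "competitor_analysis"},
    "final_report": {"synthesis"},
}


def execution_batches(deps):
    """Group tasks into batches whose members have no unmet dependencies."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        batches.append(ready)
        sorter.done(*ready)
    return batches


batches = execution_batches(dependencies)
for number, batch in enumerate(batches, start=1):
    print(number, batch)
```

Tasks in the same batch are candidates for parallel execution, while each later batch corresponds to a sequential dependency that would be expressed through the task's context.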

|

||||

|

||||

## Optimizing Agent Configuration for LLM Performance

|

||||

|

||||

### a. Role-Driven LLM Selection

|

||||

|

||||

<Warning>

|

||||

Generic agent roles make it impossible to select the right LLM. Specific roles enable targeted model optimization.

|

||||

</Warning>

|

||||

|

||||

The specificity of your agent roles directly determines which LLM capabilities matter most for optimal performance. This creates a strategic opportunity to match precise model strengths with agent responsibilities.

|

||||

|

||||

**Generic vs. Specific Role Impact on LLM Choice:**

|

||||

|

||||

When defining roles, think about the specific domain knowledge, working style, and decision-making frameworks that would be most valuable for the tasks the agent will handle. The more specific and contextual the role definition, the better the model can embody that role effectively.

|

||||

```python
# ✅ Specific role - clear LLM requirements
specific_agent = Agent(
    role="SaaS Revenue Operations Analyst",  # Clear domain expertise needed
    goal="Analyze recurring revenue metrics and identify growth opportunities",
    backstory="Specialist in SaaS business models with deep understanding of ARR, churn, and expansion revenue",
    llm=LLM(model="gpt-4o")  # Reasoning model justified for complex analysis
)
```

|

||||

|

||||

**Role-to-Model Mapping Strategy:**

|

||||

|

||||

- **"Research Analyst"** → Reasoning model (GPT-4o, Claude Sonnet) for complex analysis

|

||||

- **"Content Editor"** → Creative model (Claude, GPT-4o) for writing quality

|

||||

- **"Data Processor"** → Efficient model (GPT-4o-mini, Gemini Flash) for structured tasks

|

||||

- **"API Coordinator"** → Function-calling optimized model (GPT-4o, Claude) for tool usage
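The mapping above can be kept in one place so that model choices stay consistent across a codebase. The following is a minimal sketch: the table names and `model_for_role` are hypothetical helpers, and the model identifiers are simply the ones used in this guide's examples, so adjust them to the models you actually have access to.

```python
# Model identifiers taken from the examples in this guide; adjust as needed.
MODEL_BY_WORKLOAD = {
    "reasoning": "gpt-4o",
    "creative": "claude-3-5-sonnet-20241022",
    "efficient": "gpt-4o-mini",
    "function_calling": "gpt-4o",
}

# Hypothetical role-to-workload table mirroring the list above.
WORKLOAD_BY_ROLE = {
    "Research Analyst": "reasoning",
    "Content Editor": "creative",
    "Data Processor": "efficient",
    "API Coordinator": "function_calling",
}


def model_for_role(role, default_workload="efficient"):
    """Return the model name for a role, falling back to the efficient tier."""
    workload = WORKLOAD_BY_ROLE.get(role, default_workload)
    return MODEL_BY_WORKLOAD[workload]


print(model_for_role("Research Analyst"))
print(model_for_role("Unlisted Role"))
```

Falling back to the efficient tier for unlisted roles is a design choice in this sketch: it keeps the default cheap and makes every upgrade an explicit decision.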

|

||||

|

||||

### b. Backstory as Model Context Amplifier

|

||||

|

||||

<Info>

|

||||

Strategic backstories multiply your chosen LLM's effectiveness by providing domain-specific context that generic prompting cannot achieve.

|

||||

</Info>

|

||||

|

||||

A well-crafted backstory transforms your LLM choice from generic capability to specialized expertise. This is especially crucial for cost optimization: a well-contextualized efficient model can outperform a premium model that lacks proper context.

|

||||

|

||||

**Context-Driven Performance Example:**

|

||||

|

||||

```python
# Context amplifies model effectiveness
domain_expert = Agent(
    role="B2B SaaS Marketing Strategist",
    goal="Develop comprehensive go-to-market strategies for enterprise software",
    backstory="""
    You have 10+ years of experience scaling B2B SaaS companies from Series A to IPO.
    You understand the nuances of enterprise sales cycles, the importance of product-market
    fit in different verticals, and how to balance growth metrics with unit economics.
    You've worked with companies like Salesforce, HubSpot, and emerging unicorns, giving
    you perspective on both established and disruptive go-to-market strategies.
    """,
    llm=LLM(model="claude-3-5-sonnet-20241022", temperature=0.3)  # Balanced creativity with domain knowledge
)

# This context enables Claude to perform like a domain expert
# Without it, even a strong model would produce generic marketing advice
```

|

||||

|

||||

**Backstory Elements That Enhance LLM Performance:**

|

||||

- **Domain Experience**: "10+ years in enterprise SaaS sales"

|

||||

- **Specific Expertise**: "Specializes in technical due diligence for Series B+ rounds"

|

||||

- **Working Style**: "Prefers data-driven decisions with clear documentation"

|

||||

- **Quality Standards**: "Insists on citing sources and showing analytical work"

|

||||

|

||||

### c. Holistic Agent-LLM Optimization

|

||||

|

||||

The most effective agent configurations create synergy between role specificity, backstory depth, and LLM selection. Each element reinforces the others to maximize model performance.

|

||||

|

||||

**Optimization Framework:**

|

||||

|

||||

```python
# Example: Technical Documentation Agent
tech_writer = Agent(
    role="API Documentation Specialist",  # Specific role for clear LLM requirements
    goal="Create comprehensive, developer-friendly API documentation",
    backstory="""
    You're a technical writer with 8+ years documenting REST APIs, GraphQL endpoints,
    and SDK integration guides. You've worked with developer tools companies and
    understand what developers need: clear examples, comprehensive error handling,
    and practical use cases. You prioritize accuracy and usability over marketing fluff.
    """,
    llm=LLM(
        model="claude-3-5-sonnet-20241022",  # Excellent for technical writing
        temperature=0.1  # Low temperature for accuracy
    ),
    tools=[code_analyzer_tool, api_scanner_tool],  # Placeholder tools defined elsewhere
    verbose=True
)
```

|

||||

|

||||

**Alignment Checklist:**

|

||||

- ✅ **Role Specificity**: Clear domain and responsibilities

|

||||

- ✅ **LLM Match**: Model strengths align with role requirements

|

||||

- ✅ **Backstory Depth**: Provides domain context the LLM can leverage

|

||||

- ✅ **Tool Integration**: Tools support the agent's specialized function

|

||||

- ✅ **Parameter Tuning**: Temperature and settings optimize for role needs

|

||||

|

||||

The key is creating agents where every configuration choice reinforces your LLM selection strategy, maximizing performance while optimizing costs.

|

||||

|

||||

## Practical Implementation Checklist

|

||||

|

||||

Rather than repeating the strategic framework, here's a tactical checklist for implementing your LLM selection decisions in CrewAI:

|

||||

|

||||

<Steps>

|

||||

<Step title="Audit Your Current Setup" icon="clipboard-check">

|

||||

**What to Review:**

|

||||

- Are all agents using the same LLM by default?

|

||||

- Which agents handle the most complex reasoning tasks?

|

||||

- Which agents primarily do data processing or formatting?

|

||||

- Are any agents heavily tool-dependent?

|

||||

|

||||

**Action**: Document current agent roles and identify optimization opportunities.

|

||||

</Step>

|

||||

|

||||

<Step title="Implement Crew-Level Strategy" icon="users-gear">

|

||||

**Set Your Baseline:**

|

||||

```python
# Start with a reliable default for the crew
default_crew_llm = LLM(model="gpt-4o-mini")  # Cost-effective baseline

crew = Crew(
    agents=[...],  # Construct each agent with llm=default_crew_llm
    tasks=[...],
    memory=True
)
```

|

||||

|

||||

**Action**: Establish your crew's default LLM before optimizing individual agents.

|

||||

</Step>

|

||||

|

||||

<Step title="Optimize High-Impact Agents" icon="star">

|

||||

**Identify and Upgrade Key Agents:**

|

||||

```python
# Manager or coordination agents
manager_agent = Agent(
    role="Project Manager",
    llm=LLM(model="gemini-2.5-flash-preview-05-20"),  # Premium for coordination
    # ... rest of config
)

# Creative or customer-facing agents
content_agent = Agent(
    role="Content Creator",
    llm=LLM(model="claude-3-5-sonnet-20241022"),  # Best for writing
    # ... rest of config
)
```

|

||||

|

||||

**Action**: Upgrade the 20% of your agents that handle 80% of the complexity.

|

||||

</Step>

|

||||

|

||||

<Step title="Validate with Enterprise Testing" icon="test-tube">

|

||||

**Once you deploy your agents to production:**

|

||||

- Use [CrewAI Enterprise platform](https://app.crewai.com) to A/B test your model selections

|

||||

- Run multiple iterations with real inputs to measure consistency and performance

|

||||

- Compare cost vs. performance across your optimized setup

|

||||

- Share results with your team for collaborative decision-making

|

||||

|

||||

**Action**: Replace guesswork with data-driven validation using the testing platform.

|

||||

</Step>

|

||||

</Steps>

|

||||

|

||||

### When to Use Different Model Types

|

||||

|

||||

<Tabs>

|

||||

<Tab title="Reasoning Models">

|

||||

Reasoning models become essential when tasks require genuine multi-step logical thinking, strategic planning, or high-level decision making that benefits from systematic analysis. These models excel when problems need to be broken down into components and analyzed systematically rather than handled through pattern matching or simple instruction following.

|

||||

|

||||

Consider reasoning models for business strategy development, complex data analysis that requires drawing insights from multiple sources, multi-step problem solving where each step depends on previous analysis, and strategic planning tasks that require considering multiple variables and their interactions.

|

||||

|

||||

However, reasoning models often come with higher costs and slower response times, so they're best reserved for tasks where their sophisticated capabilities provide genuine value rather than being used for simple operations that don't require complex reasoning.

|

||||

</Tab>

|

||||

|

||||

<Tab title="Creative Models">

|

||||

Creative models become valuable when content generation is the primary output and the quality, style, and engagement level of that content directly impact success. These models excel when writing quality and style matter significantly, creative ideation or brainstorming is needed, or brand voice and tone are important considerations.

|

||||

|

||||

Use creative models for blog post writing and article creation, marketing copy that needs to engage and persuade, creative storytelling and narrative development, and brand communications where voice and tone are crucial. These models often understand nuance and context better than general purpose alternatives.

|

||||

|

||||

Creative models may be less suitable for technical or analytical tasks where precision and factual accuracy are more important than engagement and style. They're best used when the creative and communicative aspects of the output are primary success factors.

|

||||

</Tab>

|

||||

|

||||

<Tab title="Efficient Models">

|

||||

Efficient models are ideal for high-frequency, routine operations where speed and cost optimization are priorities. These models work best when tasks have clear, well-defined parameters and don't require sophisticated reasoning or creative capabilities.

|

||||

|

||||

Consider efficient models for data processing and transformation tasks, simple formatting and organization operations, function calling and tool usage where precision matters more than sophistication, and high-volume operations where cost per operation is a significant factor.

|

||||

|

||||

The key with efficient models is ensuring that their capabilities align with task requirements. They can handle many routine operations effectively but may struggle with tasks requiring nuanced understanding, complex reasoning, or sophisticated content generation.

|

||||

</Tab>

|

||||

|

||||

<Tab title="Open Source Models">

|

||||

Open source models become attractive when budget constraints are significant, data privacy requirements exist, customization needs are important, or local deployment is required for operational or compliance reasons.

|

||||

|

||||

Consider open source models for internal company tools where data privacy is paramount, privacy-sensitive applications that can't use external APIs, cost-optimized deployments where per-token pricing is prohibitive, and situations requiring custom model modifications or fine-tuning.

|

||||

|

||||

However, open source models require more technical expertise to deploy and maintain effectively. Consider the total cost of ownership including infrastructure, technical overhead, and ongoing maintenance when evaluating open source options.

|

||||

</Tab>

|

||||

</Tabs>

|

||||

|

||||

## Common CrewAI Model Selection Pitfalls

|

||||

|

||||

<AccordionGroup>

|

||||

<Accordion title="The 'One Model Fits All' Trap" icon="triangle-exclamation">

|

||||

**The Problem**: Using the same LLM for all agents in a crew, regardless of their specific roles and responsibilities. This is often the default approach but rarely optimal.

|

||||

|

||||

**Real Example**: Using GPT-4o for both a strategic planning manager and a data extraction agent. The manager needs reasoning capabilities worth the premium cost, but the data extractor could perform just as well with GPT-4o-mini at a fraction of the price.

|

||||

|

||||

**CrewAI Solution**: Leverage agent-specific LLM configuration to match model capabilities with agent roles:

|

||||

```python
# Strategic agent gets premium model
manager = Agent(
    role="Strategy Manager",
    llm=LLM(model="gpt-4o"),
    # ... rest of config
)

# Processing agent gets efficient model
processor = Agent(
    role="Data Processor",
    llm=LLM(model="gpt-4o-mini"),
    # ... rest of config
)
```

|

||||

</Accordion>

|

||||

|

||||

<Accordion title="Ignoring Crew-Level vs Agent-Level LLM Hierarchy" icon="shuffle">

|

||||

**The Problem**: Not understanding how CrewAI's LLM hierarchy works: crew LLM, manager LLM, and agent LLM settings can conflict or be poorly coordinated.

|

||||

|

||||

**Real Example**: Setting a crew to use Claude, but having agents configured with GPT models, creating inconsistent behavior and unnecessary model switching overhead.

|

||||

|

||||

**CrewAI Solution**: Plan your LLM hierarchy strategically:

|

||||

```python
# Define agents first; set llm on an agent only to override for specific needs
agent1 = Agent(
    llm=LLM(model="claude-3-5-sonnet-20241022"),  # Override for specific needs
    # ... rest of config
)

crew = Crew(
    agents=[agent1, agent2],
    tasks=[task1, task2],
    manager_llm=LLM(model="gpt-4o"),  # For crew coordination
    process=Process.hierarchical  # When using manager_llm
)
```

|

||||

</Accordion>

|

||||

|

||||

<Accordion title="Function Calling Model Mismatch" icon="screwdriver-wrench">

|

||||

**The Problem**: Choosing models based on general capabilities while ignoring function calling performance for tool-heavy CrewAI workflows.

|

||||

|

||||

**Real Example**: Selecting a creative-focused model for an agent that primarily needs to call APIs, search tools, or process structured data. The agent struggles with tool parameter extraction and reliable function calls.

|

||||

|

||||

**CrewAI Solution**: Prioritize function calling capabilities for tool-heavy agents:

|

||||

```python
# For agents that use many tools
tool_agent = Agent(
    role="API Integration Specialist",
    tools=[search_tool, api_tool, data_tool],
    llm=LLM(model="gpt-4o"),  # Excellent function calling
    # OR
    # llm=LLM(model="claude-3-5-sonnet-20241022"),  # Also strong with tools
)
```

|

||||

</Accordion>

|

||||

|

||||

<Accordion title="Premature Optimization Without Testing" icon="gear">

|

||||

**The Problem**: Making complex model selection decisions based on theoretical performance without validating with actual CrewAI workflows and tasks.

|

||||

|

||||

**Real Example**: Implementing elaborate model switching logic based on task types without testing if the performance gains justify the operational complexity.

|

||||

|

||||

**CrewAI Solution**: Start simple, then optimize based on real performance data:

|

||||

```python
# Start with this: one cost-effective model shared by every agent
baseline_llm = LLM(model="gpt-4o-mini")
crew = Crew(agents=[...], tasks=[...])  # Construct each agent with llm=baseline_llm

# Test performance, then optimize specific agents as needed
# Use Enterprise platform testing to validate improvements
```

|

||||

</Accordion>

|

||||

|

||||

<Accordion title="Overlooking Context and Memory Limitations" icon="brain">

|

||||

**The Problem**: Not considering how model context windows interact with CrewAI's memory and context sharing between agents.

|

||||

|

||||

**Real Example**: Using a short-context model for agents that need to maintain conversation history across multiple task iterations, or in crews with extensive agent-to-agent communication.

|

||||

|

||||

**CrewAI Solution**: Match context capabilities to crew communication patterns.

|

||||

</Accordion>

|

||||

</AccordionGroup>
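For the context-window pitfall in particular, a rough budget check can flag problems before a crew runs. The sketch below is a back-of-the-envelope estimate under stated assumptions: the model names and window sizes are hypothetical, and the four-characters-per-token rule is only a coarse heuristic for English text, not a real tokenizer.

```python
# Assumed context window sizes in tokens; check your provider's documentation.
CONTEXT_WINDOW = {"short-context-model": 8_000, "long-context-model": 128_000}


def estimate_tokens(text):
    """Rough heuristic: about four characters per token for English text."""
    return len(text) // 4 + 1


def fits_in_context(model, pieces, reserve_for_output=1_000):
    """Check whether the combined pieces plus an output reserve fit the window."""
    used = sum(estimate_tokens(piece) for piece in pieces)
    return used + reserve_for_output <= CONTEXT_WINDOW[model]


# Stand-ins for prior task outputs passed along as context.
history = ["x" * 20_000, "y" * 12_000]

short_ok = fits_in_context("short-context-model", history)
long_ok = fits_in_context("long-context-model", history)
print(short_ok, long_ok)
```

If a check like this fails for an agent that accumulates long histories, the options are a longer-context model for that agent or trimming and summarizing what gets passed between tasks.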

|

||||

|

||||

## Testing and Iteration Strategy

|

||||

|

||||

<Steps>

|

||||

<Step title="Start Simple" icon="play">

|

||||

Begin with reliable, general-purpose models that are well-understood and widely supported. This provides a stable foundation for understanding your specific requirements and performance expectations before optimizing for specialized needs.

|

||||

</Step>

|

||||

<Step title="Measure What Matters" icon="chart-line">

|

||||

Develop metrics that align with your specific use case and business requirements rather than relying solely on general benchmarks. Focus on measuring outcomes that directly impact your success rather than theoretical performance indicators.

|

||||

</Step>

|

||||

<Step title="Iterate Based on Results" icon="arrows-rotate">

|

||||

Make model changes based on observed performance in your specific context rather than theoretical considerations or general recommendations. Real-world performance often differs significantly from benchmark results or general reputation.

|

||||

</Step>

|

||||

<Step title="Consider Total Cost" icon="calculator">

|

||||

Evaluate the complete cost of ownership including model costs, development time, maintenance overhead, and operational complexity. The cheapest model per token may not be the most cost-effective choice when considering all factors.

|

||||

</Step>

|

||||

</Steps>

|

||||

|

||||

<Tip>

|

||||

Focus on understanding your requirements first, then select models that best match those needs. The best LLM choice is the one that consistently delivers the results you need within your operational constraints.

|

||||

</Tip>

|

||||

|

||||

### Enterprise-Grade Model Validation

|

||||

|

||||

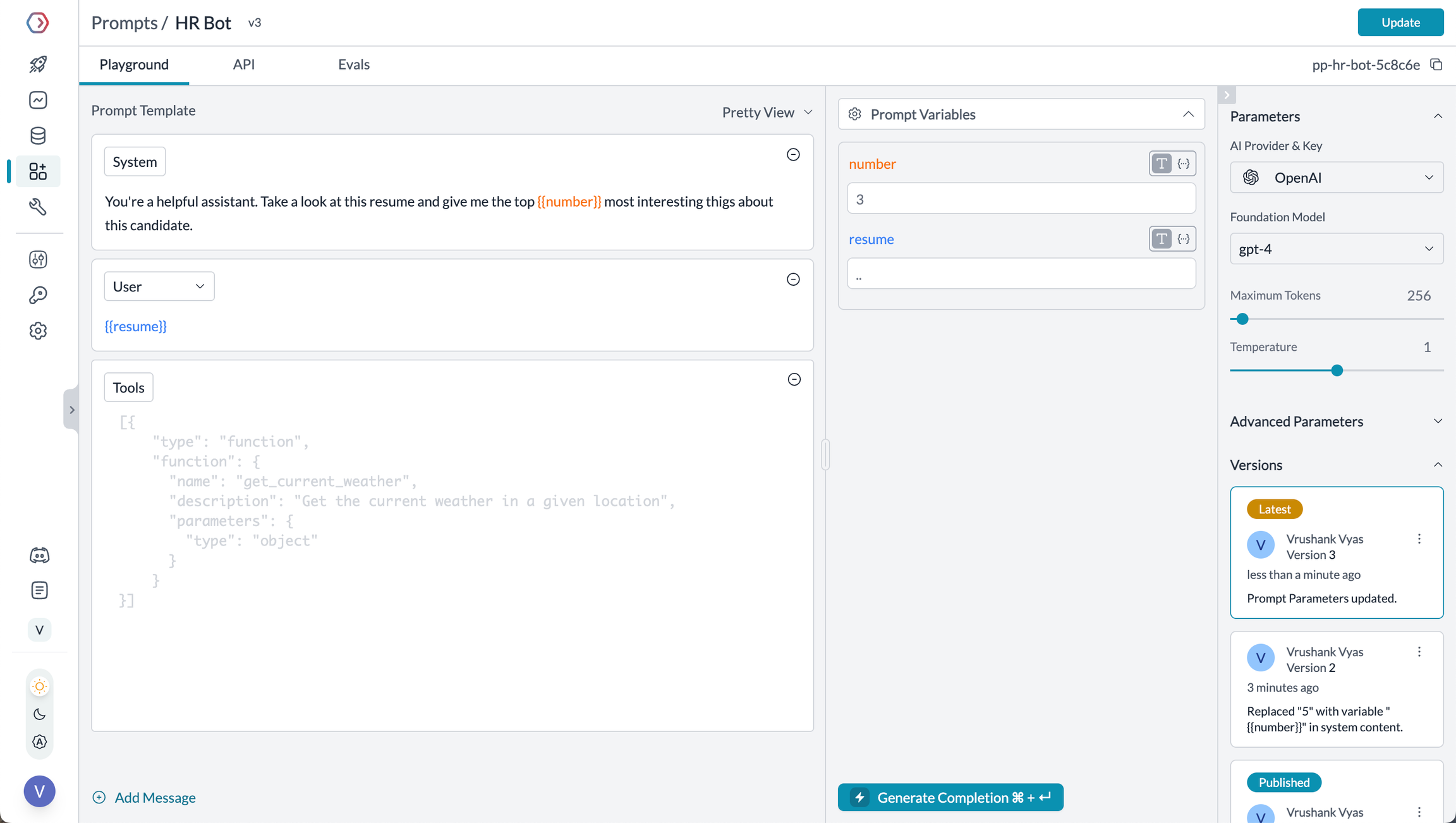

For teams serious about optimizing their LLM selection, the **CrewAI Enterprise platform** provides sophisticated testing capabilities that go far beyond basic CLI testing. The platform enables comprehensive model evaluation that helps you make data-driven decisions about your LLM strategy.

|

||||

|

||||

<Frame>

|

||||

|

||||

</Frame>

|

||||

|

||||

**Advanced Testing Features:**

|

||||

|

||||

- **Multi-Model Comparison**: Test multiple LLMs simultaneously across the same tasks and inputs. Compare performance between models such as GPT-4o, Claude, and Llama, including models served through providers like Groq and Cerebras, in parallel to identify the best fit for your specific use case.

|

||||

|

||||

- **Statistical Rigor**: Configure multiple iterations with consistent inputs to measure reliability and performance variance. This helps identify models that not only perform well but do so consistently across runs.

|

||||

|

||||

- **Real-World Validation**: Use your actual crew inputs and scenarios rather than synthetic benchmarks. The platform allows you to test with your specific industry context, company information, and real use cases for more accurate evaluation.

|

||||

|

||||

- **Comprehensive Analytics**: Access detailed performance metrics, execution times, and cost analysis across all tested models. This enables data-driven decision making rather than relying on general model reputation or theoretical capabilities.
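The idea behind measuring consistency across iterations can be illustrated without any platform. In the sketch below, the model names and scores are invented for illustration, and `summarize` is a hypothetical helper; it shows how two models with the same average score can differ sharply in run-to-run variance.

```python
from statistics import mean, stdev

# Hypothetical quality scores (0-10) from five runs per model on identical inputs.
scores = {
    "model-a": [8.0, 8.5, 7.5, 8.0, 8.0],
    "model-b": [9.5, 6.0, 9.0, 5.5, 10.0],
}


def summarize(runs):
    """Return the average score and the sample standard deviation of the runs."""
    return {"mean": mean(runs), "stdev": stdev(runs)}


summary = {name: summarize(runs) for name, runs in scores.items()}
most_consistent = min(summary, key=lambda name: summary[name]["stdev"])

for name, stats in summary.items():
    print(f"{name}: mean={stats['mean']:.2f} stdev={stats['stdev']:.2f}")
print("most consistent:", most_consistent)
```

Both hypothetical models average the same score here, so a single run or a mean alone would not separate them; the spread across runs is what shows which one is more predictable.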

|

||||

|

||||