mirror of

https://github.com/crewAIInc/crewAI.git

synced 2025-12-18 13:28:31 +00:00

Compare commits

32 Commits

bugfix/utc

...

devin/1738

| Author | SHA1 | Date | |
|---|---|---|---|
| | 20fc2f9878 | | |
| | c149b75874 | | |
| | 86844ff3df | | |
| | b442fe20a2 | | |
| | 9b1b1d33ba | | |
| | 3c350e8933 | | |
| | a3a5507f9a | | |
| | a175167aaf | | |
| | 1dc62b0d0a | | |
| | 75b376ebac | | |
| | 29106068b7 | | |
| | 3bf531189f | | |
| | 47919a60a0 | | |
| | 6b9ed90510 | | |
| | f6a65486f1 | | |
| | bf6db93bdf | | |
| | 25e68bc459 | | |
| | 6f6010db1c | | |
| | a95227deef | | |
| | 636dac6efb | | |
| | a4e2b17bae | | |
| | 823f22a601 | | |
| | 649414805d | | |
| | 8017ab2dfd | | |
| | 6445cda35a | | |
| | 6116c73721 | | |
| | a038b751ef | | |
| | 5006161d31 | | |
| | 85a13751ba | | |
| | 1c7c4cb828 | | |
| | 509fb375ca | | |
| | d01d44b29c | | |

3

.gitignore

vendored


@@ -21,5 +21,4 @@ crew_tasks_output.json

|

||||

.mypy_cache

|

||||

.ruff_cache

|

||||

.venv

|

||||

agentops.log

|

||||

test_flow.html

|

||||

agentops.log

|

||||

@@ -91,7 +91,7 @@ result = crew.kickoff(inputs={"question": "What city does John live in and how o

|

||||

```

|

||||

|

||||

|

||||

Here's another example with the `CrewDoclingSource`. The CrewDoclingSource is actually quite versatile and can handle multiple file formats including MD, PDF, DOCX, HTML, and more.

|

||||

Here's another example with the `CrewDoclingSource`. The CrewDoclingSource is actually quite versatile and can handle multiple file formats including TXT, PDF, DOCX, HTML, and more.

|

||||

|

||||

<Note>

|

||||

You need to install `docling` for the following example to work: `uv add docling`

|

||||

@@ -152,10 +152,10 @@ Here are examples of how to use different types of knowledge sources:

|

||||

|

||||

### Text File Knowledge Source

|

||||

```python

|

||||

from crewai.knowledge.source.text_file_knowledge_source import TextFileKnowledgeSource

|

||||

from crewai.knowledge.source.crew_docling_source import CrewDoclingSource

|

||||

|

||||

# Create a text file knowledge source

|

||||

text_source = TextFileKnowledgeSource(

|

||||

text_source = CrewDoclingSource(

|

||||

file_paths=["document.txt", "another.txt"]

|

||||

)

|

||||

|

||||

|

||||

File diff suppressed because it is too large


@@ -282,19 +282,6 @@ my_crew = Crew(

|

||||

|

||||

### Using Google AI embeddings

|

||||

|

||||

#### Prerequisites

|

||||

Before using Google AI embeddings, ensure you have:

|

||||

- Access to the Gemini API

|

||||

- The necessary API keys and permissions

|

||||

|

||||

You will need to update your *pyproject.toml* dependencies:

|

||||

```toml

|

||||

dependencies = [

|

||||

"google-generativeai>=0.8.4", #main version in January/2025 - crewai v.0.100.0 and crewai-tools 0.33.0

|

||||

"crewai[tools]>=0.100.0,<1.0.0"

|

||||

]

|

||||

```

|

||||

|

||||

```python Code

|

||||

from crewai import Crew, Agent, Task, Process

|

||||

|

||||

@@ -447,38 +434,6 @@ my_crew = Crew(

|

||||

)

|

||||

```

|

||||

|

||||

### Using Amazon Bedrock embeddings

|

||||

|

||||

```python Code

|

||||

# Note: Ensure you have installed `boto3` for Bedrock embeddings to work.

|

||||

|

||||

import os

|

||||

import boto3

|

||||

from crewai import Crew, Agent, Task, Process

|

||||

|

||||

boto3_session = boto3.Session(

|

||||

region_name=os.environ.get("AWS_REGION_NAME"),

|

||||

aws_access_key_id=os.environ.get("AWS_ACCESS_KEY_ID"),

|

||||

aws_secret_access_key=os.environ.get("AWS_SECRET_ACCESS_KEY")

|

||||

)

|

||||

|

||||

my_crew = Crew(

|

||||

agents=[...],

|

||||

tasks=[...],

|

||||

process=Process.sequential,

|

||||

memory=True,

|

||||

embedder={

|

||||

"provider": "bedrock",

|

||||

"config":{

|

||||

"session": boto3_session,

|

||||

"model": "amazon.titan-embed-text-v2:0",

|

||||

"vector_dimension": 1024

|

||||

}

|

||||

},

|

||||

verbose=True

|

||||

)

|

||||

```

|

||||

|

||||

### Adding Custom Embedding Function

|

||||

|

||||

```python Code

|

||||

@@ -506,7 +461,7 @@ my_crew = Crew(

|

||||

)

|

||||

```

|

||||

|

||||

### Resetting Memory via cli

|

||||

### Resetting Memory

|

||||

|

||||

```shell

|

||||

crewai reset-memories [OPTIONS]

|

||||

@@ -520,46 +475,8 @@ crewai reset-memories [OPTIONS]

|

||||

| `-s`, `--short` | Reset SHORT TERM memory. | Flag (boolean) | False |

|

||||

| `-e`, `--entities` | Reset ENTITIES memory. | Flag (boolean) | False |

|

||||

| `-k`, `--kickoff-outputs` | Reset LATEST KICKOFF TASK OUTPUTS. | Flag (boolean) | False |

|

||||

| `-kn`, `--knowledge` | Reset KNOWLEDGE storage. | Flag (boolean) | False |

|

||||

| `-a`, `--all` | Reset ALL memories. | Flag (boolean) | False |

|

||||

|

||||

Note: To use the cli command you need to have your crew in a file called crew.py in the same directory.

|

||||

|

||||

|

||||

|

||||

|

||||

### Resetting Memory via crew object

|

||||

|

||||

```python

|

||||

|

||||

my_crew = Crew(

|

||||

agents=[...],

|

||||

tasks=[...],

|

||||

process=Process.sequential,

|

||||

memory=True,

|

||||

verbose=True,

|

||||

embedder={

|

||||

"provider": "custom",

|

||||

"config": {

|

||||

"embedder": CustomEmbedder()

|

||||

}

|

||||

}

|

||||

)

|

||||

|

||||

my_crew.reset_memories(command_type = 'all') # Resets all the memory

|

||||

```

|

||||

|

||||

#### Resetting Memory Options

|

||||

|

||||

| Command Type | Description |

|

||||

| :----------------- | :------------------------------- |

|

||||

| `long` | Reset LONG TERM memory. |

|

||||

| `short` | Reset SHORT TERM memory. |

|

||||

| `entities` | Reset ENTITIES memory. |

|

||||

| `kickoff_outputs` | Reset LATEST KICKOFF TASK OUTPUTS. |

|

||||

| `knowledge` | Reset KNOWLEDGE memory. |

|

||||

| `all` | Reset ALL memories. |

|

||||

|

||||

|

||||

## Benefits of Using CrewAI's Memory System

|

||||

|

||||

|

||||

@@ -268,7 +268,7 @@ analysis_task = Task(

|

||||

|

||||

Task guardrails provide a way to validate and transform task outputs before they

|

||||

are passed to the next task. This feature helps ensure data quality and provides

|

||||

feedback to agents when their output doesn't meet specific criteria.

|

||||

efeedback to agents when their output doesn't meet specific criteria.
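As an illustration of the validate-and-transform idea described above, here is a minimal sketch. It assumes a guardrail is a plain callable returning a `(success, value_or_feedback)` tuple; the function name `validate_average_age` and the plain-string input are hypothetical and chosen only for this example, so check the CrewAI task documentation for the exact signature and how the callable is attached to a `Task`.

```python
from typing import Any, Tuple


def validate_average_age(output: str) -> Tuple[bool, Any]:
    """Hypothetical guardrail: accept the output only if it is a plausible age."""
    try:
        value = float(output.strip())
    except ValueError:
        # Validation failed: the second element is feedback for the agent
        return (False, "Output must be a single number, e.g. 34.5")
    if not 0 <= value <= 130:
        return (False, f"Average age {value} is outside the plausible range 0-130")
    # Validation passed: hand the next task a normalized float instead of raw text
    return (True, round(value, 1))
```

On failure the second tuple element carries feedback text rather than a value, which is what lets the agent retry with a concrete hint.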

|

||||

|

||||

### Using Task Guardrails

|

||||

|

||||

|

||||

@@ -54,8 +54,7 @@ coding_agent = Agent(

|

||||

# Create a task that requires code execution

|

||||

data_analysis_task = Task(

|

||||

description="Analyze the given dataset and calculate the average age of participants. Ages: {ages}",

|

||||

agent=coding_agent,

|

||||

expected_output="The average age of the participants."

|

||||

agent=coding_agent

|

||||

)

|

||||

|

||||

# Create a crew and add the task

|

||||

@@ -117,4 +116,4 @@ async def async_multiple_crews():

|

||||

|

||||

# Run the async function

|

||||

asyncio.run(async_multiple_crews())

|

||||

```

|

||||

```

|

||||

@@ -1,100 +0,0 @@

|

||||

---

|

||||

title: Agent Monitoring with Langfuse

|

||||

description: Learn how to integrate Langfuse with CrewAI via OpenTelemetry using OpenLit

|

||||

icon: magnifying-glass-chart

|

||||

---

|

||||

|

||||

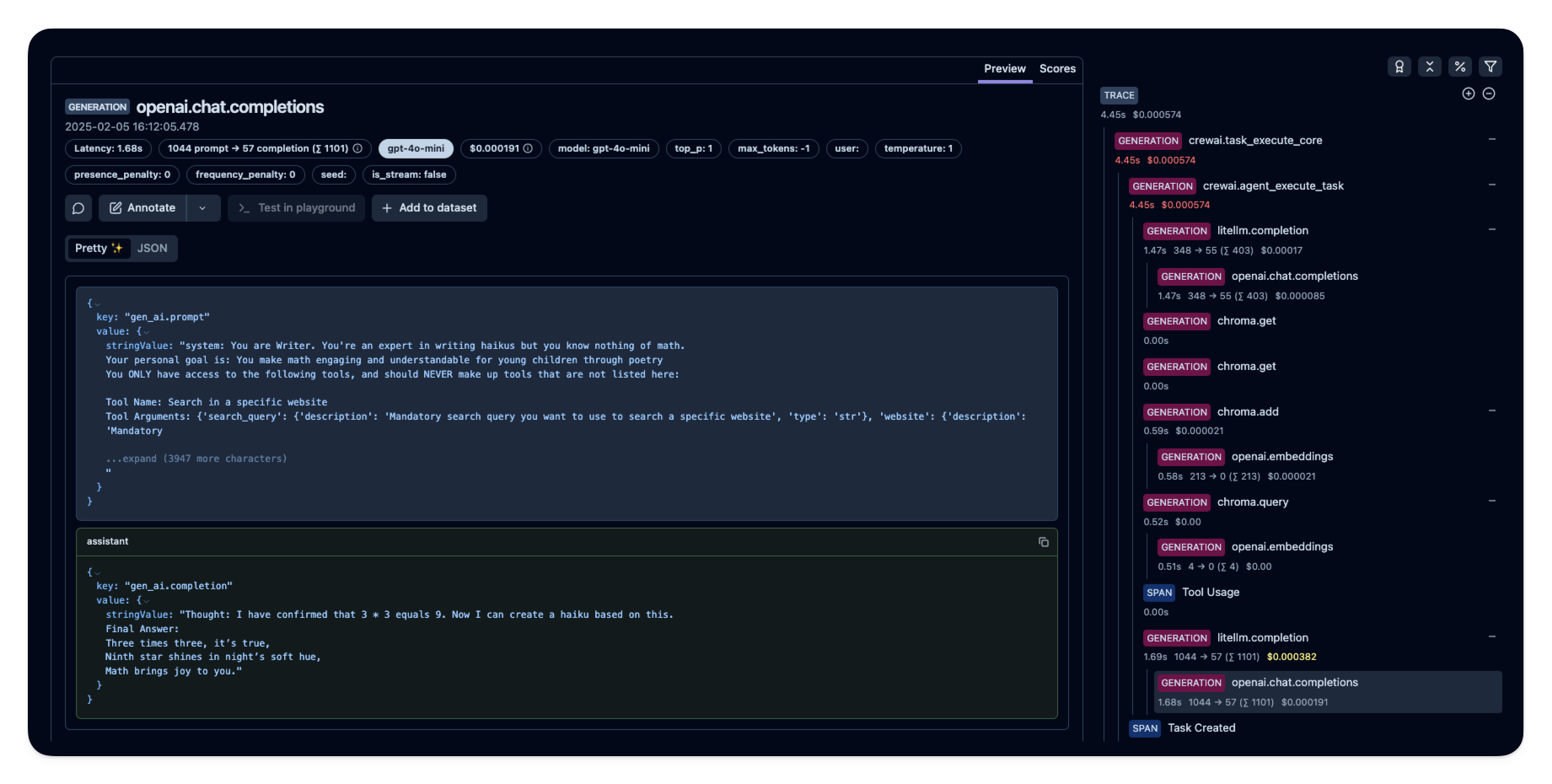

# Integrate Langfuse with CrewAI

|

||||

|

||||

This notebook demonstrates how to integrate **Langfuse** with **CrewAI** using OpenTelemetry via the **OpenLit** SDK. By the end of this notebook, you will be able to trace your CrewAI applications with Langfuse for improved observability and debugging.

|

||||

|

||||

> **What is Langfuse?** [Langfuse](https://langfuse.com) is an open-source LLM engineering platform. It provides tracing and monitoring capabilities for LLM applications, helping developers debug, analyze, and optimize their AI systems. Langfuse integrates with various tools and frameworks via native integrations, OpenTelemetry, and APIs/SDKs.

|

||||

|

||||

[](https://langfuse.com/watch-demo)

|

||||

|

||||

## Get Started

|

||||

|

||||

We'll walk through a simple example of using CrewAI and integrating it with Langfuse via OpenTelemetry using OpenLit.

|

||||

|

||||

### Step 1: Install Dependencies

|

||||

|

||||

|

||||

```python

|

||||

%pip install langfuse openlit crewai crewai_tools

|

||||

```

|

||||

|

||||

### Step 2: Set Up Environment Variables

|

||||

|

||||

Set your Langfuse API keys and configure OpenTelemetry export settings to send traces to Langfuse. Please refer to the [Langfuse OpenTelemetry Docs](https://langfuse.com/docs/opentelemetry/get-started) for more information on the Langfuse OpenTelemetry endpoint `/api/public/otel` and authentication.

|

||||

|

||||

|

||||

```python

|

||||

import os

|

||||

import base64

|

||||

|

||||

LANGFUSE_PUBLIC_KEY="pk-lf-..."

|

||||

LANGFUSE_SECRET_KEY="sk-lf-..."

|

||||

LANGFUSE_AUTH=base64.b64encode(f"{LANGFUSE_PUBLIC_KEY}:{LANGFUSE_SECRET_KEY}".encode()).decode()

|

||||

|

||||

os.environ["OTEL_EXPORTER_OTLP_ENDPOINT"] = "https://cloud.langfuse.com/api/public/otel" # EU data region

|

||||

# os.environ["OTEL_EXPORTER_OTLP_ENDPOINT"] = "https://us.cloud.langfuse.com/api/public/otel" # US data region

|

||||

os.environ["OTEL_EXPORTER_OTLP_HEADERS"] = f"Authorization=Basic {LANGFUSE_AUTH}"

|

||||

|

||||

# your openai key

|

||||

os.environ["OPENAI_API_KEY"] = "sk-..."

|

||||

```
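The header construction above can be wrapped in a small helper so the encoding step is easy to check in isolation. This is a sketch only; `build_otlp_basic_auth` is a hypothetical name, and the keys used here are placeholders rather than real credentials.

```python
import base64


def build_otlp_basic_auth(public_key: str, secret_key: str) -> str:
    """Return an OTEL_EXPORTER_OTLP_HEADERS value using HTTP Basic auth."""
    # Basic auth is base64("<public>:<secret>"), exactly as in the snippet above
    token = base64.b64encode(f"{public_key}:{secret_key}".encode()).decode()
    return f"Authorization=Basic {token}"


# Placeholder keys for illustration only
header = build_otlp_basic_auth("pk-lf-example", "sk-lf-example")
```

Decoding the token again should give back `pk-lf-example:sk-lf-example`, which is a quick way to confirm that neither key picked up stray whitespace.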

|

||||

|

||||

### Step 3: Initialize OpenLit

|

||||

|

||||

Initialize the OpenLit OpenTelemetry instrumentation SDK to start capturing OpenTelemetry traces.

|

||||

|

||||

|

||||

```python

|

||||

import openlit

|

||||

|

||||

openlit.init()

|

||||

```

|

||||

|

||||

### Step 4: Create a Simple CrewAI Application

|

||||

|

||||

We'll create a simple CrewAI application where multiple agents collaborate to answer a user's question.

|

||||

|

||||

|

||||

```python

|

||||

from crewai import Agent, Task, Crew

|

||||

|

||||

from crewai_tools import (

|

||||

WebsiteSearchTool

|

||||

)

|

||||

|

||||

web_rag_tool = WebsiteSearchTool()

|

||||

|

||||

writer = Agent(

|

||||

role="Writer",

|

||||

goal="You make math engaging and understandable for young children through poetry",

|

||||

backstory="You're an expert in writing haikus but you know nothing of math.",

|

||||

tools=[web_rag_tool],

|

||||

)

|

||||

|

||||

task = Task(description=("What is {multiplication}?"),

|

||||

expected_output=("Compose a haiku that includes the answer."),

|

||||

agent=writer)

|

||||

|

||||

crew = Crew(

|

||||

agents=[writer],

|

||||

tasks=[task],

|

||||

share_crew=False

|

||||

)

|

||||

```

|

||||

|

||||

### Step 5: See Traces in Langfuse

|

||||

|

||||

After running the agent, you can view the traces generated by your CrewAI application in [Langfuse](https://cloud.langfuse.com). You should see detailed steps of the LLM interactions, which can help you debug and optimize your AI agent.

|

||||

|

||||

|

||||

|

||||

_[Public example trace in Langfuse](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/e2cf380ffc8d47d28da98f136140642b?timestamp=2025-02-05T15%3A12%3A02.717Z&observation=3b32338ee6a5d9af)_

|

||||

|

||||

## References

|

||||

|

||||

- [Langfuse OpenTelemetry Docs](https://langfuse.com/docs/opentelemetry/get-started)

|

||||

211

docs/how-to/portkey-observability-and-guardrails.mdx

Normal file


@@ -0,0 +1,211 @@

|

||||

# Portkey Integration with CrewAI

|

||||

<img src="https://raw.githubusercontent.com/siddharthsambharia-portkey/Portkey-Product-Images/main/Portkey-CrewAI.png" alt="Portkey CrewAI Header Image" width="70%" />

|

||||

|

||||

|

||||

[Portkey](https://portkey.ai/?utm_source=crewai&utm_medium=crewai&utm_campaign=crewai) is a 2-line upgrade to make your CrewAI agents reliable, cost-efficient, and fast.

|

||||

|

||||

Portkey adds 4 core production capabilities to any CrewAI agent:

|

||||

1. Routing to **200+ LLMs**

|

||||

2. Making each LLM call more robust

|

||||

3. Full-stack tracing & cost, performance analytics

|

||||

4. Real-time guardrails to enforce behavior

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

## Getting Started

|

||||

|

||||

1. **Install Required Packages:**

|

||||

|

||||

```bash

|

||||

pip install -qU crewai portkey-ai

|

||||

```

|

||||

|

||||

2. **Configure the LLM Client:**

|

||||

|

||||

To build CrewAI Agents with Portkey, you'll need two keys:

|

||||

- **Portkey API Key**: Sign up on the [Portkey app](https://app.portkey.ai/?utm_source=crewai&utm_medium=crewai&utm_campaign=crewai) and copy your API key

|

||||

- **Virtual Key**: Virtual Keys securely manage your LLM API keys in one place. Store your LLM provider API keys securely in Portkey's vault

|

||||

|

||||

```python

|

||||

from crewai import LLM

|

||||

from portkey_ai import createHeaders, PORTKEY_GATEWAY_URL

|

||||

|

||||

gpt_llm = LLM(

|

||||

model="gpt-4",

|

||||

base_url=PORTKEY_GATEWAY_URL,

|

||||

api_key="dummy", # We are using Virtual key

|

||||

extra_headers=createHeaders(

|

||||

api_key="YOUR_PORTKEY_API_KEY",

|

||||

virtual_key="YOUR_VIRTUAL_KEY", # Enter your Virtual key from Portkey

|

||||

)

|

||||

)

|

||||

```

|

||||

|

||||

3. **Create and Run Your First Agent:**

|

||||

|

||||

```python

|

||||

from crewai import Agent, Task, Crew

|

||||

|

||||

# Define your agents with roles and goals

|

||||

coder = Agent(

|

||||

role='Software developer',

|

||||

goal='Write clear, concise code on demand',

|

||||

backstory='An expert coder with a keen eye for software trends.',

|

||||

llm=gpt_llm

|

||||

)

|

||||

|

||||

# Create tasks for your agents

|

||||

task1 = Task(

|

||||

description="Define the HTML for making a simple website with heading- Hello World! Portkey is working!",

|

||||

expected_output="A clear and concise HTML code",

|

||||

agent=coder

|

||||

)

|

||||

|

||||

# Instantiate your crew

|

||||

crew = Crew(

|

||||

agents=[coder],

|

||||

tasks=[task1],

|

||||

)

|

||||

|

||||

result = crew.kickoff()

|

||||

print(result)

|

||||

```

|

||||

|

||||

|

||||

## Key Features

|

||||

|

||||

| Feature | Description |

|

||||

|---------|-------------|

|

||||

| 🌐 Multi-LLM Support | Access OpenAI, Anthropic, Gemini, Azure, and 250+ providers through a unified interface |

|

||||

| 🛡️ Production Reliability | Implement retries, timeouts, load balancing, and fallbacks |

|

||||

| 📊 Advanced Observability | Track 40+ metrics including costs, tokens, latency, and custom metadata |

|

||||

| 🔍 Comprehensive Logging | Debug with detailed execution traces and function call logs |

|

||||

| 🚧 Security Controls | Set budget limits and implement role-based access control |

|

||||

| 🔄 Performance Analytics | Capture and analyze feedback for continuous improvement |

|

||||

| 💾 Intelligent Caching | Reduce costs and latency with semantic or simple caching |

|

||||

|

||||

|

||||

## Production Features with Portkey Configs

|

||||

|

||||

All features mentioned below are through Portkey's Config system. Portkey's Config system allows you to define routing strategies using simple JSON objects in your LLM API calls. You can create and manage Configs directly in your code or through the Portkey Dashboard. Each Config has a unique ID for easy reference.

|

||||

|

||||

<Frame>

|

||||

<img src="https://raw.githubusercontent.com/Portkey-AI/docs-core/refs/heads/main/images/libraries/libraries-3.avif"/>

|

||||

</Frame>

|

||||

|

||||

|

||||

### 1. Use 250+ LLMs

|

||||

Access various LLMs like Anthropic, Gemini, Mistral, Azure OpenAI, and more with minimal code changes. Switch between providers or use them together seamlessly. [Learn more about Universal API](https://portkey.ai/docs/product/ai-gateway/universal-api)

|

||||

|

||||

|

||||

Easily switch between different LLM providers:

|

||||

|

||||

```python

|

||||

# Anthropic Configuration

|

||||

anthropic_llm = LLM(

|

||||

model="claude-3-5-sonnet-latest",

|

||||

base_url=PORTKEY_GATEWAY_URL,

|

||||

api_key="dummy",

|

||||

extra_headers=createHeaders(

|

||||

api_key="YOUR_PORTKEY_API_KEY",

|

||||

virtual_key="YOUR_ANTHROPIC_VIRTUAL_KEY", #You don't need provider when using Virtual keys

|

||||

trace_id="anthropic_agent"

|

||||

)

|

||||

)

|

||||

|

||||

# Azure OpenAI Configuration

|

||||

azure_llm = LLM(

|

||||

model="gpt-4",

|

||||

base_url=PORTKEY_GATEWAY_URL,

|

||||

api_key="dummy",

|

||||

extra_headers=createHeaders(

|

||||

api_key="YOUR_PORTKEY_API_KEY",

|

||||

virtual_key="YOUR_AZURE_VIRTUAL_KEY", #You don't need provider when using Virtual keys

|

||||

trace_id="azure_agent"

|

||||

)

|

||||

)

|

||||

```

|

||||

|

||||

|

||||

### 2. Caching

|

||||

Improve response times and reduce costs with two powerful caching modes:

|

||||

- **Simple Cache**: Perfect for exact matches

|

||||

- **Semantic Cache**: Matches responses for requests that are semantically similar

|

||||

[Learn more about Caching](https://portkey.ai/docs/product/ai-gateway/cache-simple-and-semantic)

|

||||

|

||||

```py

|

||||

config = {

|

||||

"cache": {

|

||||

"mode": "semantic", # or "simple" for exact matching

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

### 3. Production Reliability

|

||||

Portkey provides comprehensive reliability features:

|

||||

- **Automatic Retries**: Handle temporary failures gracefully

|

||||

- **Request Timeouts**: Prevent hanging operations

|

||||

- **Conditional Routing**: Route requests based on specific conditions

|

||||

- **Fallbacks**: Set up automatic provider failovers

|

||||

- **Load Balancing**: Distribute requests efficiently

|

||||

|

||||

[Learn more about Reliability Features](https://portkey.ai/docs/product/ai-gateway/)
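To make the reliability features more concrete, here is a rough sketch of a Config object that combines retries, a fallback target, and caching. The field names (`retry.attempts`, `strategy.mode`, `targets`, `virtual_key`) are assumptions based on Portkey's Config schema and should be verified against the Portkey documentation; `build_reliability_config` and the key values are hypothetical.

```python
import json


def build_reliability_config(primary_key: str, fallback_key: str, retries: int = 3) -> dict:
    """Assemble a Config dict: retry first, then fall back to a second provider."""
    return {
        "retry": {"attempts": retries},        # assumed field name
        "strategy": {"mode": "fallback"},      # try targets in order until one succeeds
        "targets": [
            {"virtual_key": primary_key},
            {"virtual_key": fallback_key},
        ],
        "cache": {"mode": "simple"},           # or "semantic", as in the caching section
    }


config = build_reliability_config("YOUR_PRIMARY_VIRTUAL_KEY", "YOUR_FALLBACK_VIRTUAL_KEY")
print(json.dumps(config, indent=2))
```

Keeping the Config as plain JSON-serializable data means the same object can be stored in the Portkey Dashboard or built in code.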

|

||||

|

||||

|

||||

|

||||

### 4. Metrics

|

||||

|

||||

Agent runs are complex. Portkey automatically logs **40+ comprehensive metrics** for your AI agents, including cost, tokens used, latency, etc. Whether you need a broad overview or granular insights into your agent runs, Portkey's customizable filters provide the metrics you need.

|

||||

|

||||

|

||||

- Cost per agent interaction

|

||||

- Response times and latency

|

||||

- Token usage and efficiency

|

||||

- Success/failure rates

|

||||

- Cache hit rates

|

||||

|

||||

<img src="https://github.com/siddharthsambharia-portkey/Portkey-Product-Images/blob/main/Portkey-Dashboard.png?raw=true" width="70%" alt="Portkey Dashboard" />

|

||||

|

||||

### 5. Detailed Logging

|

||||

Logs are essential for understanding agent behavior, diagnosing issues, and improving performance. They provide a detailed record of agent activities and tool use, which is crucial for debugging and optimizing processes.

|

||||

|

||||

|

||||

Access a dedicated section to view records of agent executions, including parameters, outcomes, function calls, and errors. Filter logs based on multiple parameters such as trace ID, model, tokens used, and metadata.

|

||||

|

||||

<details>

|

||||

<summary><b>Traces</b></summary>

|

||||

<img src="https://raw.githubusercontent.com/siddharthsambharia-portkey/Portkey-Product-Images/main/Portkey-Traces.png" alt="Portkey Traces" width="70%" />

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary><b>Logs</b></summary>

|

||||

<img src="https://raw.githubusercontent.com/siddharthsambharia-portkey/Portkey-Product-Images/main/Portkey-Logs.png" alt="Portkey Logs" width="70%" />

|

||||

</details>

|

||||

|

||||

### 6. Enterprise Security Features

|

||||

- Set budget limits and rate limits per Virtual Key (disposable API keys)

|

||||

- Implement role-based access control

|

||||

- Track system changes with audit logs

|

||||

- Configure data retention policies

|

||||

|

||||

|

||||

|

||||

For detailed information on creating and managing Configs, visit the [Portkey documentation](https://docs.portkey.ai/product/ai-gateway/configs).

|

||||

|

||||

## Resources

|

||||

|

||||

- [📘 Portkey Documentation](https://docs.portkey.ai)

|

||||

- [📊 Portkey Dashboard](https://app.portkey.ai/?utm_source=crewai&utm_medium=crewai&utm_campaign=crewai)

|

||||

- [🐦 Twitter](https://twitter.com/portkeyai)

|

||||

- [💬 Discord Community](https://discord.gg/DD7vgKK299)

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

@@ -1,5 +1,5 @@

|

||||

---

|

||||

title: Agent Monitoring with Portkey

|

||||

title: Portkey Observability and Guardrails

|

||||

description: How to use Portkey with CrewAI

|

||||

icon: key

|

||||

---

|

||||

|

||||

45

docs/memory.md

Normal file


@@ -0,0 +1,45 @@

|

||||

# Memory in CrewAI

|

||||

|

||||

CrewAI provides a robust memory system that allows agents to retain and recall information from previous interactions.

|

||||

|

||||

## Configuring Embedding Providers

|

||||

|

||||

CrewAI supports multiple embedding providers for memory functionality:

|

||||

|

||||

- OpenAI (default) - Requires `OPENAI_API_KEY`

|

||||

- Ollama - Requires `CREWAI_OLLAMA_URL` (defaults to "http://localhost:11434/api/embeddings")

|

||||

|

||||

### Environment Variables

|

||||

|

||||

Configure the embedding provider using these environment variables:

|

||||

|

||||

- `CREWAI_EMBEDDING_PROVIDER`: Provider name (default: "openai")

|

||||

- `CREWAI_EMBEDDING_MODEL`: Model name (default: "text-embedding-3-small")

|

||||

- `CREWAI_OLLAMA_URL`: URL for Ollama API (when using Ollama provider)

|

||||

|

||||

### Example Configuration

|

||||

|

||||

```python

|

||||

import os

# Using OpenAI (default)

|

||||

os.environ["OPENAI_API_KEY"] = "your-api-key"

|

||||

|

||||

# Using Ollama

|

||||

os.environ["CREWAI_EMBEDDING_PROVIDER"] = "ollama"

|

||||

os.environ["CREWAI_EMBEDDING_MODEL"] = "llama2" # or any other model supported by your Ollama instance

|

||||

os.environ["CREWAI_OLLAMA_URL"] = "http://localhost:11434/api/embeddings" # optional, this is the default

|

||||

```
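For reference, the way these variables and their documented defaults combine can be sketched as a small resolver. This is not CrewAI's actual implementation; `resolve_embedding_settings` is a hypothetical helper that only mirrors the defaults listed above.

```python
import os
from typing import Mapping, Optional

DEFAULT_OLLAMA_URL = "http://localhost:11434/api/embeddings"


def resolve_embedding_settings(env: Optional[Mapping[str, str]] = None) -> dict:
    """Read the embedding settings, falling back to the documented defaults."""
    env = os.environ if env is None else env
    provider = env.get("CREWAI_EMBEDDING_PROVIDER", "openai")
    settings = {
        "provider": provider,
        "model": env.get("CREWAI_EMBEDDING_MODEL", "text-embedding-3-small"),
    }
    # The Ollama URL only matters when the Ollama provider is selected
    if provider == "ollama":
        settings["url"] = env.get("CREWAI_OLLAMA_URL", DEFAULT_OLLAMA_URL)
    return settings
```

Passing a plain dict instead of `os.environ` makes the fallback behaviour easy to test without touching the real environment.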

|

||||

|

||||

## Memory Usage

|

||||

|

||||

When an agent has memory enabled, it can access and store information from previous interactions:

|

||||

|

||||

```python

|

||||

from crewai import Agent

agent = Agent(

|

||||

role="Researcher",

|

||||

goal="Research AI topics",

|

||||

backstory="You're an AI researcher",

|

||||

memory=True # Enable memory for this agent

|

||||

)

|

||||

```

|

||||

|

||||

The memory system uses embeddings to store and retrieve relevant information, allowing agents to maintain context across multiple interactions and tasks.

|

||||

@@ -103,8 +103,7 @@

|

||||

"how-to/langtrace-observability",

|

||||

"how-to/mlflow-observability",

|

||||

"how-to/openlit-observability",

|

||||

"how-to/portkey-observability",

|

||||

"how-to/langfuse-observability"

|

||||

"how-to/portkey-observability"

|

||||

]

|

||||

},

|

||||

{

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

[project]

|

||||

name = "crewai"

|

||||

version = "0.102.0"

|

||||

version = "0.100.1"

|

||||

description = "Cutting-edge framework for orchestrating role-playing, autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks."

|

||||

readme = "README.md"

|

||||

requires-python = ">=3.10,<3.13"

|

||||

@@ -45,7 +45,7 @@ Documentation = "https://docs.crewai.com"

|

||||

Repository = "https://github.com/crewAIInc/crewAI"

|

||||

|

||||

[project.optional-dependencies]

|

||||

tools = ["crewai-tools>=0.36.0"]

|

||||

tools = ["crewai-tools>=0.32.1"]

|

||||

embeddings = [

|

||||

"tiktoken~=0.7.0"

|

||||

]

|

||||

|

||||

@@ -14,7 +14,7 @@ warnings.filterwarnings(

|

||||

category=UserWarning,

|

||||

module="pydantic.main",

|

||||

)

|

||||

__version__ = "0.102.0"

|

||||

__version__ = "0.100.1"

|

||||

__all__ = [

|

||||

"Agent",

|

||||

"Crew",

|

||||

|

||||

@@ -1,13 +1,14 @@

|

||||

import re

|

||||

import os

|

||||

import shutil

|

||||

import subprocess

|

||||

from typing import Any, Dict, List, Literal, Optional, Sequence, Union

|

||||

from typing import Any, Dict, List, Literal, Optional, Union

|

||||

|

||||

from pydantic import Field, InstanceOf, PrivateAttr, model_validator

|

||||

|

||||

from crewai.agents import CacheHandler

|

||||

from crewai.agents.agent_builder.base_agent import BaseAgent

|

||||

from crewai.agents.crew_agent_executor import CrewAgentExecutor

|

||||

from crewai.cli.constants import ENV_VARS, LITELLM_PARAMS

|

||||

from crewai.knowledge.knowledge import Knowledge

|

||||

from crewai.knowledge.source.base_knowledge_source import BaseKnowledgeSource

|

||||

from crewai.knowledge.utils.knowledge_utils import extract_knowledge_context

|

||||

@@ -16,20 +17,28 @@ from crewai.memory.contextual.contextual_memory import ContextualMemory

|

||||

from crewai.task import Task

|

||||

from crewai.tools import BaseTool

|

||||

from crewai.tools.agent_tools.agent_tools import AgentTools

|

||||

from crewai.tools.base_tool import Tool

|

||||

from crewai.utilities import Converter, Prompts

|

||||

from crewai.utilities.constants import TRAINED_AGENTS_DATA_FILE, TRAINING_DATA_FILE

|

||||

from crewai.utilities.converter import generate_model_description

|

||||

from crewai.utilities.events.agent_events import (

|

||||

AgentExecutionCompletedEvent,

|

||||

AgentExecutionErrorEvent,

|

||||

AgentExecutionStartedEvent,

|

||||

)

|

||||

from crewai.utilities.events.crewai_event_bus import crewai_event_bus

|

||||

from crewai.utilities.llm_utils import create_llm

|

||||

from crewai.utilities.token_counter_callback import TokenCalcHandler

|

||||

from crewai.utilities.training_handler import CrewTrainingHandler

|

||||

|

||||

agentops = None

|

||||

|

||||

try:

|

||||

import agentops # type: ignore # Name "agentops" is already defined

|

||||

from agentops import track_agent # type: ignore

|

||||

except ImportError:

|

||||

|

||||

def track_agent():

|

||||

def noop(f):

|

||||

return f

|

||||

|

||||

return noop

|

||||

|

||||

|

||||

@track_agent()

|

||||

class Agent(BaseAgent):

|

||||

"""Represents an agent in a system.

|

||||

|

||||

@@ -46,13 +55,13 @@ class Agent(BaseAgent):

|

||||

llm: The language model that will run the agent.

|

||||

function_calling_llm: The language model that will handle the tool calling for this agent, it overrides the crew function_calling_llm.

|

||||

max_iter: Maximum number of iterations for an agent to execute a task.

|

||||

memory: Whether the agent should have memory or not.

|

||||

max_rpm: Maximum number of requests per minute for the agent execution to be respected.

|

||||

verbose: Whether the agent execution should be in verbose mode.

|

||||

allow_delegation: Whether the agent is allowed to delegate tasks to other agents.

|

||||

tools: Tools at agents disposal

|

||||

step_callback: Callback to be executed after each step of the agent execution.

|

||||

knowledge_sources: Knowledge sources for the agent.

|

||||

embedder: Embedder configuration for the agent.

|

||||

"""

|

||||

|

||||

_times_executed: int = PrivateAttr(default=0)

|

||||

@@ -62,6 +71,9 @@ class Agent(BaseAgent):

|

||||

)

|

||||

agent_ops_agent_name: str = None # type: ignore # Incompatible types in assignment (expression has type "None", variable has type "str")

|

||||

agent_ops_agent_id: str = None # type: ignore # Incompatible types in assignment (expression has type "None", variable has type "str")

|

||||

cache_handler: InstanceOf[CacheHandler] = Field(

|

||||

default=None, description="An instance of the CacheHandler class."

|

||||

)

|

||||

step_callback: Optional[Any] = Field(

|

||||

default=None,

|

||||

description="Callback to be executed after each step of the agent execution.",

|

||||

@@ -73,7 +85,7 @@ class Agent(BaseAgent):

|

||||

llm: Union[str, InstanceOf[LLM], Any] = Field(

|

||||

description="Language model that will run the agent.", default=None

|

||||

)

|

||||

function_calling_llm: Optional[Union[str, InstanceOf[LLM], Any]] = Field(

|

||||

function_calling_llm: Optional[Any] = Field(

|

||||

description="Language model that will run the agent.", default=None

|

||||

)

|

||||

system_template: Optional[str] = Field(

|

||||

@@ -95,6 +107,10 @@ class Agent(BaseAgent):

|

||||

default=True,

|

||||

description="Keep messages under the context window size by summarizing content.",

|

||||

)

|

||||

max_iter: int = Field(

|

||||

default=20,

|

||||

description="Maximum number of iterations for an agent to execute a task before giving its best answer",

|

||||

)

|

||||

max_retry_limit: int = Field(

|

||||

default=2,

|

||||

description="Maximum number of retries for an agent to execute a task when an error occurs.",

|

||||

@@ -107,18 +123,105 @@ class Agent(BaseAgent):

|

||||

default="safe",

|

||||

description="Mode for code execution: 'safe' (using Docker) or 'unsafe' (direct execution).",

|

||||

)

|

||||

embedder: Optional[Dict[str, Any]] = Field(

|

||||

embedder_config: Optional[Dict[str, Any]] = Field(

|

||||

default=None,

|

||||

description="Embedder configuration for the agent.",

|

||||

)

|

||||

knowledge_sources: Optional[List[BaseKnowledgeSource]] = Field(

|

||||

default=None,

|

||||

description="Knowledge sources for the agent.",

|

||||

)

|

||||

_knowledge: Optional[Knowledge] = PrivateAttr(

|

||||

default=None,

|

||||

)

|

||||

|

||||

@model_validator(mode="after")

|

||||

def post_init_setup(self):

|

||||

self._set_knowledge()

|

||||

self.agent_ops_agent_name = self.role

|

||||

unaccepted_attributes = [

|

||||

"AWS_ACCESS_KEY_ID",

|

||||

"AWS_SECRET_ACCESS_KEY",

|

||||

"AWS_REGION_NAME",

|

||||

]

|

||||

|

||||

self.llm = create_llm(self.llm)

|

||||

if self.function_calling_llm and not isinstance(self.function_calling_llm, LLM):

|

||||

self.function_calling_llm = create_llm(self.function_calling_llm)

|

||||

# Handle different cases for self.llm

|

||||

if isinstance(self.llm, str):

|

||||

# If it's a string, create an LLM instance

|

||||

self.llm = LLM(model=self.llm)

|

||||

elif isinstance(self.llm, LLM):

|

||||

# If it's already an LLM instance, keep it as is

|

||||

pass

|

||||

elif self.llm is None:

|

||||

# Determine the model name from environment variables or use default

|

||||

model_name = (

|

||||

os.environ.get("OPENAI_MODEL_NAME")

|

||||

or os.environ.get("MODEL")

|

||||

or "gpt-4o-mini"

|

||||

)

|

||||

llm_params = {"model": model_name}

|

||||

|

||||

api_base = os.environ.get("OPENAI_API_BASE") or os.environ.get(

|

||||

"OPENAI_BASE_URL"

|

||||

)

|

||||

if api_base:

|

||||

llm_params["base_url"] = api_base

|

||||

|

||||

set_provider = model_name.split("/")[0] if "/" in model_name else "openai"

|

||||

|

||||

# Iterate over all environment variables to find matching API keys or use defaults

|

||||

for provider, env_vars in ENV_VARS.items():

|

||||

if provider == set_provider:

|

||||

for env_var in env_vars:

|

||||

# Check if the environment variable is set

|

||||

key_name = env_var.get("key_name")

|

||||

if key_name and key_name not in unaccepted_attributes:

|

||||

env_value = os.environ.get(key_name)

|

||||

if env_value:

|

||||

key_name = key_name.lower()

|

||||

for pattern in LITELLM_PARAMS:

|

||||

if pattern in key_name:

|

||||

key_name = pattern

|

||||

break

|

||||

llm_params[key_name] = env_value

|

||||

# Check for default values if the environment variable is not set

|

||||

elif env_var.get("default", False):

|

||||

for key, value in env_var.items():

|

||||

if key not in ["prompt", "key_name", "default"]:

|

||||

# Only add default if the key is already set in os.environ

|

||||

if key in os.environ:

|

||||

llm_params[key] = value

|

||||

|

||||

self.llm = LLM(**llm_params)

|

||||

else:

|

||||

# For any other type, attempt to extract relevant attributes

|

||||

llm_params = {

|

||||

"model": getattr(self.llm, "model_name", None)

|

||||

or getattr(self.llm, "deployment_name", None)

|

||||

or str(self.llm),

|

||||

"temperature": getattr(self.llm, "temperature", None),

|

||||

"max_tokens": getattr(self.llm, "max_tokens", None),

|

||||

"logprobs": getattr(self.llm, "logprobs", None),

|

||||

"timeout": getattr(self.llm, "timeout", None),

|

||||

"max_retries": getattr(self.llm, "max_retries", None),

|

||||

"api_key": getattr(self.llm, "api_key", None),

|

||||

"base_url": getattr(self.llm, "base_url", None),

|

||||

"organization": getattr(self.llm, "organization", None),

|

||||

}

|

||||

# Remove None values to avoid passing unnecessary parameters

|

||||

llm_params = {k: v for k, v in llm_params.items() if v is not None}

|

||||

self.llm = LLM(**llm_params)

|

||||

|

||||

# Similar handling for function_calling_llm

|

||||

if self.function_calling_llm:

|

||||

if isinstance(self.function_calling_llm, str):

|

||||

self.function_calling_llm = LLM(model=self.function_calling_llm)

|

||||

elif not isinstance(self.function_calling_llm, LLM):

|

||||

self.function_calling_llm = LLM(

|

||||

model=getattr(self.function_calling_llm, "model_name", None)

|

||||

or getattr(self.function_calling_llm, "deployment_name", None)

|

||||

or str(self.function_calling_llm)

|

||||

)

|

||||

|

||||

if not self.agent_executor:

|

||||

self._setup_agent_executor()
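
The environment-variable fallback in this hunk can be hard to follow once the diff markers are lost. Below is a minimal sketch of the same lookup order, assuming nothing beyond what the hunk shows: `OPENAI_MODEL_NAME`, then `MODEL`, then the `"gpt-4o-mini"` default, with the provider taken from the text before the first `/`. The helper names (`resolve_model_name`, `resolve_provider`, `resolve_base_url`) are hypothetical and are not part of crewAI.

```python
import os
from typing import Mapping, Optional


def resolve_model_name(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the model name using the lookup order shown in the hunk."""
    env = os.environ if env is None else env
    # Empty strings are falsy, so an empty variable falls through to the next one.
    return env.get("OPENAI_MODEL_NAME") or env.get("MODEL") or "gpt-4o-mini"


def resolve_provider(model_name: str) -> str:
    """Use the prefix before the first '/' as the provider, else 'openai'."""
    return model_name.split("/")[0] if "/" in model_name else "openai"


def resolve_base_url(env: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Prefer OPENAI_API_BASE, then OPENAI_BASE_URL; None when neither is set."""
    env = os.environ if env is None else env
    return env.get("OPENAI_API_BASE") or env.get("OPENAI_BASE_URL")
```

Passing a plain dict instead of `os.environ` keeps the sketch easy to exercise without touching the real environment.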

|

||||

@@ -133,22 +236,26 @@ class Agent(BaseAgent):

|

||||

self.cache_handler = CacheHandler()

|

||||

self.set_cache_handler(self.cache_handler)

|

||||

|

||||

def set_knowledge(self, crew_embedder: Optional[Dict[str, Any]] = None):

|

||||

def _set_knowledge(self):

|

||||

try:

|

||||

if self.embedder is None and crew_embedder:

|

||||

self.embedder = crew_embedder

|

||||

|

||||

if self.knowledge_sources:

|

||||

full_pattern = re.compile(r"[^a-zA-Z0-9\-_\r\n]|(\.\.)")

|

||||

knowledge_agent_name = f"{re.sub(full_pattern, '_', self.role)}"

|

||||

knowledge_agent_name = f"{self.role.replace(' ', '_')}"

|

||||

if isinstance(self.knowledge_sources, list) and all(

|

||||

isinstance(k, BaseKnowledgeSource) for k in self.knowledge_sources

|

||||

):

|

||||

self.knowledge = Knowledge(

|

||||

# Validate embedding configuration based on provider

|

||||

from crewai.utilities.constants import DEFAULT_EMBEDDING_PROVIDER

|

||||

provider = os.getenv("CREWAI_EMBEDDING_PROVIDER", DEFAULT_EMBEDDING_PROVIDER)

|

||||

|

||||

if provider == "openai" and not os.getenv("OPENAI_API_KEY"):

|

||||

raise ValueError("Please provide an OpenAI API key via OPENAI_API_KEY environment variable")

|

||||

elif provider == "ollama" and not os.getenv("CREWAI_OLLAMA_URL", "http://localhost:11434/api/embeddings"):

|

||||

raise ValueError("Please provide Ollama URL via CREWAI_OLLAMA_URL environment variable")

|

||||

|

||||

self._knowledge = Knowledge(

|

||||

sources=self.knowledge_sources,

|

||||

embedder=self.embedder,

|

||||

embedder_config=self.embedder_config,

|

||||

collection_name=knowledge_agent_name,

|

||||

storage=self.knowledge_storage or None,

|

||||

)

|

||||

except (TypeError, ValueError) as e:

|

||||

raise ValueError(f"Invalid Knowledge Configuration: {str(e)}")
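
Two ways of deriving the knowledge collection name from the agent role appear in this hunk: a regex substitution and a plain space replacement. The sketch below puts them side by side to show how they differ on unusual input. The function names are hypothetical; only the pattern and the `replace` call come from the diff.

```python
import re

# Pattern copied from the hunk: anything outside [a-zA-Z0-9-_\r\n], or "..".
_UNSAFE = re.compile(r"[^a-zA-Z0-9\-_\r\n]|(\.\.)")


def collection_name_regex(role: str) -> str:
    """Replace every character outside the allowed set with an underscore."""
    return _UNSAFE.sub("_", role)


def collection_name_spaces_only(role: str) -> str:
    """Replace spaces only; every other character is left untouched."""
    return role.replace(" ", "_")


if __name__ == "__main__":
    for role in ("Senior Data Analyst", "../etc"):
        print(role, collection_name_regex(role), collection_name_spaces_only(role))
```

For an ordinary role such as `Senior Data Analyst` both variants agree; they diverge only when the role contains punctuation or path-like characters.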

|

||||

@@ -182,15 +289,13 @@ class Agent(BaseAgent):

|

||||

if task.output_json:

|

||||

# schema = json.dumps(task.output_json, indent=2)

|

||||

schema = generate_model_description(task.output_json)

|

||||

task_prompt += "\n" + self.i18n.slice(

|

||||

"formatted_task_instructions"

|

||||

).format(output_format=schema)

|

||||

|

||||

elif task.output_pydantic:

|

||||

schema = generate_model_description(task.output_pydantic)

|

||||

task_prompt += "\n" + self.i18n.slice(

|

||||

"formatted_task_instructions"

|

||||

).format(output_format=schema)

|

||||

|

||||

task_prompt += "\n" + self.i18n.slice("formatted_task_instructions").format(

|

||||

output_format=schema

|

||||

)

|

||||

|

||||

if context:

|

||||

task_prompt = self.i18n.slice("task_with_context").format(

|

||||

@@ -209,8 +314,8 @@ class Agent(BaseAgent):

|

||||

if memory.strip() != "":

|

||||

task_prompt += self.i18n.slice("memory").format(memory=memory)

|

||||

|

||||

if self.knowledge:

|

||||

agent_knowledge_snippets = self.knowledge.query([task.prompt()])

|

||||

if self._knowledge:

|

||||

agent_knowledge_snippets = self._knowledge.query([task.prompt()])

|

||||

if agent_knowledge_snippets:

|

||||

agent_knowledge_context = extract_knowledge_context(

|

||||

agent_knowledge_snippets

|

||||

@@ -234,15 +339,6 @@ class Agent(BaseAgent):

|

||||

task_prompt = self._use_trained_data(task_prompt=task_prompt)

|

||||

|

||||

try:

|

||||

crewai_event_bus.emit(

|

||||

self,

|

||||

event=AgentExecutionStartedEvent(

|

||||

agent=self,

|

||||

tools=self.tools,

|

||||

task_prompt=task_prompt,

|

||||

task=task,

|

||||

),

|

||||

)

|

||||

result = self.agent_executor.invoke(

|

||||

{

|

||||

"input": task_prompt,

|

||||

@@ -252,27 +348,8 @@ class Agent(BaseAgent):

|

||||

}

|

||||

)["output"]

|

||||

except Exception as e:

|

||||

if e.__class__.__module__.startswith("litellm"):

|

||||

# Do not retry on litellm errors

|

||||

crewai_event_bus.emit(

|

||||

self,

|

||||

event=AgentExecutionErrorEvent(

|

||||

agent=self,

|

||||

task=task,

|

||||

error=str(e),

|

||||

),

|

||||

)

|

||||

raise e

|

||||

self._times_executed += 1

|

||||

if self._times_executed > self.max_retry_limit:

|

||||

crewai_event_bus.emit(

|

||||

self,

|

||||

event=AgentExecutionErrorEvent(

|

||||

agent=self,

|

||||

task=task,

|

||||

error=str(e),

|

||||

),

|

||||

)

|

||||

raise e

|

||||

result = self.execute_task(task, context, tools)

|

||||

|

||||

@@ -285,10 +362,7 @@ class Agent(BaseAgent):

|

||||

for tool_result in self.tools_results: # type: ignore # Item "None" of "list[Any] | None" has no attribute "__iter__" (not iterable)

|

||||

if tool_result.get("result_as_answer", False):

|

||||

result = tool_result["result"]

|

||||

crewai_event_bus.emit(

|

||||

self,

|

||||

event=AgentExecutionCompletedEvent(agent=self, task=task, output=result),

|

||||

)

|

||||

|

||||

return result

|

||||

|

||||

def create_agent_executor(

|

||||

@@ -346,14 +420,13 @@ class Agent(BaseAgent):

|

||||

tools = agent_tools.tools()

|

||||

return tools

|

||||

|

||||

def get_multimodal_tools(self) -> Sequence[BaseTool]:

|

||||

def get_multimodal_tools(self) -> List[Tool]:

|

||||

from crewai.tools.agent_tools.add_image_tool import AddImageTool

|

||||

|

||||

return [AddImageTool()]

|

||||

|

||||

def get_code_execution_tools(self):

|

||||

try:

|

||||

from crewai_tools import CodeInterpreterTool # type: ignore

|

||||

from crewai_tools import CodeInterpreterTool

|

||||

|

||||

# Set the unsafe_mode based on the code_execution_mode attribute

|

||||

unsafe_mode = self.code_execution_mode == "unsafe"

|

||||

|

||||

@@ -20,7 +20,8 @@ from crewai.agents.cache.cache_handler import CacheHandler

|

||||

from crewai.agents.tools_handler import ToolsHandler

|

||||

from crewai.knowledge.knowledge import Knowledge

|

||||

from crewai.knowledge.source.base_knowledge_source import BaseKnowledgeSource

|

||||

from crewai.tools.base_tool import BaseTool, Tool

|

||||

from crewai.tools import BaseTool

|

||||

from crewai.tools.base_tool import Tool

|

||||

from crewai.utilities import I18N, Logger, RPMController

|

||||

from crewai.utilities.config import process_config

|

||||

from crewai.utilities.converter import Converter

|

||||

@@ -111,8 +112,8 @@ class BaseAgent(ABC, BaseModel):

|

||||

default=False,

|

||||

description="Enable agent to delegate and ask questions among each other.",

|

||||

)

|

||||

tools: Optional[List[BaseTool]] = Field(

|

||||

default_factory=list, description="Tools at agents' disposal"

|

||||

tools: Optional[List[Any]] = Field(

|

||||

default_factory=lambda: [], description="Tools at agents' disposal"

|

||||

)

|

||||

max_iter: int = Field(

|

||||

default=25, description="Maximum iterations for an agent to execute a task"

|

||||

@@ -351,6 +352,3 @@ class BaseAgent(ABC, BaseModel):

|

||||

if not self._rpm_controller:

|

||||

self._rpm_controller = rpm_controller

|

||||

self.create_agent_executor()

|

||||

|

||||

def set_knowledge(self, crew_embedder: Optional[Dict[str, Any]] = None):

|

||||

pass

|

||||

|

||||

@@ -114,15 +114,10 @@ class CrewAgentExecutorMixin:

|

||||

prompt = (

|

||||

"\n\n=====\n"

|

||||

"## HUMAN FEEDBACK: Provide feedback on the Final Result and Agent's actions.\n"

|

||||

"Please follow these guidelines:\n"

|

||||

" - If you are happy with the result, simply hit Enter without typing anything.\n"

|

||||

" - Otherwise, provide specific improvement requests.\n"

|

||||

" - You can provide multiple rounds of feedback until satisfied.\n"

|

||||

"Respond with 'looks good' to accept or provide specific improvement requests.\n"

|

||||

"You can provide multiple rounds of feedback until satisfied.\n"

|

||||

"=====\n"

|

||||

)

|

||||

|

||||

self._printer.print(content=prompt, color="bold_yellow")

|

||||

response = input()

|

||||

if response.strip() != "":

|

||||

self._printer.print(content="\nProcessing your feedback...", color="cyan")

|

||||

return response

|

||||

return input()

|

||||

|

||||

@@ -31,11 +31,11 @@ class OutputConverter(BaseModel, ABC):

|

||||

)

|

||||

|

||||

@abstractmethod

|

||||

def to_pydantic(self, current_attempt=1) -> BaseModel:

|

||||

def to_pydantic(self, current_attempt=1):

|

||||

"""Convert text to pydantic."""

|

||||

pass

|

||||

|

||||

@abstractmethod

|

||||

def to_json(self, current_attempt=1) -> dict:

|

||||

def to_json(self, current_attempt=1):

|

||||

"""Convert text to json."""

|

||||

pass

|

||||

|

||||

@@ -18,12 +18,6 @@ from crewai.tools.base_tool import BaseTool

|

||||

from crewai.tools.tool_usage import ToolUsage, ToolUsageErrorException

|

||||

from crewai.utilities import I18N, Printer

|

||||

from crewai.utilities.constants import MAX_LLM_RETRY, TRAINING_DATA_FILE

|

||||

from crewai.utilities.events import (

|

||||

ToolUsageErrorEvent,

|

||||

ToolUsageStartedEvent,

|

||||

crewai_event_bus,

|

||||

)

|

||||

from crewai.utilities.events.tool_usage_events import ToolUsageStartedEvent

|

||||

from crewai.utilities.exceptions.context_window_exceeding_exception import (

|

||||

LLMContextLengthExceededException,

|

||||

)

|

||||

@@ -113,11 +107,11 @@ class CrewAgentExecutor(CrewAgentExecutorMixin):

|

||||

)

|

||||

raise

|

||||

except Exception as e:

|

||||

self._handle_unknown_error(e)

|

||||

if e.__class__.__module__.startswith("litellm"):

|

||||

# Do not retry on litellm errors

|

||||

raise e

|

||||

else:

|

||||

self._handle_unknown_error(e)

|

||||

raise e

|

||||

|

||||

if self.ask_for_human_input:

|

||||

@@ -355,68 +349,40 @@ class CrewAgentExecutor(CrewAgentExecutorMixin):

|

||||

)

|

||||

|

||||

def _execute_tool_and_check_finality(self, agent_action: AgentAction) -> ToolResult:

|

||||

try:

|

||||

if self.agent:

|

||||

crewai_event_bus.emit(

|

||||

self,

|

||||

event=ToolUsageStartedEvent(

|

||||

agent_key=self.agent.key,

|

||||

agent_role=self.agent.role,

|

||||

tool_name=agent_action.tool,

|

||||

tool_args=agent_action.tool_input,

|

||||

tool_class=agent_action.tool,

|

||||

),

|

||||

)

|

||||

tool_usage = ToolUsage(

|

||||

tools_handler=self.tools_handler,

|

||||

tools=self.tools,

|

||||

original_tools=self.original_tools,

|

||||

tools_description=self.tools_description,

|

||||

tools_names=self.tools_names,

|

||||

function_calling_llm=self.function_calling_llm,

|

||||

task=self.task, # type: ignore[arg-type]

|

||||

agent=self.agent,

|

||||

action=agent_action,

|

||||

)

|

||||

tool_calling = tool_usage.parse_tool_calling(agent_action.text)

|

||||

tool_usage = ToolUsage(

|

||||

tools_handler=self.tools_handler,

|

||||

tools=self.tools,

|

||||

original_tools=self.original_tools,

|

||||

tools_description=self.tools_description,

|

||||

tools_names=self.tools_names,

|

||||

function_calling_llm=self.function_calling_llm,

|

||||

task=self.task, # type: ignore[arg-type]

|

||||

agent=self.agent,

|

||||

action=agent_action,

|

||||

)

|

||||

tool_calling = tool_usage.parse_tool_calling(agent_action.text)

|

||||

|

||||

if isinstance(tool_calling, ToolUsageErrorException):

|

||||

tool_result = tool_calling.message

|

||||

return ToolResult(result=tool_result, result_as_answer=False)

|

||||

else:

|

||||

if tool_calling.tool_name.casefold().strip() in [

|

||||

name.casefold().strip() for name in self.tool_name_to_tool_map

|

||||

] or tool_calling.tool_name.casefold().replace("_", " ") in [

|

||||

name.casefold().strip() for name in self.tool_name_to_tool_map

|

||||

]:

|

||||

tool_result = tool_usage.use(tool_calling, agent_action.text)

|

||||

tool = self.tool_name_to_tool_map.get(tool_calling.tool_name)

|

||||

if tool:

|

||||

return ToolResult(

|

||||

result=tool_result, result_as_answer=tool.result_as_answer

|

||||

)

|

||||

else:

|

||||

tool_result = self._i18n.errors("wrong_tool_name").format(

|

||||

tool=tool_calling.tool_name,

|

||||

tools=", ".join([tool.name.casefold() for tool in self.tools]),

|

||||

if isinstance(tool_calling, ToolUsageErrorException):

|

||||

tool_result = tool_calling.message

|

||||

return ToolResult(result=tool_result, result_as_answer=False)

|

||||

else:

|

||||

if tool_calling.tool_name.casefold().strip() in [

|

||||

name.casefold().strip() for name in self.tool_name_to_tool_map

|

||||

] or tool_calling.tool_name.casefold().replace("_", " ") in [

|

||||

name.casefold().strip() for name in self.tool_name_to_tool_map

|

||||

]:

|

||||

tool_result = tool_usage.use(tool_calling, agent_action.text)

|

||||

tool = self.tool_name_to_tool_map.get(tool_calling.tool_name)

|

||||

if tool:

|

||||

return ToolResult(

|

||||

result=tool_result, result_as_answer=tool.result_as_answer

|

||||

)

|

||||

return ToolResult(result=tool_result, result_as_answer=False)

|

||||

|

||||

except Exception as e:

|

||||

# TODO: drop

|

||||

if self.agent:

|

||||

crewai_event_bus.emit(

|

||||

self,

|

||||

event=ToolUsageErrorEvent( # validation error

|

||||

agent_key=self.agent.key,

|

||||

agent_role=self.agent.role,

|

||||

tool_name=agent_action.tool,

|

||||

tool_args=agent_action.tool_input,

|

||||

tool_class=agent_action.tool,

|

||||

error=str(e),

|

||||

),

|

||||

else:

|

||||

tool_result = self._i18n.errors("wrong_tool_name").format(

|

||||

tool=tool_calling.tool_name,

|

||||

tools=", ".join([tool.name.casefold() for tool in self.tools]),

|

||||

)

|

||||

raise e

|

||||

return ToolResult(result=tool_result, result_as_answer=False)
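
The tool lookup above accepts a requested name if it matches a known tool after case folding, either directly or with underscores read as spaces. A minimal sketch of that comparison in isolation, assuming a plain list of names in place of `self.tool_name_to_tool_map` (the function name `tool_name_matches` is hypothetical):

```python
from typing import Iterable


def tool_name_matches(requested: str, known_names: Iterable[str]) -> bool:
    """Case-insensitive match, also treating '_' in the request as a space."""
    known = [name.casefold().strip() for name in known_names]
    return (
        requested.casefold().strip() in known
        or requested.casefold().replace("_", " ") in known
    )
```

Note that in the diff the later `self.tool_name_to_tool_map.get(tool_calling.tool_name)` call uses the unnormalized name, so a request can pass this check and still miss the exact-key lookup.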

|

||||

|

||||

def _summarize_messages(self) -> None:

|

||||

messages_groups = []

|

||||

@@ -548,6 +514,10 @@ class CrewAgentExecutor(CrewAgentExecutorMixin):

|

||||

self, initial_answer: AgentFinish, feedback: str

|

||||

) -> AgentFinish:

|

||||

"""Process feedback for training scenarios with single iteration."""

|

||||

self._printer.print(

|

||||

content="\nProcessing training feedback.\n",

|

||||

color="yellow",

|

||||

)

|

||||

self._handle_crew_training_output(initial_answer, feedback)

|

||||

self.messages.append(

|

||||

self._format_msg(

|

||||

@@ -567,8 +537,9 @@ class CrewAgentExecutor(CrewAgentExecutorMixin):

|

||||

answer = current_answer

|

||||

|

||||

while self.ask_for_human_input:

|

||||

# If the user provides a blank response, assume they are happy with the result

|

||||

if feedback.strip() == "":

|

||||

response = self._get_llm_feedback_response(feedback)

|

||||

|

||||

if not self._feedback_requires_changes(response):

|

||||

self.ask_for_human_input = False

|

||||

else:

|

||||

answer = self._process_feedback_iteration(feedback)

|

||||

@@ -576,6 +547,27 @@ class CrewAgentExecutor(CrewAgentExecutorMixin):

|

||||

|

||||

return answer

|

||||

|

||||

def _get_llm_feedback_response(self, feedback: str) -> Optional[str]:

|

||||

"""Get LLM classification of whether feedback requires changes."""

|

||||

prompt = self._i18n.slice("human_feedback_classification").format(

|

||||

feedback=feedback

|

||||

)

|

||||

message = self._format_msg(prompt, role="system")

|

||||

|

||||

for retry in range(MAX_LLM_RETRY):

|

||||

try:

|

||||

response = self.llm.call([message], callbacks=self.callbacks)

|

||||

return response.strip().lower() if response else None

|

||||

except Exception as error:

|

||||

self._log_feedback_error(retry, error)

|

||||

|

||||

self._log_max_retries_exceeded()

|

||||

return None

|

||||

|

||||

def _feedback_requires_changes(self, response: Optional[str]) -> bool:

|

||||

"""Determine if feedback response indicates need for changes."""

|

||||

return response == "true" if response else False
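
The feedback loop in this hunk asks the LLM to classify the human feedback and keeps iterating only when the normalized reply is exactly `"true"`. The sketch below isolates that retry-and-classify logic under stated assumptions: `call_llm` is a hypothetical callable standing in for `self.llm.call`, and the retry count of 3 is an assumed value, since the diff imports `MAX_LLM_RETRY` without showing its value.

```python
from typing import Callable, Optional

MAX_LLM_RETRY = 3  # assumed value; the real constant lives in crewai.utilities.constants


def classify_feedback(
    call_llm: Callable[[str], Optional[str]],
    feedback: str,
    max_retries: int = MAX_LLM_RETRY,
) -> Optional[str]:
    """Return the normalized LLM reply, retrying only when the call raises."""
    for _attempt in range(max_retries):
        try:
            response = call_llm(feedback)
            # An empty reply is returned as None immediately, without a retry.
            return response.strip().lower() if response else None
        except Exception:
            continue  # the diff logs the error here before retrying
    return None


def feedback_requires_changes(response: Optional[str]) -> bool:
    """Only the exact string 'true' counts as a request for changes."""
    return response == "true" if response else False
```

One consequence of this design is that a failed or unrecognized classification is treated the same as "no changes needed", which ends the feedback loop.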

|

||||

|

||||

def _process_feedback_iteration(self, feedback: str) -> AgentFinish:

|

||||

"""Process a single feedback iteration."""

|

||||

self.messages.append(

|

||||

|

||||

@@ -94,13 +94,6 @@ class CrewAgentParser:

|

||||

|

||||

elif includes_answer:

|

||||

final_answer = text.split(FINAL_ANSWER_ACTION)[-1].strip()

|

||||

# Check whether the final answer ends with triple backticks.

|

||||

if final_answer.endswith("```"):

|

||||

# Count occurrences of triple backticks in the final answer.

|

||||

count = final_answer.count("```")

|

||||

# If count is odd then it's an unmatched trailing set; remove it.

|

||||

if count % 2 != 0:

|

||||

final_answer = final_answer[:-3].rstrip()

|

||||

return AgentFinish(thought, final_answer, text)

|

||||

|

||||

if not re.search(r"Action\s*\d*\s*:[\s]*(.*?)", text, re.DOTALL):

|

||||

@@ -127,10 +120,7 @@ class CrewAgentParser:

|

||||

regex = r"(.*?)(?:\n\nAction|\n\nFinal Answer)"

|

||||

thought_match = re.search(regex, text, re.DOTALL)

|

||||

if thought_match:

|

||||

thought = thought_match.group(1).strip()

|

||||

# Remove any triple backticks from the thought string

|

||||

thought = thought.replace("```", "").strip()

|

||||

return thought

|

||||

return thought_match.group(1).strip()

|

||||

return ""
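
The two parser hunks above deal with stray triple backticks: one drops an unmatched trailing fence from the final answer, the other removes backticks from the extracted thought. A minimal standalone sketch of both steps follows; the function names are hypothetical, and the logic mirrors the lines shown in the diff.

```python
FENCE = "`" * 3  # a triple backtick, built this way to keep the sketch readable


def strip_unmatched_trailing_fence(final_answer: str) -> str:
    """Drop a trailing fence only when the total number of fences is odd."""
    if final_answer.endswith(FENCE) and final_answer.count(FENCE) % 2 != 0:
        return final_answer[:-3].rstrip()
    return final_answer


def clean_thought(thought: str) -> str:
    """Remove every fence from the thought text and trim whitespace."""
    return thought.replace(FENCE, "").strip()
```

Counting fences before stripping keeps a properly closed code block in the answer intact, since an even count means the trailing fence has a matching opener.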

|

||||

|

||||

def _clean_action(self, text: str) -> str:

|

||||

|

||||

@@ -216,43 +216,10 @@ MODELS = {

|

||||

"watsonx/ibm/granite-3-8b-instruct",

|

||||

],

|

||||

"bedrock": [

|

||||

"bedrock/us.amazon.nova-pro-v1:0",

|

||||

"bedrock/us.amazon.nova-micro-v1:0",

|

||||

"bedrock/us.amazon.nova-lite-v1:0",

|

||||

"bedrock/us.anthropic.claude-3-5-sonnet-20240620-v1:0",

|

||||

"bedrock/us.anthropic.claude-3-5-haiku-20241022-v1:0",

|

||||

"bedrock/us.anthropic.claude-3-5-sonnet-20241022-v2:0",

|

||||

"bedrock/us.anthropic.claude-3-7-sonnet-20250219-v1:0",

|

||||

"bedrock/us.anthropic.claude-3-sonnet-20240229-v1:0",

|

||||

"bedrock/us.anthropic.claude-3-opus-20240229-v1:0",

|

||||

"bedrock/us.anthropic.claude-3-haiku-20240307-v1:0",

|

||||

"bedrock/us.meta.llama3-2-11b-instruct-v1:0",

|

||||

"bedrock/us.meta.llama3-2-3b-instruct-v1:0",

|

||||

"bedrock/us.meta.llama3-2-90b-instruct-v1:0",

|

||||

"bedrock/us.meta.llama3-2-1b-instruct-v1:0",

|

||||

"bedrock/us.meta.llama3-1-8b-instruct-v1:0",

|

||||

"bedrock/us.meta.llama3-1-70b-instruct-v1:0",

|

||||

"bedrock/us.meta.llama3-3-70b-instruct-v1:0",

|

||||

"bedrock/us.meta.llama3-1-405b-instruct-v1:0",

|

||||

"bedrock/eu.anthropic.claude-3-5-sonnet-20240620-v1:0",

|

||||

"bedrock/eu.anthropic.claude-3-sonnet-20240229-v1:0",

|

||||

"bedrock/eu.anthropic.claude-3-haiku-20240307-v1:0",

|

||||

"bedrock/eu.meta.llama3-2-3b-instruct-v1:0",

|

||||

"bedrock/eu.meta.llama3-2-1b-instruct-v1:0",

|

||||

"bedrock/apac.anthropic.claude-3-5-sonnet-20240620-v1:0",

|

||||

"bedrock/apac.anthropic.claude-3-5-sonnet-20241022-v2:0",

|

||||

"bedrock/apac.anthropic.claude-3-sonnet-20240229-v1:0",

|

||||

"bedrock/apac.anthropic.claude-3-haiku-20240307-v1:0",

|

||||

"bedrock/amazon.nova-pro-v1:0",

|

||||

"bedrock/amazon.nova-micro-v1:0",

|

||||

"bedrock/amazon.nova-lite-v1:0",

|

||||

"bedrock/anthropic.claude-3-5-sonnet-20240620-v1:0",

|

||||

"bedrock/anthropic.claude-3-5-haiku-20241022-v1:0",

|

||||

"bedrock/anthropic.claude-3-5-sonnet-20241022-v2:0",

|

||||

"bedrock/anthropic.claude-3-7-sonnet-20250219-v1:0",

|

||||

"bedrock/anthropic.claude-3-sonnet-20240229-v1:0",

|

||||

"bedrock/anthropic.claude-3-opus-20240229-v1:0",

|

||||

"bedrock/anthropic.claude-3-haiku-20240307-v1:0",

|

||||

"bedrock/anthropic.claude-3-opus-20240229-v1:0",

|

||||

"bedrock/anthropic.claude-v2:1",

|

||||

"bedrock/anthropic.claude-v2",

|

||||

"bedrock/anthropic.claude-instant-v1",

|

||||

@@ -267,6 +234,8 @@ MODELS = {

|

||||

"bedrock/ai21.j2-mid-v1",

|

||||

"bedrock/ai21.j2-ultra-v1",

|

||||

"bedrock/ai21.jamba-instruct-v1:0",

|

||||

"bedrock/meta.llama2-13b-chat-v1",

|

||||

"bedrock/meta.llama2-70b-chat-v1",

|

||||

"bedrock/mistral.mistral-7b-instruct-v0:2",

|

||||

"bedrock/mistral.mixtral-8x7b-instruct-v0:1",

|

||||

],

|

||||

|

||||

@@ -56,8 +56,7 @@ def test():

|

||||

Test the crew execution and returns the results.

|

||||

"""

|

||||

inputs = {

|

||||

"topic": "AI LLMs",

|

||||

"current_year": str(datetime.now().year)

|

||||

"topic": "AI LLMs"

|

||||

}

|

||||

try:

|

||||

{{crew_name}}().crew().test(n_iterations=int(sys.argv[1]), openai_model_name=sys.argv[2], inputs=inputs)

|

||||

|

||||

@@ -5,7 +5,7 @@ description = "{{name}} using crewAI"

|

||||

authors = [{ name = "Your Name", email = "you@example.com" }]

|

||||

requires-python = ">=3.10,<3.13"

|

||||

dependencies = [

|

||||

"crewai[tools]>=0.102.0,<1.0.0"

|

||||

"crewai[tools]>=0.100.1,<1.0.0"

|

||||

]

|

||||

|

||||

[project.scripts]

|

||||

|

||||

@@ -5,7 +5,7 @@ description = "{{name}} using crewAI"

|

||||

authors = [{ name = "Your Name", email = "you@example.com" }]

|

||||

requires-python = ">=3.10,<3.13"

|

||||

dependencies = [

|

||||

"crewai[tools]>=0.102.0,<1.0.0",

|

||||

"crewai[tools]>=0.100.1,<1.0.0",

|

||||

]

|

||||

|

||||

[project.scripts]

|

||||

|

||||

@@ -5,7 +5,7 @@ description = "Power up your crews with {{folder_name}}"

|

||||

readme = "README.md"

|

||||

requires-python = ">=3.10,<3.13"

|

||||

dependencies = [

|

||||

"crewai[tools]>=0.102.0"

|

||||

"crewai[tools]>=0.100.1"

|

||||

]

|

||||

|

||||

[tool.crewai]

|

||||

|

||||

@@ -257,11 +257,11 @@ def get_crew(crew_path: str = "crew.py", require: bool = False) -> Crew | None:

|

||||

import os

|

||||

|

||||

for root, _, files in os.walk("."):

|

||||

if crew_path in files:

|

||||

crew_os_path = os.path.join(root, crew_path)

|

||||

if "crew.py" in files:

|

||||

crew_path = os.path.join(root, "crew.py")

|

||||

try:

|

||||

spec = importlib.util.spec_from_file_location(

|

||||

"crew_module", crew_os_path

|

||||

"crew_module", crew_path

|

||||

)

|

||||

if not spec or not spec.loader:

|

||||

continue

|

||||

@@ -273,11 +273,9 @@ def get_crew(crew_path: str = "crew.py", require: bool = False) -> Crew | None:

|

||||

for attr_name in dir(module):

|

||||

attr = getattr(module, attr_name)

|

||||

try:

|

||||

if isinstance(attr, Crew) and hasattr(attr, "kickoff"):

|

||||

print(

|

||||

f"Found valid crew object in attribute '{attr_name}' at {crew_os_path}."

|

||||

)

|

||||

return attr

|

||||

if callable(attr) and hasattr(attr, "crew"):

|

||||

crew_instance = attr().crew()

|

||||

return crew_instance

|

||||

|

||||

except Exception as e:

|

||||

print(f"Error processing attribute {attr_name}: {e}")

|

||||

|

||||

@@ -1,12 +1,10 @@

|

||||

import asyncio

|

||||

import json

|

||||

import re

|

||||

import uuid

|

||||

import warnings

|

||||

from concurrent.futures import Future

|

||||

from copy import copy as shallow_copy

|

||||

from hashlib import md5

|

||||

from typing import Any, Callable, Dict, List, Optional, Set, Tuple, Union

|

||||