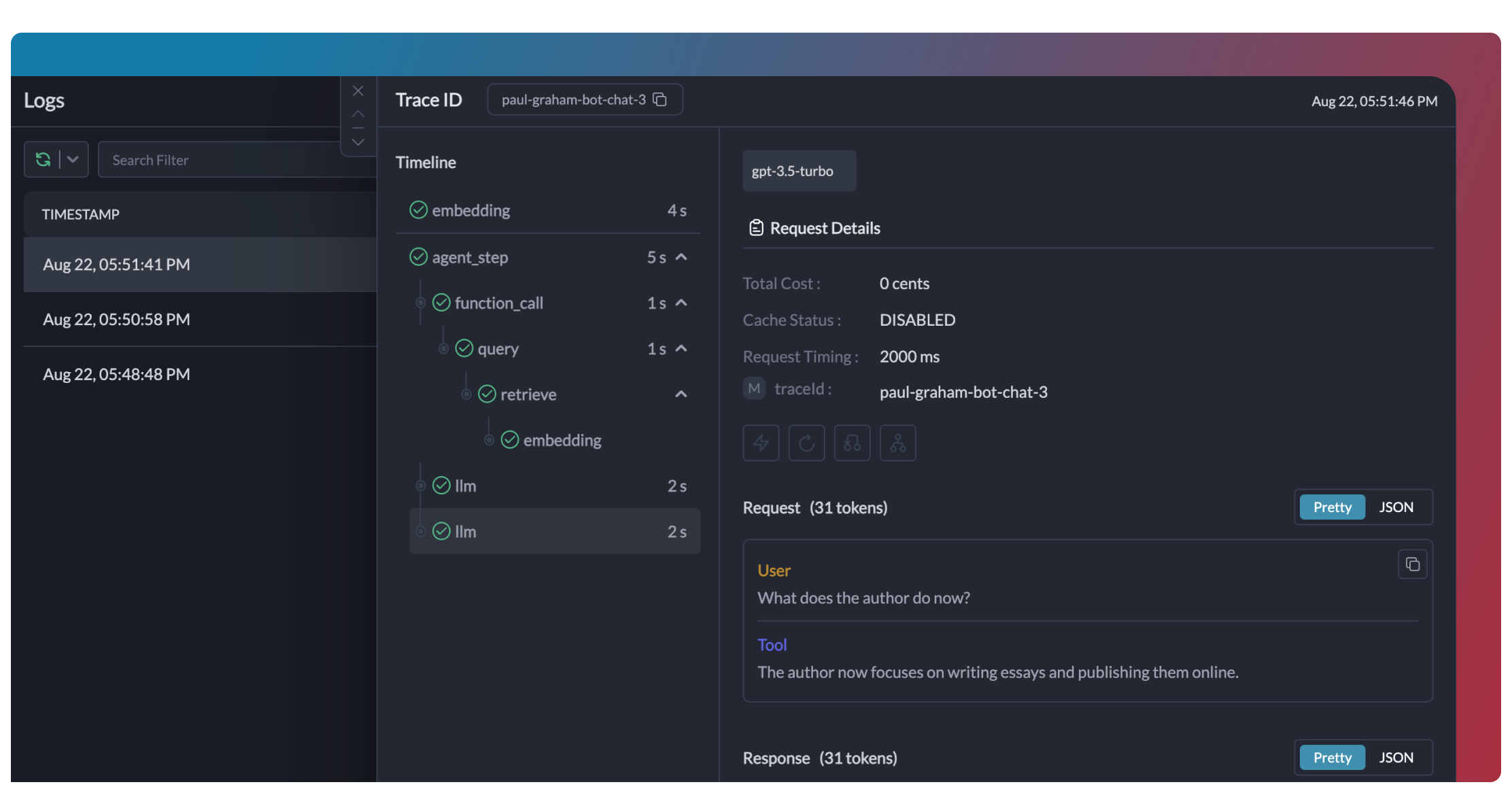

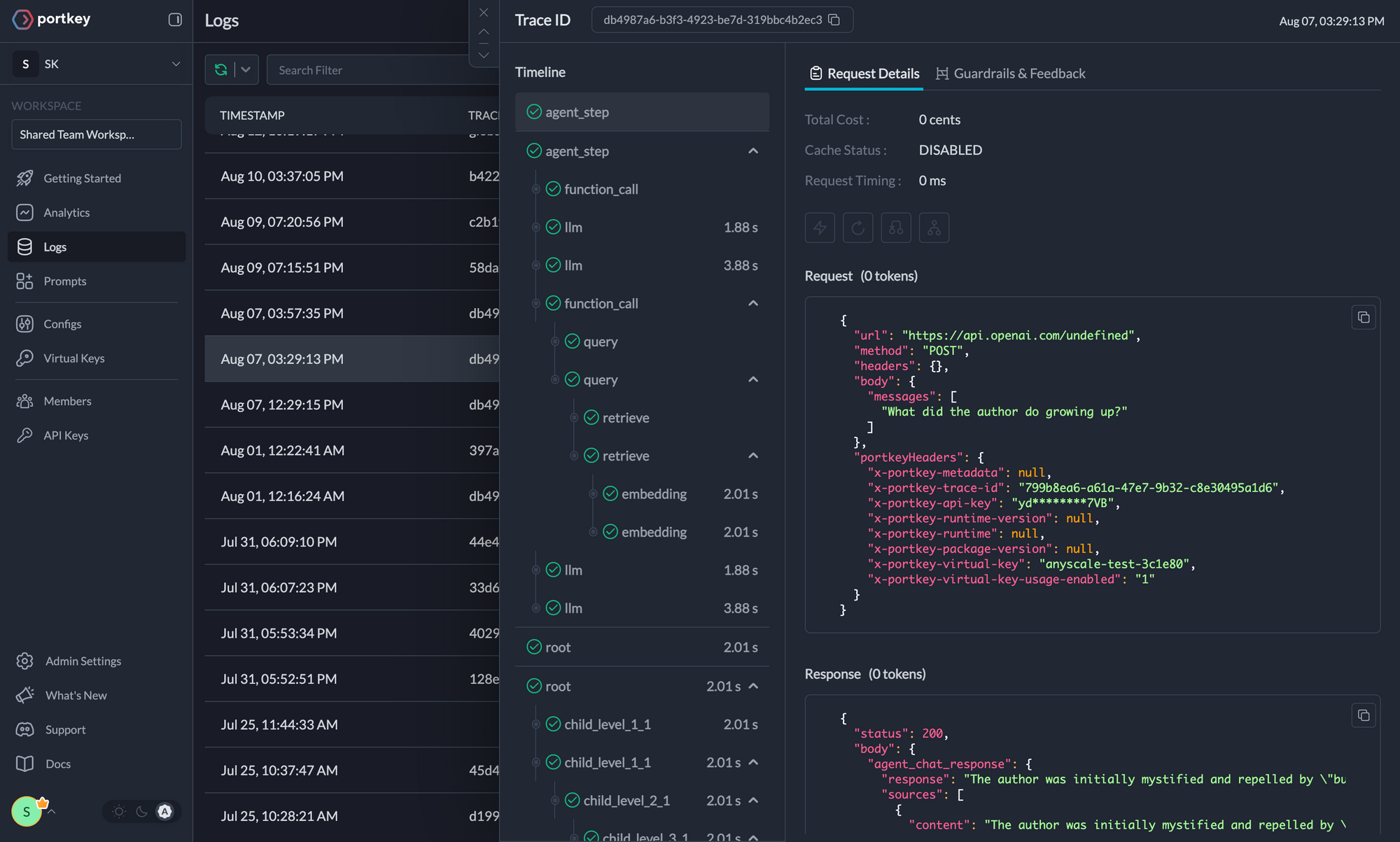

+Traces provide a hierarchical view of your crew's execution, showing the sequence of LLM calls, tool invocations, and state transitions.

+

+```python
+# Add trace_id to enable hierarchical tracing in Portkey
+portkey_llm = LLM(
+    model="gpt-4o",
     base_url=PORTKEY_GATEWAY_URL,
     api_key="dummy",
     extra_headers=createHeaders(
         api_key="YOUR_PORTKEY_API_KEY",
-        virtual_key="YOUR_AZURE_VIRTUAL_KEY", #You don't need provider when using Virtual keys
-        trace_id="azure_agent"
+        virtual_key="YOUR_OPENAI_VIRTUAL_KEY",
+        trace_id="unique-session-id"  # Add unique trace ID
+    )
+)
+```
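One way to get a distinct trace per crew run is to generate the ID when the run starts rather than hard-coding it. The sketch below uses only the standard library; `new_trace_id` and the `crew-run` prefix are hypothetical names chosen for illustration and are not part of Portkey or CrewAI.

```python
import uuid


def new_trace_id(prefix: str = "crew-run") -> str:
    """Return a trace ID that differs on every call (hypothetical helper)."""
    # uuid4 is random, so two runs will not share an ID in practice.
    return f"{prefix}-{uuid.uuid4().hex}"


trace_id = new_trace_id()
print(trace_id)  # pass this value as trace_id=... in createHeaders
```

Keeping a readable prefix in front of the random part may make it easier to spot related runs when filtering by trace ID.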


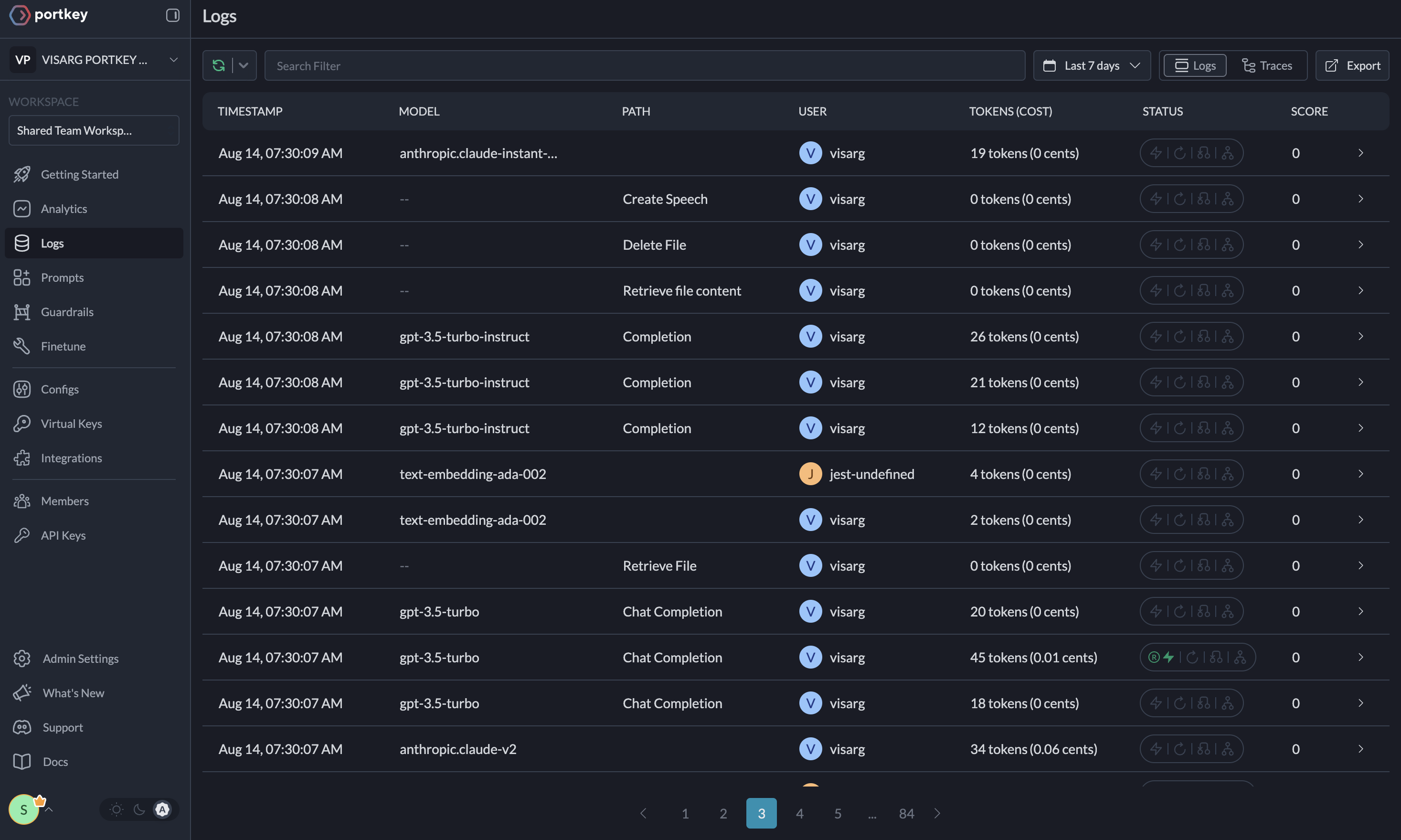

+Portkey logs every interaction with LLMs, including:

+

+- Complete request and response payloads

+- Latency and token usage metrics

+- Cost calculations

+- Tool calls and function executions

+

+All logs can be filtered by metadata, trace IDs, models, and more, making it easy to debug specific crew runs.


+Portkey provides built-in dashboards that help you:

+

+- Track cost and token usage across all crew runs

+- Analyze performance metrics like latency and success rates

+- Identify bottlenecks in your agent workflows

+- Compare different crew configurations and LLMs

+

+You can filter and segment all metrics by custom metadata to analyze specific crew types, user groups, or use cases.


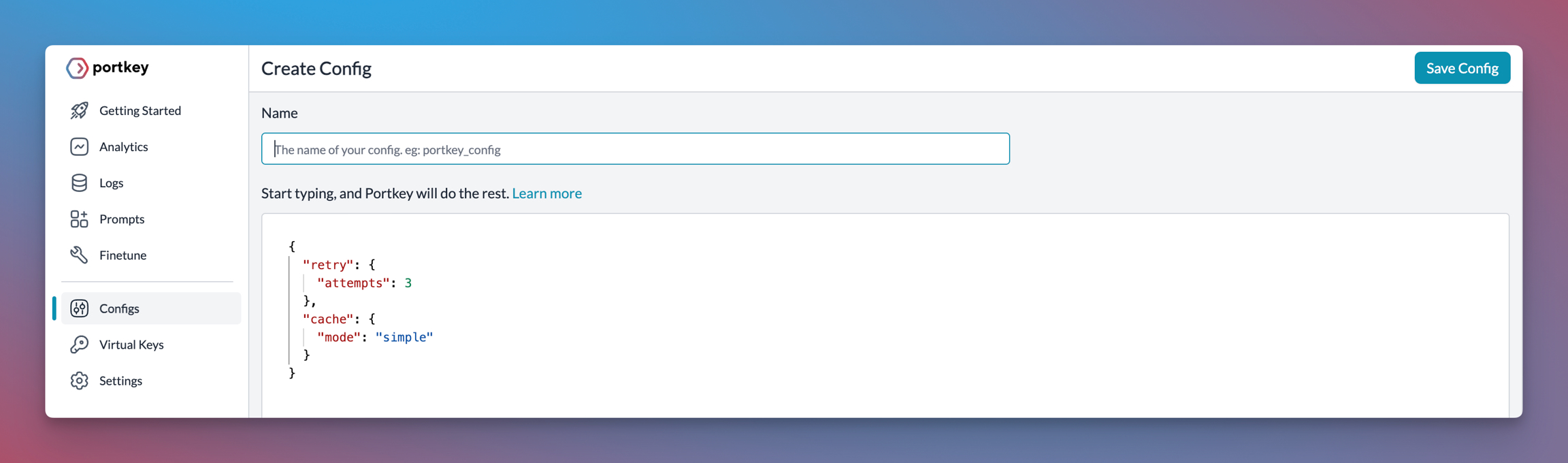

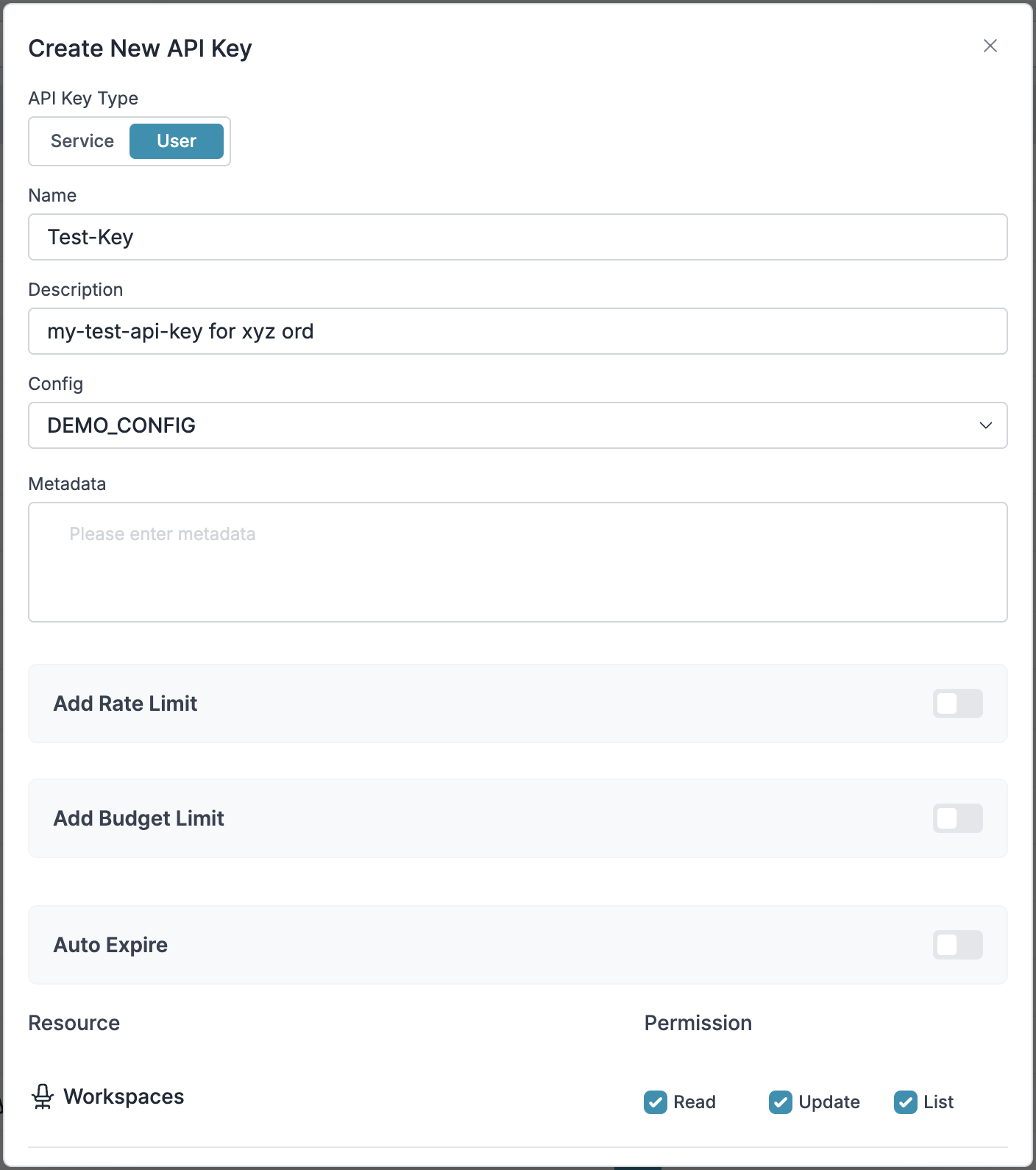

+Add custom metadata to your CrewAI LLM configuration to enable powerful filtering and segmentation:

+

+```python
+portkey_llm = LLM(
+    model="gpt-4o",
+    base_url=PORTKEY_GATEWAY_URL,
+    api_key="dummy",
+    extra_headers=createHeaders(
+        api_key="YOUR_PORTKEY_API_KEY",
+        virtual_key="YOUR_OPENAI_VIRTUAL_KEY",
+        metadata={
+            "crew_type": "research_crew",
+            "environment": "production",
+            "_user": "user_123",  # Special _user field for user analytics
+            "request_source": "mobile_app"
+        }
     )
 )
```

+This metadata can be used to filter logs, traces, and metrics on the Portkey dashboard, allowing you to analyze specific crew runs, users, or environments.
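If several crews share the same setup code, building the metadata dictionary in one place keeps the keys consistent across runs. This is a minimal sketch: `build_metadata` is a hypothetical helper, and converting extra values to strings is an assumption made here to keep the payload simple, not a requirement stated on this page.

```python
def build_metadata(
    crew_type: str, environment: str, user_id: str, **extra: object
) -> dict[str, str]:
    """Assemble the metadata dict passed to createHeaders (hypothetical helper)."""
    metadata = {
        "crew_type": crew_type,
        "environment": environment,
        "_user": user_id,  # special _user field for user analytics
    }
    # Extra keys such as request_source are added with stringified values.
    metadata.update({key: str(value) for key, value in extra.items()})
    return metadata


metadata = build_metadata(
    "research_crew", "production", "user_123", request_source="mobile_app"
)
print(metadata)
```

The resulting dictionary can be passed as `metadata=...` in the `createHeaders` call shown above.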


-[Portkey](https://portkey.ai/?utm_source=crewai&utm_medium=crewai&utm_campaign=crewai) is a 2-line upgrade to make your CrewAI agents reliable, cost-efficient, and fast.

-Portkey adds 4 core production capabilities to any CrewAI agent:

-1. Routing to **200+ LLMs**

-2. Making each LLM call more robust

-3. Full-stack tracing & cost, performance analytics

-4. Real-time guardrails to enforce behavior

+## Introduction

-## Getting Started

+Portkey enhances CrewAI with production-readiness features, turning your experimental agent crews into robust systems by providing:

+

+- **Complete observability** of every agent step, tool use, and interaction

+- **Built-in reliability** with fallbacks, retries, and load balancing

+- **Cost tracking and optimization** to manage your AI spend

+- **Access to 200+ LLMs** through a single integration

+- **Guardrails** to keep agent behavior safe and compliant

+- **Version-controlled prompts** for consistent agent performance

+

+

+### Installation & Setup


-

-

-

-### 1. Use 250+ LLMs

-Access various LLMs like Anthropic, Gemini, Mistral, Azure OpenAI, and more with minimal code changes. Switch between providers or use them together seamlessly. [Learn more about Universal API](https://portkey.ai/docs/product/ai-gateway/universal-api)

-

-

-Easily switch between different LLM providers:


+For detailed key management instructions, see our [API Keys documentation](/api-reference/admin-api/control-plane/api-keys/create-api-key).
